Security in GCP was built in from the start; it is certainly not an afterthought! Security is paramount in every service that GCP provides. In the public cloud era, a single security breach can cause not only direct financial losses but also lasting damage to a company's future business. For this reason, GCP takes the view that defense in depth is better than relying on any single piece of technology, and it offers far more security than most customers could afford to build on-premises. In this article, we will look at the key topics you need to understand to be successful in the exam and give an overview of security and the importance GCP places on it.

In this article, we will cover the following topics:

- Introduction to security

- Cloud Identity

- Resource Manager

- IAM

- Organization Policies

- Service accounts

- Firewall rules and load balancers

- Cloud Web Security Scanner

- Monitoring and logging

- Encryption

- Penetration testing in GCP

- Industry regulations

- CI/CD security overview

- Additional security services

Introduction to security

Let's start this article with a brief introduction to GCP's approach to security. As we mentioned previously, security is not an afterthought; it is built into GCP's services. But before we even think about securing our own services, we should recognize that GCP takes a holistic view of security. You can see this in the way it restricts physical access to data centers and uses custom hardware and hardened operating systems in its software stack.

Important Note

Google uses custom hardware with security in mind and uses a hardened version of Linux for the software stack, which is monitored for binary modifications and enforces trusted server boots.

Storage is a key service for any cloud provider, and GCP encrypts data at rest by default in all of its storage services. Customers can supply or manage their own encryption keys, or Google can manage keys on their behalf. When physical disks are retired, their sectors are zeroed, and if data cannot be deleted, the disks are destroyed in a multi-stage crusher. Note, however, that when a customer deletes data, it can take up to 180 days for that data to be physically deleted.

Another major security concern is attacks from outside the organization. To protect against internet attacks, services register with the Google Front End (GFE), which ensures that incoming TLS connections use correct certificates and provides protection against Distributed Denial-of-Service (DDoS) attacks. In addition, GCP load balancers offer extra protection, while Cloud VPN and direct connections offer further options for securing traffic.

Google invests heavily in securing its infrastructure, which is designed in progressive security layers. As a quick reference, let's briefly look at these main infrastructure security layers:

- Securing Low-Level Infrastructure: Google incorporates multiple layers of physical protection. Google designs and builds its own data centers, and access to them is limited to a very small group of Google employees. Biometric identification, metal detection, vehicle barriers, and laser-based intrusion detection systems are just some of the technologies that provide a physical layer of protection.

Google also designs its own server boards and networking equipment. This lets Google vet the component vendors it works with and audit and validate the security properties of their components. Custom hardware security chips allow Google to securely identify and authenticate Google devices at the hardware level.

Finally, Google uses cryptographic signatures over low-level components such as the kernel, BIOS, and base operating system to ensure server machines are booting the correct software stack.

- Securing Service Deployment: A service is an application binary that a developer has written and wants to run on Google's infrastructure. Each service that runs on Google's infrastructure has an associated service account identity. Clients use this identity to make sure they are communicating with the intended server. Google uses cryptographic authentication and authorization for inter-service communication at the application layer.

- Securing Data Storage: Google's storage services can be configured to use keys from a central key management system to encrypt data before it is written to physical storage. This isolates Google's infrastructure from potential threats such as malicious disk firmware. Google also uses hardware encryption support in its hard drives and SSDs and tracks each drive through its life cycle.

- Securing Internet Communications: Google's infrastructure consists of large sets of physical machines connected over LANs and WANs, so it must defend against denial-of-service (DoS) attacks. Exposing only a subset of machines directly to external internet traffic helps with this. Services such as Cloud Armor also help prevent DoS attacks.

Measures such as multi-factor authentication also help secure internet communication.

- Operational Security: Google also needs to operate its infrastructure securely. It provides code libraries and frameworks that help eliminate XSS vulnerabilities in web apps, as well as tools that automatically detect security bugs. Google runs a Vulnerability Reward Program that pays anyone who discovers bugs, and it invests heavily in intrusion detection.

Exam Tip

We will cover security in more depth than the exam requires; however, this information is vital for the role of a cloud architect. For the exam, we recommend that you focus on the following areas:

- Cloud IAM roles
- What Cloud Identity is
- What Cloud Key Management Service (KMS) does
- The difference between Customer-Supplied Encryption Keys (CSEKs) and Customer-Managed Encryption Keys (CMEKs)
- Encryption at rest and in transit
- PCI compliance

Shared responsibility model

Before we look deeper into security options, it is important to point out that GCP, like the other main public cloud vendors, follows what is known as a shared responsibility model. As we touched on previously, Google invests a lot in the security of its infrastructure. However, we, as consumers of the platform, also have responsibilities.

On an IaaS service such as Compute Engine, for example, Google is not responsible for what resides inside the guest operating system. Understanding the principle of shared responsibility is important for the exam. Google won't be responsible for everything in your architecture, so we must be prepared to take on our share of the responsibility. The level of responsibility that Google and the customer each take differs from one GCP service to another.

As an example, let's look at Cloud Storage. Google manages the encryption of storage and the hardware providing the service, and it provides audit logging. However, it is not responsible for the content stored in Cloud Storage or for the network security used to access it. Likewise, access to and authentication of the content are the owner's responsibility. Compare this to BigQuery, which is a PaaS offering. Here, Google takes responsibility for network security and authentication.

The following diagram shows what the customer is expected to manage in each service model. From left to right, we can see the difference between fully self-managed on-premises data centers and SaaS offerings:

Figure 15.1 – Shared responsibility model

Now that we have introduced security at a high level, let's move on to understanding Cloud Identity in GCP.

Cloud Identity

Cloud Identity is a key GCP service that Google offers as an Identity-as-a-Service (IDaaS) solution. It is optional, and you can instead take a more basic approach of applying individual permissions to services or projects. However, for enterprise organizations, an identity service makes far more sense. It lets businesses manage who has access to their resources and services within a GCP organization, all from a single pane of glass. Cloud Identity can be used as a standalone product for domain-based user accounts and groups.

Note that Google also offers a similar management console for Google Workspace users. It works in the same way, and user and domain management have a lot in common. You may wish to investigate this further. For this article and, more importantly, the exam, we will focus solely on Cloud Identity.

Like many on-premises directories that reduce the overhead of user administration, Cloud Identity lets us centrally manage our users in the cloud. It offers a single pane of glass, called the Google Admin console, for administering users, groups, and settings. To use Cloud Identity, your domain should be able to receive email, which lets you keep using your existing web and email addresses as normal. Your GCP organization has a single Cloud Identity, and the organization is the root node in the resource hierarchy. In other words, it is the supernode of all your projects.

There are two editions of Cloud Identity: a free edition and a premium subscription. The premium subscription offers additional features, such as device management, security, and application management. A full comparison can be found here: https://support.google.com/cloudidentity/answer/7431902?hl=en. Finally, note that Cloud Identity is not controlled through the GCP console but through the Google Admin console. Once you're logged in to the Admin console, you can add users, create groups, assign members to groups, and disable users.

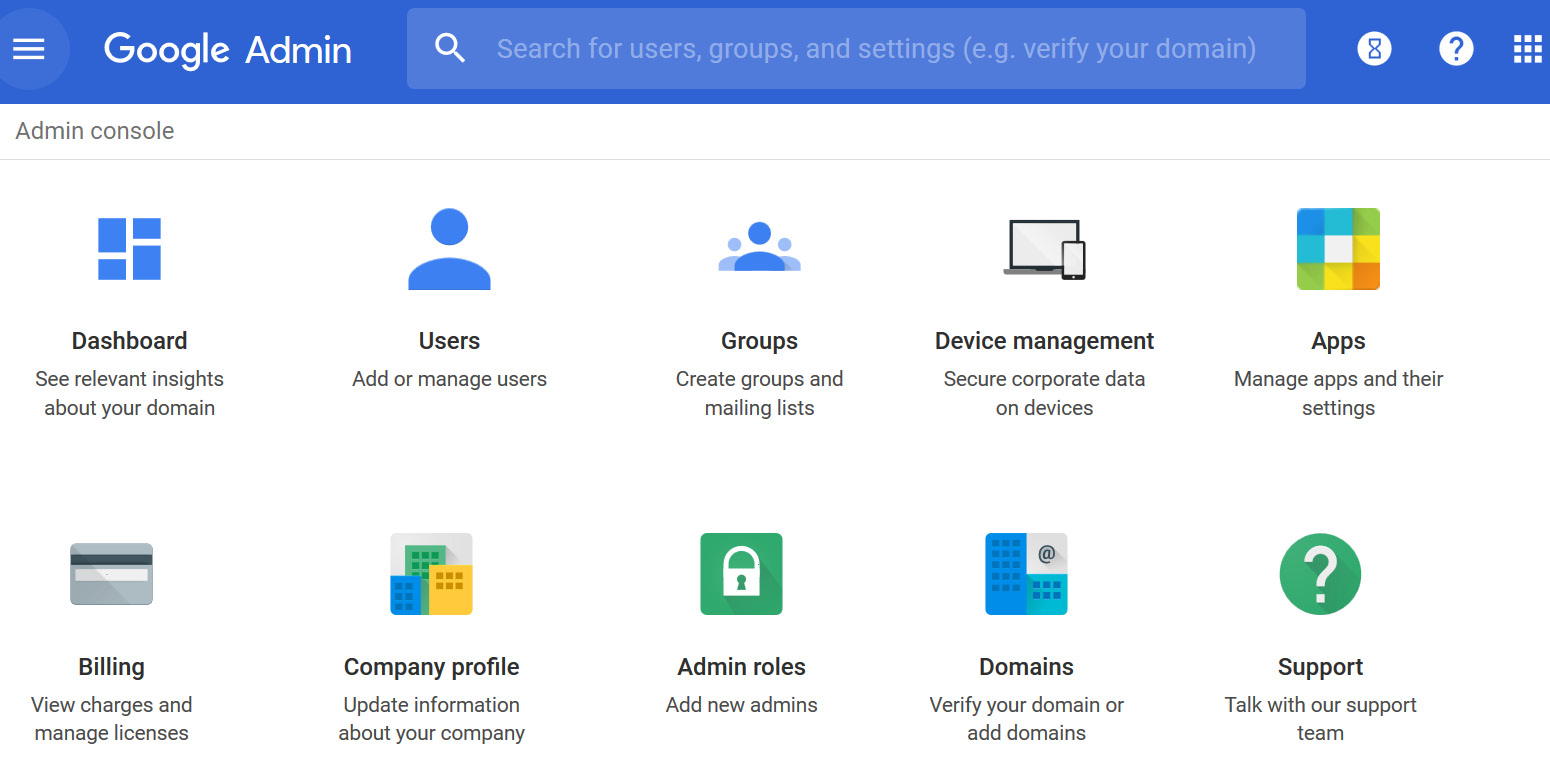

The following is a screenshot of what the Google Admin console page looks like:

Figure 15.2 – Google Admin console

Adding users manually creates administrative overhead and does not scale to a large environment. The whole point of Cloud Identity is to simplify the management of users and groups, because an organization is unlikely to be able to rely on one person to add, remove, and manage everything. This is where Google Cloud Directory Sync (GCDS) comes in.

Many organizations already have an LDAP directory such as Microsoft Active Directory (AD). GCDS synchronizes an organization's AD or LDAP directory to Cloud Identity and is highly scalable. Synchronization is one-way only, from on-premises to GCP, so your on-site directory is never modified. GCDS lets the administrator run delta syncs, schedule synchronizations, and perform tasks manually. If we need to revoke a user's permissions or disable the user, the results are immediate.
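The following minimal Python sketch makes the one-way, delta-based idea concrete. It is a toy model under stated assumptions, not GCDS itself. Plain dictionaries stand in for the on-premises directory and for Cloud Identity, and the function names are hypothetical. It also assumes a configuration in which users missing from the source are removed from the target. The source dictionary is only ever read.

```python
# Toy model of a one-way, delta-based directory sync (source -> Cloud Identity).
# Hypothetical: plain dicts stand in for the LDAP/AD directory and for Cloud
# Identity, and users missing from the source are simply removed. This is an
# illustration of the idea, not how GCDS is implemented.
from typing import Dict, List, Tuple

Directory = Dict[str, str]  # primary email -> display name (simplified record)


def plan_delta(source: Directory, target: Directory) -> Tuple[List[str], List[str], List[str]]:
    """Work out only what changed: (to_add, to_update, to_remove)."""
    to_add = sorted(u for u in source if u not in target)
    to_update = sorted(u for u in source if u in target and source[u] != target[u])
    to_remove = sorted(u for u in target if u not in source)
    return to_add, to_update, to_remove


def apply_delta(source: Directory, target: Directory) -> Directory:
    """Return the synced target. The source is only ever read, never written."""
    to_add, to_update, to_remove = plan_delta(source, target)
    synced = dict(target)
    for user in to_add + to_update:
        synced[user] = source[user]
    for user in to_remove:
        del synced[user]
    return synced


on_prem = {"alice@example.com": "Alice", "bob@example.com": "Robert"}
cloud = {"bob@example.com": "Bob", "carol@example.com": "Carol"}
print(plan_delta(on_prem, cloud))
# (['alice@example.com'], ['bob@example.com'], ['carol@example.com'])
```

In this model, a second run against already-synced data produces an empty plan.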

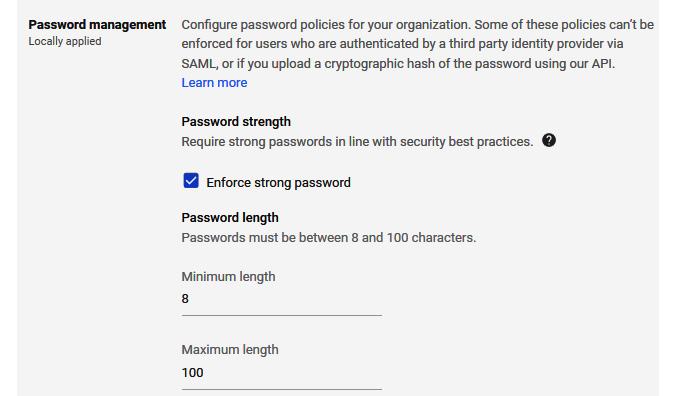

When we start using Cloud Identity for authentication, Google stores and manages all authentication and passwords by default, but this can be disabled. Two-step verification can be added by using multi-factor tools. Alternatively, you can use Single Sign-On (SSO) with SAML 2.0-based authentication, which also supports multi-factor authentication (MFA). Finally, password complexity can be configured in Cloud Identity's password management settings to align with your business's existing policies:

Figure 15.3 – Password management

In the preceding screenshot, we can see an example of the Password management screen. In the next section, we will look at GCP Resource Manager.

Resource Manager

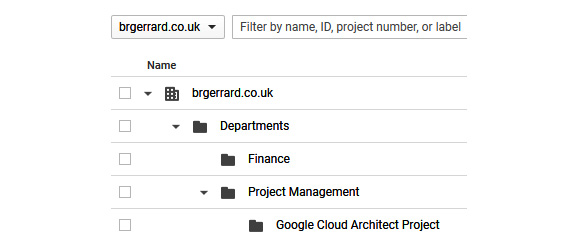

GCP Resource Manager lets you create and manage a hierarchical grouping of resources such as organizations, folders, and projects. Let's look at an example with an organization, brgerrard.co.uk, and several folders underneath it that add structure. Folders are optional but can be used to group projects. Access to folders follows the hierarchy. If you have full access to the Departments folder, for example, that access is inherited by everything beneath it, such as the Google Cloud Architect Project folder, as shown in the following screenshot:

Figure 15.4 – Resource Manager

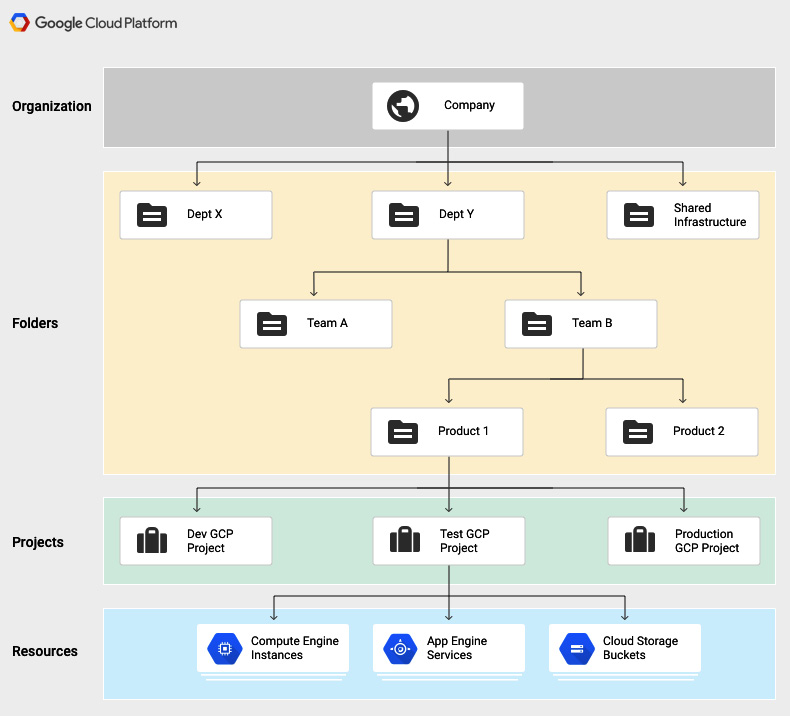

A good example to think of would be the separation of development, testing, and production environments in GCP. Separate projects for each environment allow you to grant access to only those who need access to the resources. The following screenshot shows how the hierarchy of GCP is set up:

Figure 15.5 – GCP hierarchy (Source: https://cloud.google.com/resource-manager/docs/cloud-platform-resource-hierarchy, License: https://creativecommons.org/licenses/by/4.0/legalcode)

It's important to understand that setting permissions at a higher level results in permission inheritance. For example, granting a user permission to the Dept Y folder means that the user will also have the same permissions applied to all subfolders and projects. Therefore, it is important to design your organization and permissions in such a way that they only grant access to the resources that are required to fulfill the requested role.
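The inheritance rule can be modeled in a few lines of Python. This is a hypothetical illustration, not a GCP API: the node names, member strings, and the effective_roles helper are made up, and real IAM evaluation involves more than this. It shows that a role granted on a folder reaches every descendant, while a grant on a project never flows up or sideways.

```python
# Toy model of IAM policy inheritance down the resource hierarchy.
# Hypothetical names throughout; not a GCP API.
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Node:
    name: str
    parent: Optional["Node"] = None
    # role -> members granted that role directly on this node
    bindings: Dict[str, Set[str]] = field(default_factory=dict)

    def grant(self, role: str, member: str) -> None:
        self.bindings.setdefault(role, set()).add(member)


def effective_roles(node: Node, member: str) -> Set[str]:
    """Union of roles granted to member on node and on all of its ancestors."""
    roles: Set[str] = set()
    current: Optional[Node] = node
    while current is not None:
        roles |= {role for role, members in current.bindings.items() if member in members}
        current = current.parent
    return roles


org = Node("organizations/example.com")
dept_y = Node("folders/dept-y", parent=org)
team_b = Node("folders/team-b", parent=dept_y)
project = Node("projects/prod-app", parent=team_b)
sibling = Node("folders/dept-x", parent=org)

dept_y.grant("roles/editor", "user:dana@example.com")
project.grant("roles/viewer", "user:eli@example.com")

print(effective_roles(project, "user:dana@example.com"))  # {'roles/editor'} (inherited)
print(effective_roles(sibling, "user:dana@example.com"))  # set() (not a descendant)
```

This is why a broad grant high in the tree deserves careful thought: every project added under that folder later picks it up automatically.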

In the next section, we will look at Identity and Access Management.

Identity and Access Management (IAM)

Cloud IAM lets us manage who can access our GCP resources. It allows us to grant only the specific access that is necessary. This prevents unwanted access and helps us meet any separation-of-duties requirements. Granting only the access that is needed is known as the security principle of least privilege, and we will look at it in detail shortly. First, let's look at some key concepts of IAM. In Cloud IAM, we grant access to members, which can be any of the following types:

- Google accounts: These represent someone who interacts with GCP, such as a developer.

- Service accounts: These belong to your application and not an end user. We will look at service accounts in more detail later in this article, in the Service accounts section.

- Google groups: These are named collections of Google accounts and service accounts, and they are a good way to apply an access policy to a collection of users. A Google group can be granted roles through IAM. One important exception is that a group can only be granted the Owner role on a project if the group is part of the same organization.

- Google Workspace Domain: This represents all of the Google accounts created in an organization's Google Workspace account (formerly G Suite).

- Cloud Identity Domain: Similar to Google Workspace, this domain represents all of the Google accounts in an organization.

The following are some of the concepts that are related to access management:

- Resources: Resources include projects, Compute Engine instances, and Cloud Storage buckets.

- Permissions: A permission determines which operations are allowed on a resource and takes the form <service>.<resource>.<verb>; for example, compute.instances.list. Permissions cannot be assigned directly to a user.

- Roles: Roles are a collection of permissions. To provide a user with access to a resource, we grant them a role rather than assigning permissions directly to the user. There are three kinds of roles – that is, basic, predefined, and custom – and we will discuss them later in this article. Please note that Basic roles were originally known as Primitive roles.

- IAM Policy: An IAM policy is a collection of bindings that define who has what type of access. You attach a policy to a resource, and IAM uses it to enforce access control whenever the resource is accessed.
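These concepts can be sketched as a small Python model. The <service>.<resource>.<verb> format is the one described above. The role-to-permission mapping is a hypothetical subset chosen for illustration, and the Binding and is_allowed names are our own, not part of any Google library.

```python
# Toy model of IAM permissions, roles, and an allow policy. Permission strings
# follow the <service>.<resource>.<verb> form; the role contents are a
# hypothetical subset for illustration, not the full predefined roles.
import re
from typing import Dict, List, NamedTuple, Set, Tuple

PERMISSION_RE = re.compile(r"^[a-z]+\.[a-zA-Z]+\.[a-zA-Z]+$")

ROLES: Dict[str, Set[str]] = {
    "roles/compute.viewer": {"compute.instances.get", "compute.instances.list"},
    "roles/storage.objectViewer": {"storage.objects.get", "storage.objects.list"},
}


class Binding(NamedTuple):
    role: str          # a role is granted, never a bare permission
    members: Set[str]  # e.g. "user:...", "group:...", "serviceAccount:..."


def split_permission(permission: str) -> Tuple[str, str, str]:
    """Validate and split a permission into (service, resource, verb)."""
    if not PERMISSION_RE.match(permission):
        raise ValueError(f"not a <service>.<resource>.<verb> permission: {permission}")
    service, resource, verb = permission.split(".")
    return service, resource, verb


def is_allowed(policy: List[Binding], member: str, permission: str) -> bool:
    """A member holds a permission only through a role bound to it in the policy."""
    split_permission(permission)  # reject malformed permission strings
    return any(
        member in b.members and permission in ROLES.get(b.role, set())
        for b in policy
    )


policy = [Binding("roles/compute.viewer", {"user:dev@example.com"})]
print(split_permission("compute.instances.list"))  # ('compute', 'instances', 'list')
print(is_allowed(policy, "user:dev@example.com", "compute.instances.list"))    # True
print(is_allowed(policy, "user:dev@example.com", "compute.instances.delete"))  # False
```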

Important Note

In IAM, you grant access to principals. These can be of the following types: Google account, service account, Google group, Google Workspace domain, Cloud Identity domain, all authenticated users, or all users.

Now that we have some background knowledge of IAM, let's continue with some examples. We previously mentioned the principle of least privilege, and we now know that we should only grant access to exactly what is necessary.

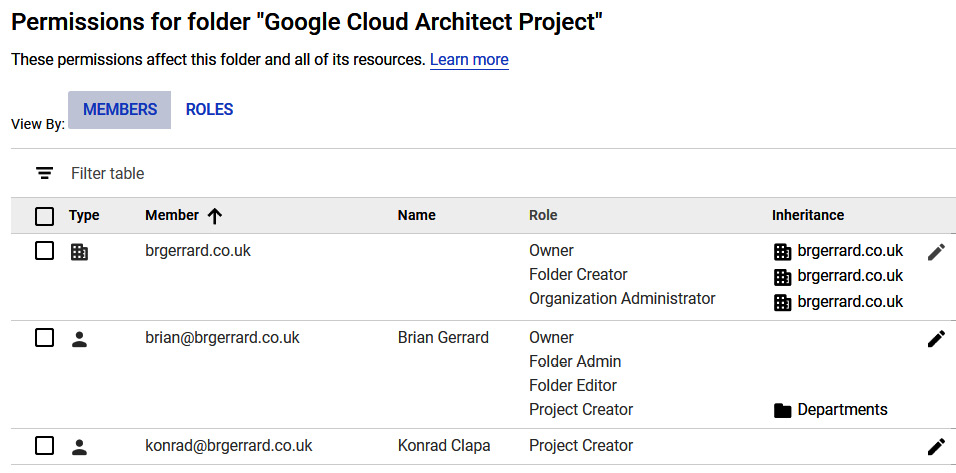

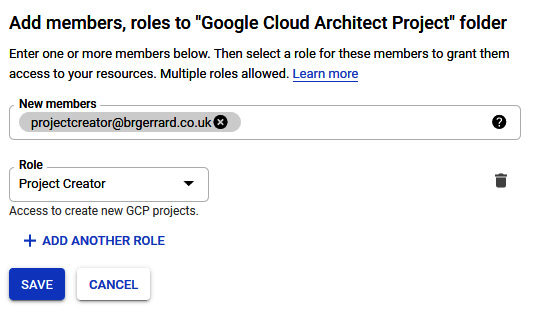

In the following example, we'll look at the Google Cloud Architect Project folder permissions. We may have one user who should be able to create projects in all folders and another user who should only be able to create projects in a specific folder. In the following screenshot, we are granting a user the Project Creator role:

Figure 15.6 – Applying permissions

Basic roles existed before Cloud IAM: these are the legacy Owner, Editor, and Viewer roles. These roles are coarse-grained, so applying any of them grants a wide spectrum of permissions. This does not follow the principle of least privilege! However, there may be some cases where we wish to use basic roles. For example, we may work in a small cross-functional team where the granularity of IAM is not required. Similarly, in a testing or development environment, we may not need fully granular permissions.

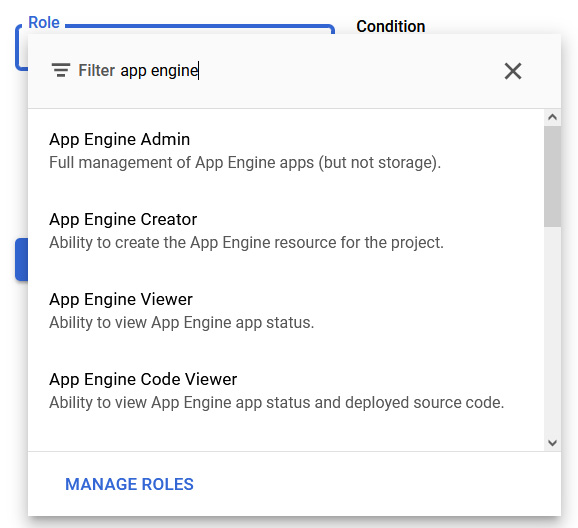

If we want to be more granular, we should use predefined roles and base our roles on job functions. These roles are created and maintained by Google, so permissions are automatically updated as necessary whenever Google Cloud adds new features or services. As an example, here are the predefined roles for App Engine:

Figure 15.7 – Predefined roles

If we want to create roles with specific permissions and not the Google-provided collection, then we can utilize custom roles. These may provide more granularity according to our requirements, but it should be noted that they are user-managed and therefore come with overhead. They can, however, prevent unwanted access to other resources. Before we create a custom role, we should always check that an existing predefined role – or a combination of predefined roles – doesn't already meet our needs.
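You can picture that check as a small set-cover problem. Find the fewest predefined roles whose combined permissions include everything you need, and consider a custom role only if no such combination exists. The sketch below assumes hypothetical, heavily trimmed role contents; in practice, you would look up the real permissions of each predefined role in the IAM documentation. The function name is our own.

```python
# Sketch: before creating a custom role, check whether one predefined role,
# or a small combination of them, already covers the permissions we need.
# The role contents below are a hypothetical, trimmed subset.
from itertools import combinations
from typing import Dict, FrozenSet, Optional, Set, Tuple

PREDEFINED: Dict[str, FrozenSet[str]] = {
    "roles/compute.viewer": frozenset({"compute.instances.get", "compute.instances.list"}),
    "roles/storage.objectViewer": frozenset({"storage.objects.get", "storage.objects.list"}),
    "roles/compute.instanceAdmin.v1": frozenset({
        "compute.instances.get", "compute.instances.list",
        "compute.instances.start", "compute.instances.stop",
    }),
}


def smallest_covering_roles(required: Set[str], max_roles: int = 2) -> Optional[Tuple[str, ...]]:
    """Return the smallest combination of predefined roles granting every required
    permission, preferring the fewest extra permissions on ties. Return None if no
    combination of up to max_roles roles is enough (a custom role may be needed)."""
    for size in range(1, max_roles + 1):
        candidates = []
        for combo in combinations(sorted(PREDEFINED), size):
            granted = set().union(*(PREDEFINED[r] for r in combo))
            if required <= granted:
                candidates.append((len(granted - required), combo))
        if candidates:
            return min(candidates)[1]
    return None


print(smallest_covering_roles({"compute.instances.get"}))
# ('roles/compute.viewer',)
print(smallest_covering_roles({"compute.instances.list", "storage.objects.get"}))
# ('roles/compute.viewer', 'roles/storage.objectViewer')
print(smallest_covering_roles({"compute.instances.delete"}))
# None
```

Preferring the combination with the fewest extra permissions keeps the result as close to least privilege as the predefined roles allow.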

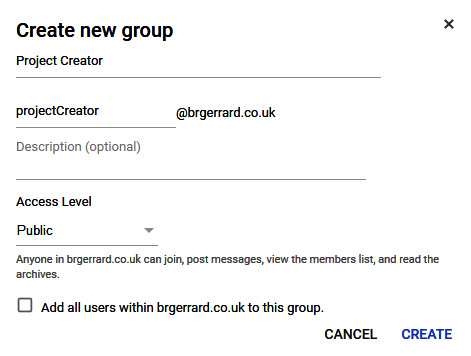

Granting roles to individual users can be tedious; ideally, we should use groups to control access. As an example, let's create a new group called Project Creator from our Cloud Identity admin console. Looking back at Figure 15.2 in the Cloud Identity section, we can do this by browsing to Groups and clicking Add new group:

- Give the group a name, as shown in the following screenshot:

Figure 15.8 – Create new group

- Grant the group access to a resource in the GCP console. In this example, we are assigning the group to a folder:

Figure 15.9 – Adding group members

Now we can control permissions to our resources centrally rather than drilling down into folder structures in the GCP console. This also provides separation of duties, which increases security. Now that we have created our project, suppose we want to allow a user to create VM instances only.

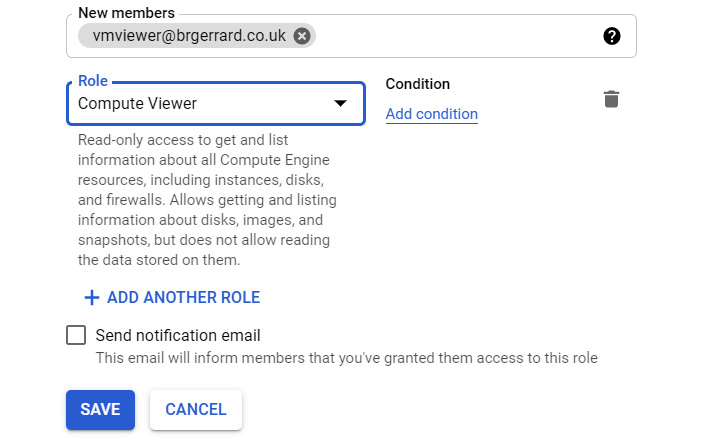

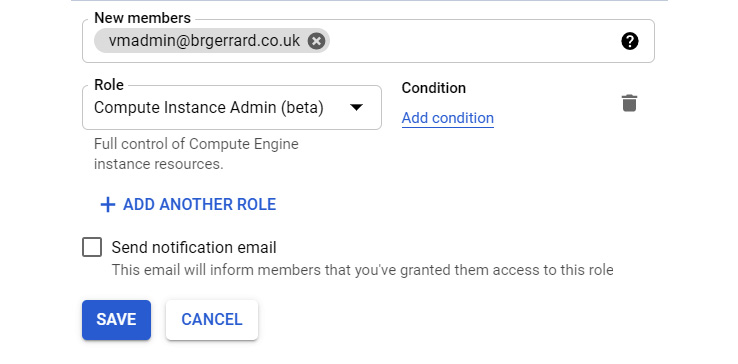

Let's say that, as a project owner, we want to allow one engineer to create VM instances but give a separate engineer read-only access to these compute resources. Again, we can create two new groups in Cloud Identity for this purpose; let's call them vmviewer and vmadmin. We can then assign these groups the relevant predefined roles. Predefined roles are managed by Google, so any permissions that Google later adds to a role are automatically granted to the role's members.

Our vmviewer group is now assigned the Compute Viewer role at the project level, as shown in the following screenshot. Note that we can also add a condition. An IAM condition is an expression that must evaluate to true for the role binding to take effect. For example, you may want the role to apply only during certain hours of the day:

Figure 15.10 – Assigning a role

Our vmadmin group has now been assigned the Compute Instance Admin role at the project level:

Figure 15.11 – Assigning a role
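The following toy Python sketch models the vmviewer and vmadmin setup, including a time-based condition. The group addresses, the office-hours window, and the trimmed role contents are hypothetical. Real IAM conditions are written in Common Expression Language (CEL) and evaluated by Google, so the Python function here only mimics the idea.

```python
# Toy model of the vmviewer/vmadmin example: roles are bound to groups, users
# get access through group membership, and a binding may carry a condition.
# Hypothetical group names, time window, and trimmed role contents.
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Dict, List, Optional, Set

ROLES: Dict[str, Set[str]] = {
    "roles/compute.viewer": {"compute.instances.get", "compute.instances.list"},
    "roles/compute.instanceAdmin.v1": {
        "compute.instances.get", "compute.instances.list",
        "compute.instances.create", "compute.instances.delete",
    },
}

GROUPS: Dict[str, Set[str]] = {
    "group:vmviewer@example.com": {"user:viewer@example.com"},
    "group:vmadmin@example.com": {"user:admin@example.com"},
}


@dataclass
class Binding:
    role: str
    members: Set[str]
    condition: Optional[Callable[[datetime], bool]] = None  # None = unconditional


def office_hours(now: datetime) -> bool:
    """Stand-in for an IAM condition: true between 09:00 and 17:00."""
    return 9 <= now.hour < 17


POLICY: List[Binding] = [
    Binding("roles/compute.viewer", {"group:vmviewer@example.com"}),
    Binding("roles/compute.instanceAdmin.v1", {"group:vmadmin@example.com"}, office_hours),
]


def principals_for(user: str) -> Set[str]:
    """The user plus every group that contains the user."""
    return {user} | {group for group, members in GROUPS.items() if user in members}


def can(user: str, permission: str, now: datetime) -> bool:
    who = principals_for(user)
    for b in POLICY:
        if who & b.members and permission in ROLES[b.role]:
            if b.condition is None or b.condition(now):
                return True
    return False


noon = datetime(2024, 1, 15, 12, 0)
night = datetime(2024, 1, 15, 22, 0)
print(can("user:viewer@example.com", "compute.instances.list", noon))    # True
print(can("user:viewer@example.com", "compute.instances.create", noon))  # False
print(can("user:admin@example.com", "compute.instances.create", noon))   # True
print(can("user:admin@example.com", "compute.instances.create", night))  # False
```

Adding an engineer to vmadmin in Cloud Identity is enough to give them the admin role. The IAM policy itself does not change.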

We can also use the gcloud CLI to add permissions. This command-line tool lets us interact with GCP APIs. As an example, to assign the editor role to a user in the redwing project, we can run the following command (the user's address is shown as the placeholder USER_EMAIL):

gcloud projects add-iam-policy-binding redwing --member='user:USER_EMAIL' --role='roles/editor'

The command prints the project's updated IAM policy, which should now include a roles/editor binding for the user.

The gcloud projects command group can be used for many more functions. We will take a deeper dive into the gcloud command line in the Google Cloud Management Options post.

Important Note

Please refer to the official documentation if you are keen on using this in more depth: https://cloud.google.com/sdk/gcloud/reference/projects/.

Finally, regarding IAM: so far, we have discussed who has permission to perform actions on resources. We should also be aware of organization policies, which let us set restrictions on resources. An organization policy lets us disable certain options that would otherwise be available to users. These restrictions are defined using constraints, which can apply to a single GCP service or to a list of services. For example, suppose we don't want a default network to be available. We could set a constraint that skips default network creation, so that no new VM instances can be placed on a default network.

Previously, we mentioned the importance of service accounts. We will look at these in more depth now.

Service accounts

Applications and VM instances use service accounts to call service APIs, which removes users from any direct involvement. A service account belongs to an application or VM instance rather than to an end user. When we enable the Compute Engine API in a project, a default service account is created automatically.

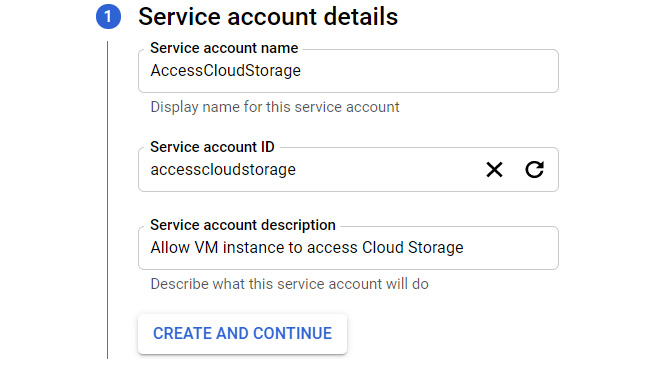

- We can create a new service account by navigating to IAM & Admin | Service Accounts in the GCP console, or by using the gcloud CLI. As an example, let's say we need a VM that has access to Cloud Storage. The following screenshot shows us creating a new service account named AccessCloudStorage:

Figure 15.12 – Adding a service account

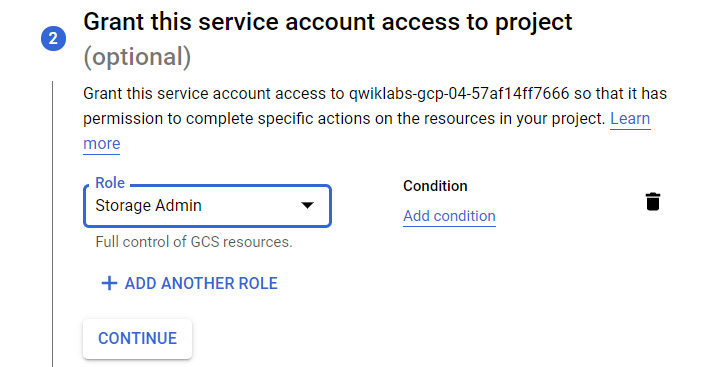

- Once we have created the service account, we can decide whether to grant it specific roles. The following screenshot shows that assigning a role is optional:

Figure 15.13 – Grant this service account access to project

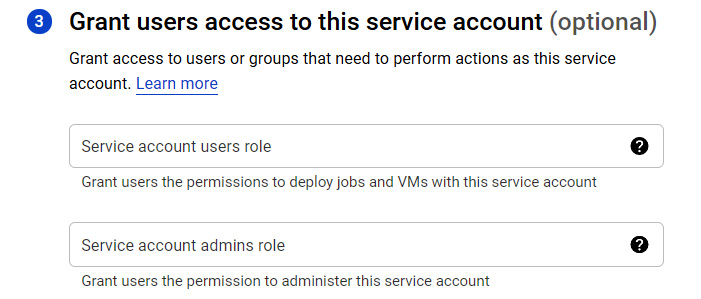

- Optionally, we can grant access to users or groups that need to perform actions as this service account:

Figure 15.14 – Assigning a role

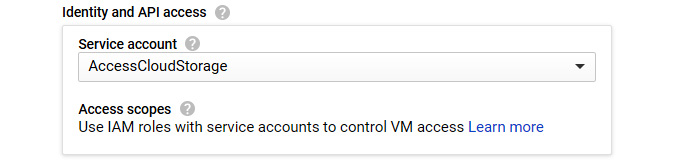

If we revisit the process of creating our VM instance, we can assign this service account under the Identity and API access option:

Figure 15.15 – Identity and API access

This allows the VM instance to use the service account's identity when authenticating API requests. As mentioned previously, we can select any service account that we have created.

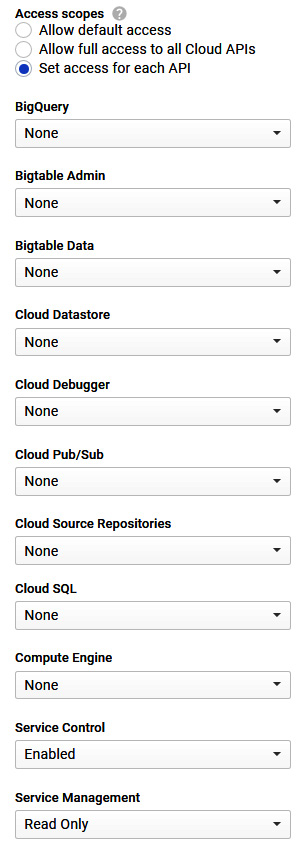

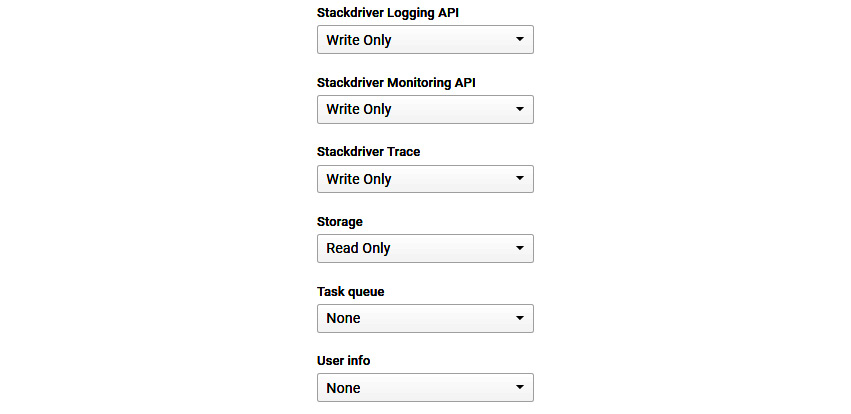

If you select the default service account, you will notice the option to select an access scope. Access scopes determine which APIs the instance's API calls are allowed to reach. To improve security, it is good practice to set access for each API individually. The following is the full list of APIs that are currently available:

Figure 15.16 – List of APIs

Service accounts authenticate using service account keys. There are two types of service account keys:

- Google Cloud-managed: Google Cloud-managed keys are used by services such as Compute Engine and App Engine and cannot be downloaded.

- User-managed: User-managed keys are created and can be downloaded and managed by users of GCP. You are fully responsible for user-managed keys.

We will speak about key management in the Encryption section of this article.

Before we finish talking about service accounts, one final thing to note is that service accounts should still follow the same principles we learned about for IAM. Service accounts should only get the minimum set of permissions required for that service.
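A simple way to reason about least privilege for a service account is to compare what its roles grant with what the workload actually uses. The sketch below is hypothetical. The role contents are a trimmed subset, and the used set is assumed to come from your own analysis of the workload. It reuses the AccessCloudStorage scenario of a VM that only reads objects.

```python
# Sketch of a least-privilege check for a service account: compare the
# permissions granted by its roles with those the workload actually uses.
# Hypothetical, trimmed role contents and usage data.
from typing import Dict, Set

ROLES: Dict[str, Set[str]] = {
    "roles/storage.objectViewer": {"storage.objects.get", "storage.objects.list"},
    "roles/storage.objectAdmin": {
        "storage.objects.create", "storage.objects.delete",
        "storage.objects.get", "storage.objects.list", "storage.objects.update",
    },
}


def granted_permissions(roles: Set[str]) -> Set[str]:
    return set().union(*(ROLES[r] for r in roles))


def excess_permissions(roles: Set[str], used: Set[str]) -> Set[str]:
    """Granted permissions the workload never needed: candidates to remove."""
    return granted_permissions(roles) - used


def missing_permissions(roles: Set[str], used: Set[str]) -> Set[str]:
    """Needed permissions that the current roles do not grant."""
    return used - granted_permissions(roles)


# A VM that only reads objects from Cloud Storage (AccessCloudStorage example).
used = {"storage.objects.get", "storage.objects.list"}
print(sorted(excess_permissions({"roles/storage.objectAdmin"}, used)))
# ['storage.objects.create', 'storage.objects.delete', 'storage.objects.update']
print(excess_permissions({"roles/storage.objectViewer"}, used))  # set()
```

An empty excess set together with an empty missing set means the roles match the workload exactly.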

Exam Tip

This topic is key for the exam. Make sure you understand identities, roles, and resources. We have briefly touched on IAM in previous posts, and in this article, we look at it in more detail. Note the following principles:

- IAM roles are groups of permissions that can be assigned to users, groups, or service accounts.
- There are different types of roles.
- We should follow the principle of least privilege.
- Where possible, we should use groups to control access rather than granting access to individual users.

In this section, we covered service accounts. We explained that they are a special type of account that removes users from direct involvement and that they are an important part of Google Cloud IAM. In the next section, we will look at access control lists, which can be used alongside IAM to control access to Cloud Storage.

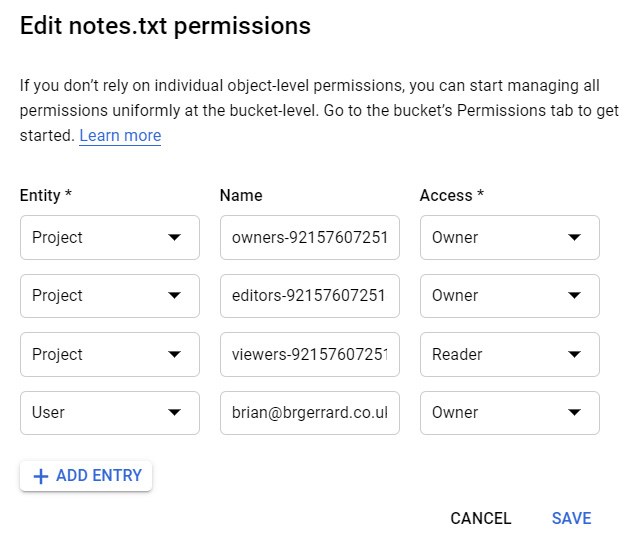

Cloud Storage access management

In addition to IAM permissions, access to Cloud Storage can be controlled using Access Control Lists (ACLs). Use ACLs when you need to set permissions on individual objects rather than on the whole bucket, for example, to grant access to a single object in a bucket. This is because Cloud IAM permissions apply to all of the objects in a bucket. It's vital to understand this, because managing object permissions individually is not ideal from a manageability perspective. If the same permissions apply to all objects in the bucket, you should use IAM to control access.

An ACL entry consists of a permission, which defines what can be performed, and a scope, which defines who can perform it. When a request is made to a bucket or object, access is granted if an ACL entry gives the requester the necessary permission. Otherwise, the request fails with a 403 Forbidden error. An object can only be granted the Owner or Reader permission, as shown in the following screenshot:
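The following sketch shows the permission-plus-scope structure of an object ACL. The scope strings mimic the entity forms used by Cloud Storage, such as user-EMAIL and allUsers. The evaluation logic and function names are a simplified, hypothetical model, not the service's complete behavior.

```python
# Toy model of a Cloud Storage object ACL: each entry pairs a scope (who) with
# a permission (what). Objects accept only READER and OWNER, and OWNER implies
# READER. Simplified, hypothetical evaluation logic.
from typing import List, Tuple

OBJECT_PERMISSIONS = ("READER", "OWNER")
IMPLIES = {"OWNER": {"OWNER", "READER"}, "READER": {"READER"}}

Acl = List[Tuple[str, str]]  # (scope, permission)


def add_entry(acl: Acl, scope: str, permission: str) -> None:
    if permission not in OBJECT_PERMISSIONS:
        raise ValueError(f"objects only support {OBJECT_PERMISSIONS}, got {permission}")
    acl.append((scope, permission))


def check(acl: Acl, requester: str, needed: str) -> int:
    """Return an HTTP-style status: 200 if an entry grants `needed`, else 403."""
    for scope, permission in acl:
        if scope in ("allUsers", requester) and needed in IMPLIES[permission]:
            return 200
    return 403


acl: Acl = []
add_entry(acl, "user-alice@example.com", "OWNER")
print(check(acl, "user-alice@example.com", "READER"))  # 200: OWNER implies READER
print(check(acl, "user-bob@example.com", "READER"))    # 403
```

In this model, a single ("allUsers", "READER") entry is enough to make the object readable by anyone. This is why public grants deserve a second look.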

Figure 15.17 – GCP bucket file permissions

Important Note

In addition to ACLs, GCP also offers the legacy roles Storage Legacy Bucket Owner, Storage Legacy Bucket Reader, and Storage Legacy Bucket Writer.

As with IAM, we should follow the principle of least privilege. If you need to use an ACL, do not grant the Owner permission unless it is needed. Be careful not to make buckets public unnecessarily (by granting access to allUsers). Assigning the wrong permissions to a bucket is a very common security gap in the real world, so think carefully before applying ACLs.

One final thing you should be aware of before the exam is signed URLs. A signed URL grants a visitor access to upload or download an object without needing a Google account. Access is time-limited, and anyone who has the URL can perform the granted read or write. We can create a signed URL with gsutil by running the following command:

gsutil signurl -d 10m Desktop/private-key.json gs://example-bucket/cat.jpeg

In this example, we use private-key.json, a service account private key, to create a URL that grants access to the cat.jpeg object in example-bucket for 10 minutes (-d 10m).
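Why does a signed URL grant time-limited access without a Google account? The simplified Python sketch below shows the mechanism. The signer binds the method, path, and expiry time together under a secret. The verifier rejects any URL whose signature does not match or whose expiry has passed.

This is a stand-in built on assumptions, not the Cloud Storage format. It uses HMAC-SHA256 with a hard-coded demo secret and a made-up query string. Real V4 signed URLs use Google's canonical request format and are typically signed with a service account key, as in the gsutil command above. Use gsutil or the client libraries to create real URLs.

```python
# Simplified signed-URL illustration (NOT the Cloud Storage V4 format).
# Assumptions: HMAC-SHA256 with a hypothetical demo secret, a made-up host,
# and a made-up "expires"/"sig" query format.
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

SECRET = b"hypothetical-demo-secret"  # stand-in for a real signing key


def _signature(method: str, path: str, expires: int) -> str:
    message = f"{method}\n{path}\n{expires}".encode()
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def sign_url(method: str, path: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    expires = int((time.time() if now is None else now) + ttl_seconds)
    query = urlencode({"expires": expires, "sig": _signature(method, path, expires)})
    return f"https://storage.example.test{path}?{query}"


def verify_url(method: str, url: str, now: Optional[float] = None) -> bool:
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    expires = int(params["expires"][0])
    expected = _signature(method, parsed.path, expires)
    if not hmac.compare_digest(expected, params["sig"][0]):
        return False  # method, path, or expiry was tampered with
    return (time.time() if now is None else now) <= expires


t0 = 1_000_000.0
url = sign_url("GET", "/example-bucket/cat.jpeg", 600, now=t0)  # like -d 10m
print(verify_url("GET", url, now=t0 + 60))   # True: still valid
print(verify_url("GET", url, now=t0 + 601))  # False: expired
```

Changing the path, the method, or the expiry invalidates the signature. Anyone holding an unmodified URL can still use it until it expires, so share signed URLs only with their intended recipients.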

Exam Tip

Remember that we should never grant a user or service more permissions than what's required. Always use the principle of least privilege.

Next, we will look at Organization Policy Service.

Organization Policy Service

Organization Policy Service gives us programmatic, centralized control over how our organization's cloud resources can be configured. It also helps development teams stay within compliance boundaries and lets project owners move quickly without worrying about breaking compliance.

It is important to highlight that Organization Policies are different from IAM, which we discussed previously. IAM lets administrators authorize who can act on specific resources based on permissions. Organization Policies, by contrast, let administrators set restrictions on specific resources to determine how those resources can be configured. An organization policy is a set of restrictions, and as administrators, we define the policy to enforce those restrictions on organizations, folders, or projects.

To define such a policy, we choose a particular type of restriction called a constraint. A constraint is applied against a Google Cloud service or a list of services. Essentially, it is a blueprint that defines which behaviors are controlled. A constraint has one of two types: list or Boolean. A list constraint evaluates a list of allowed or denied values set by the administrator. For example, this could be a list of the VM instances that are allowed to have external IP addresses. A Boolean constraint is either enforced or not.

When a policy is set on a resource, all the descendants of that resource will inherit the policy by default. A user with the Organization Policy Administrator role can modify this inheritance if exceptions are required.

A violation occurs when a service is in a state that goes against the organization's policy restrictions. This may happen if a new policy restricts an action or state that a service is already in.
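The following toy Python model pulls together constraint types, inheritance, overrides, and violations. The constraint name compute.skipDefaultNetworkCreation is the real Boolean constraint behind the default-network example mentioned earlier. The list constraint example.allowedRegions is hypothetical, and so are the resource names. The evaluation is deliberately simplified: the nearest policy on the resource or one of its ancestors wins, and the service's policy-merging options are ignored.

```python
# Toy model of Organization Policy constraints and inheritance. Simplified:
# the nearest policy wins; merging options are ignored. "example.allowedRegions"
# is a hypothetical list constraint; resource names are made up.
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Union

PolicyValue = Union[bool, Set[str]]  # bool for Boolean, allowed values for list


@dataclass
class Resource:
    name: str
    parent: Optional["Resource"] = None
    policies: Dict[str, PolicyValue] = field(default_factory=dict)


def effective_policy(resource: Resource, constraint: str) -> Optional[PolicyValue]:
    """Walk up the hierarchy; a policy set lower down overrides inheritance."""
    node: Optional[Resource] = resource
    while node is not None:
        if constraint in node.policies:
            return node.policies[constraint]
        node = node.parent
    return None  # no policy anywhere in the hierarchy


def is_enforced(resource: Resource, constraint: str) -> bool:
    """Boolean constraint: enforced only if the effective policy is True."""
    return effective_policy(resource, constraint) is True


def allows(resource: Resource, constraint: str, value: str) -> bool:
    """List constraint: with no policy set, every value is allowed."""
    policy = effective_policy(resource, constraint)
    return policy is None or (isinstance(policy, set) and value in policy)


def violations(resource: Resource, constraint: str, existing: Dict[str, str]) -> Set[str]:
    """Existing resources (name -> value) whose state the policy no longer allows."""
    return {name for name, value in existing.items() if not allows(resource, constraint, value)}


org = Resource("organizations/example.com", policies={
    "compute.skipDefaultNetworkCreation": True,
    "example.allowedRegions": {"europe-west2"},
})
sandbox = Resource("folders/sandbox", parent=org,
                   policies={"compute.skipDefaultNetworkCreation": False})  # exception
prod = Resource("projects/prod", parent=org)
dev = Resource("projects/dev", parent=sandbox)

print(is_enforced(prod, "compute.skipDefaultNetworkCreation"))  # True (inherited)
print(is_enforced(dev, "compute.skipDefaultNetworkCreation"))   # False (overridden)
print(violations(prod, "example.allowedRegions",
                 {"vm-1": "europe-west2", "vm-2": "us-central1"}))  # {'vm-2'}
```

The folder-level exception in this sketch corresponds to the inheritance change that a user with the Organization Policy Administrator role can make.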

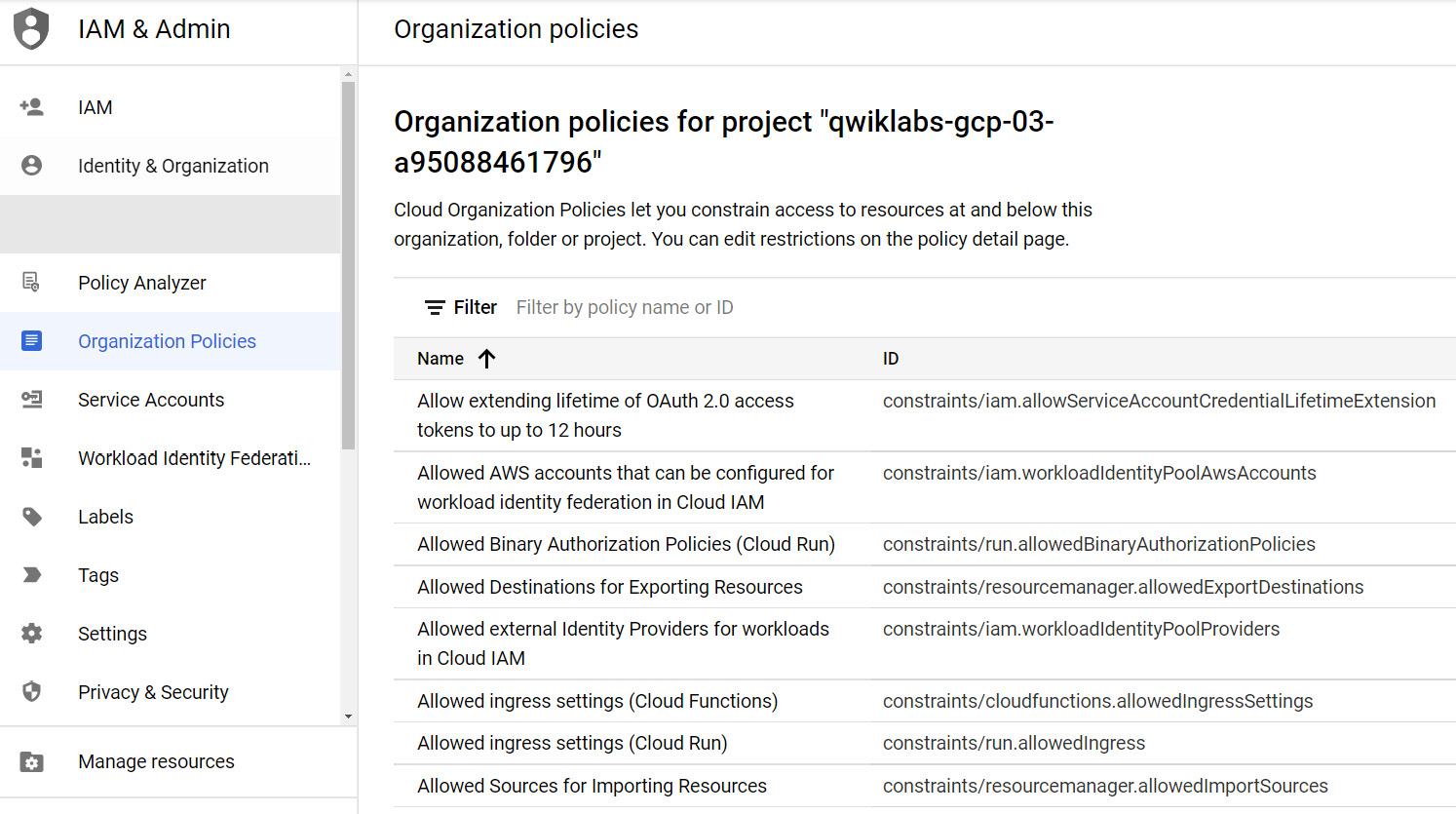

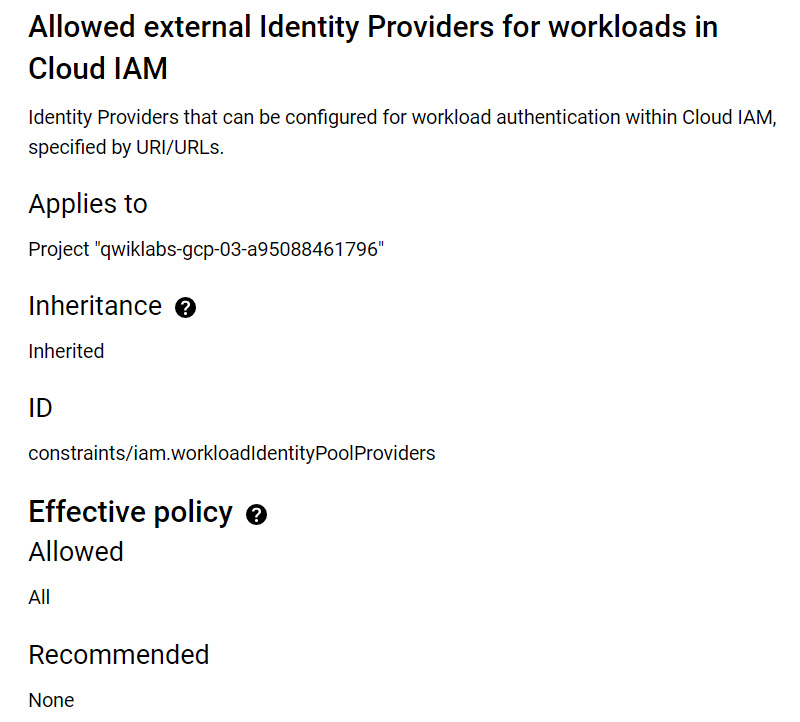

To create an Organization Policy, navigate to IAM & Admin | Organization Policies. This will list the available policies:

Figure 15.18 – Organization Policies

As an example, the following policy has been selected. Each policy describes the constraint and how it is currently applied. We can see that, by default, it would be inherited. We can edit the policy, if we are permitted to, and change the effective policy:

Figure 15.19 – Policy description

To reiterate, Organization Policies are important to understand for exam success. Please ensure that you understand how they differ from IAM.

Next, let's review firewall rules and load balancers.

Firewall rules and load balancers

We already covered networking in Networking Options in GCP, but we would like to recap what is important from a security standpoint.

If Compute Engine instances don't need to communicate with each other, we should host them on different Virtual Private Cloud (VPC) networks. Additionally, if an application is made up of servers in different tiers, each tier should be on a different subnet. Take a traditional web app and database application as an example: we want to place the web tier and the database tier on separate subnets.

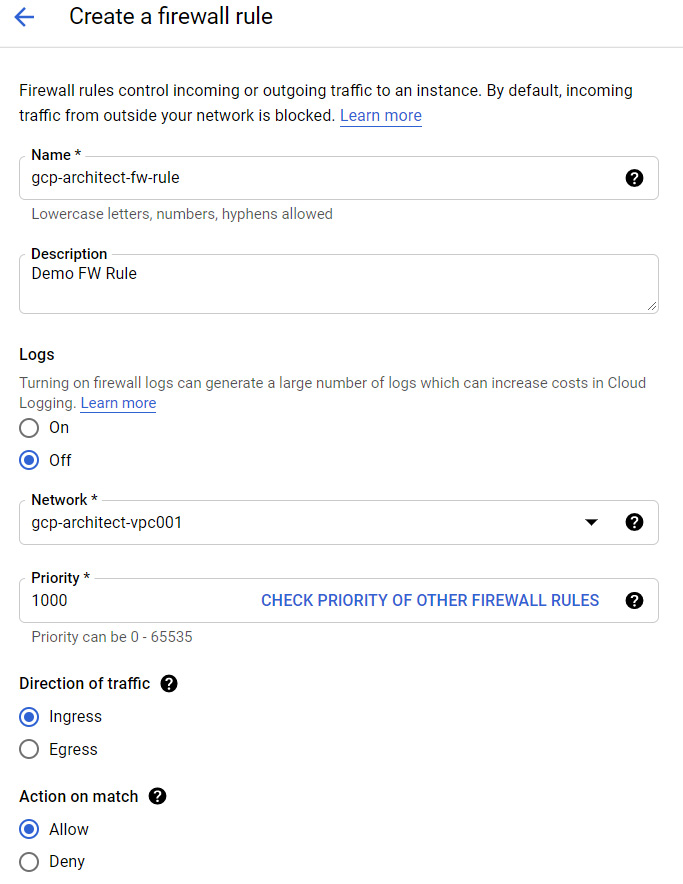

Firewall rules are the obvious choice for securing a network. As you now know, a VPC lets you isolate your network to allow for segmentation between computing resources. Firewall rules let you control the flow of inbound and outbound traffic by allowing or denying the traffic based on direction, source or destination, protocol, and priority. The following screenshot shows the creation of a new firewall rule:

Figure 15.20 – New firewall rule

It's important to note that firewall rules in GCP are stateful. If a connection is allowed in one direction, return traffic for that connection is automatically allowed. You should also understand that every VPC has two implied rules. The first allows all outgoing connections to any IP address, while the second blocks all incoming traffic. These rules have the lowest possible priority (65535), so your custom rules can easily override them.

In addition, the default network that is created with a new project comes with prepopulated rules. These allow ingress connections for all protocols and ports between instances in the network. They also allow ICMP (ping/traceroute), SSH, and RDP traffic from any source to any instance in the network.

As with IAM, we should apply the principle of least privilege to firewall rules: only allow the communication that our applications need and lock down everything else. It is good practice to create a low-priority rule that blocks all traffic and then layer the required allow rules on top of it with a higher priority. Remember that in GCP, a lower priority number means a higher priority, so a deny-all rule would use a number close to 65535 while the allow rules use smaller numbers.
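To make the priority and stateful behavior concrete, here is a minimal Python model of how rules might be evaluated. It is a simplified sketch, not GCP's implementation: it ignores targets (network tags and service accounts), port ranges, and connection timeouts, and all rule names are hypothetical. It encodes three behaviors: the lowest priority number wins, a deny rule beats an allow rule at the same priority, and replies to an allowed outbound connection are let back in.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class FirewallRule:
    name: str
    direction: str         # "INGRESS" or "EGRESS"
    action: str            # "ALLOW" or "DENY"
    priority: int          # 0-65535; a lower number means a higher priority
    ranges: tuple          # source ranges (ingress) or destination ranges (egress)
    protocol: str = "all"
    ports: tuple = ()      # an empty tuple means every port

    def matches(self, direction, remote_ip, protocol, port):
        return (
            direction == self.direction
            and any(ip_address(remote_ip) in ip_network(r) for r in self.ranges)
            and self.protocol in ("all", protocol)
            and (not self.ports or port in self.ports)
        )


# The two implied rules that every VPC network has, at the lowest priority.
IMPLIED_RULES = (
    FirewallRule("implied-allow-egress", "EGRESS", "ALLOW", 65535, ("0.0.0.0/0",)),
    FirewallRule("implied-deny-ingress", "INGRESS", "DENY", 65535, ("0.0.0.0/0",)),
)


class StatefulFirewall:
    def __init__(self, rules):
        self.rules = tuple(rules) + IMPLIED_RULES
        self.connections = set()  # outbound flows we allowed, so replies can return

    def evaluate(self, direction, remote_ip, protocol, port):
        matching = [r for r in self.rules if r.matches(direction, remote_ip, protocol, port)]
        # Lowest priority number first; at equal priority, DENY sorts before ALLOW.
        matching.sort(key=lambda r: (r.priority, r.action != "DENY"))
        return matching[0].action  # an implied rule always matches

    def outbound(self, remote_ip, protocol, port):
        action = self.evaluate("EGRESS", remote_ip, protocol, port)
        if action == "ALLOW":
            self.connections.add((remote_ip, protocol, port))
        return action

    def inbound(self, remote_ip, protocol, port):
        # Return traffic for a connection we allowed outbound is let back in
        # without consulting the ingress rules: this is the stateful behavior.
        if (remote_ip, protocol, port) in self.connections:
            return "ALLOW"
        return self.evaluate("INGRESS", remote_ip, protocol, port)


# Low-priority deny-all with a higher-priority allow for HTTPS layered on top.
fw = StatefulFirewall([
    FirewallRule("allow-https", "INGRESS", "ALLOW", 1000, ("0.0.0.0/0",), "tcp", (443,)),
    FirewallRule("deny-all-ingress", "INGRESS", "DENY", 65000, ("0.0.0.0/0",)),
])
print(fw.evaluate("INGRESS", "203.0.113.5", "tcp", 443))  # ALLOW
print(fw.evaluate("INGRESS", "203.0.113.5", "tcp", 22))   # DENY
```

Because `deny-all-ingress` sits just above the implied rules, anything not explicitly allowed is dropped even if someone later deletes an allow rule by mistake.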

Load balancers in GCP also offer additional security. Load balancers support SSL and HTTPS proxies for encryption in transit. At least one signed SSL certificate must be installed on the target HTTPS proxy of the load balancer, and you can use either self-managed SSL certificates or Google-managed SSL certificates. It may seem obvious, but for HTTPS traffic you should select the HTTP(S) load balancer, whereas for encrypted non-HTTP traffic you should use the SSL proxy load balancer.

In the next section, we will introduce Cloud Web Security Scanner.

Cloud Web Security Scanner

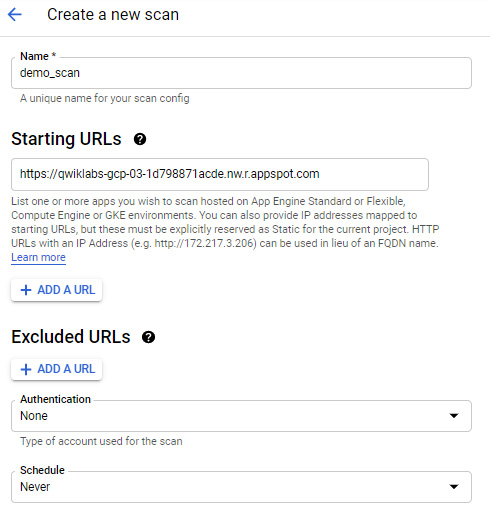

It's important to take application security as seriously as we take infrastructure security. Applications are one of the main targets of attacks, and GCP helps protect them with the Web Security Scanner service, which detects vulnerabilities in our applications.

When you create a scan, you specify the starting URLs of an application hosted on Compute Engine, App Engine, or GKE, and you can also exclude URLs. The scanner detects common vulnerabilities such as Flash injection, mixed content, cleartext passwords, and cross-site scripting (XSS). Scans can be run on a schedule or started manually.
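To illustrate one of these vulnerability classes, the following Python sketch flags mixed content: plain-HTTP subresources (scripts, images, frames, media) loaded by a page served over HTTPS. It is a conceptual illustration using only the standard library, not how Web Security Scanner works; the real scanner crawls and exercises the application rather than parsing a single HTML string.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Tag/attribute pairs that make the browser fetch a subresource.
SUBRESOURCE_ATTRS = {
    ("script", "src"), ("img", "src"), ("iframe", "src"),
    ("audio", "src"), ("video", "src"), ("source", "src"),
}


class MixedContentFinder(HTMLParser):
    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if (tag, name) in SUBRESOURCE_ATTRS and value and urlparse(value).scheme == "http":
                self.findings.append((tag, value))


def find_mixed_content(page_url, html):
    """Return (tag, url) pairs for plain-HTTP subresources on an HTTPS page."""
    if urlparse(page_url).scheme != "https":
        return []  # mixed content only applies to pages served over HTTPS
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.findings


page = ('<img src="http://cdn.example.com/a.png">'
        '<script src="https://cdn.example.com/app.js"></script>'
        '<a href="http://example.com/">link</a>')
print(find_mixed_content("https://shop.example.com/", page))
# [('img', 'http://cdn.example.com/a.png')]
```

Plain links (`<a href>`) are deliberately ignored, because navigating to an HTTP page is not mixed content; only resources loaded into the HTTPS page are.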

Important Note

Web Security Scanner generates real load against your application, so performance should be taken into consideration, especially as some scans can take hours to complete. Caution is also needed because the scanner submits your application's forms: it can, for example, post comments to a comments section or trigger many emails through a signup page. Therefore, it is good practice to scan your applications in a test environment and to back up your application's state before a scan is initiated.

The following screenshot shows us creating a new scan. To begin, we must navigate to App Engine | Security Scan. We must then click Enable API. We are now ready to create a new scan:

Figure 15.21 – New security scan

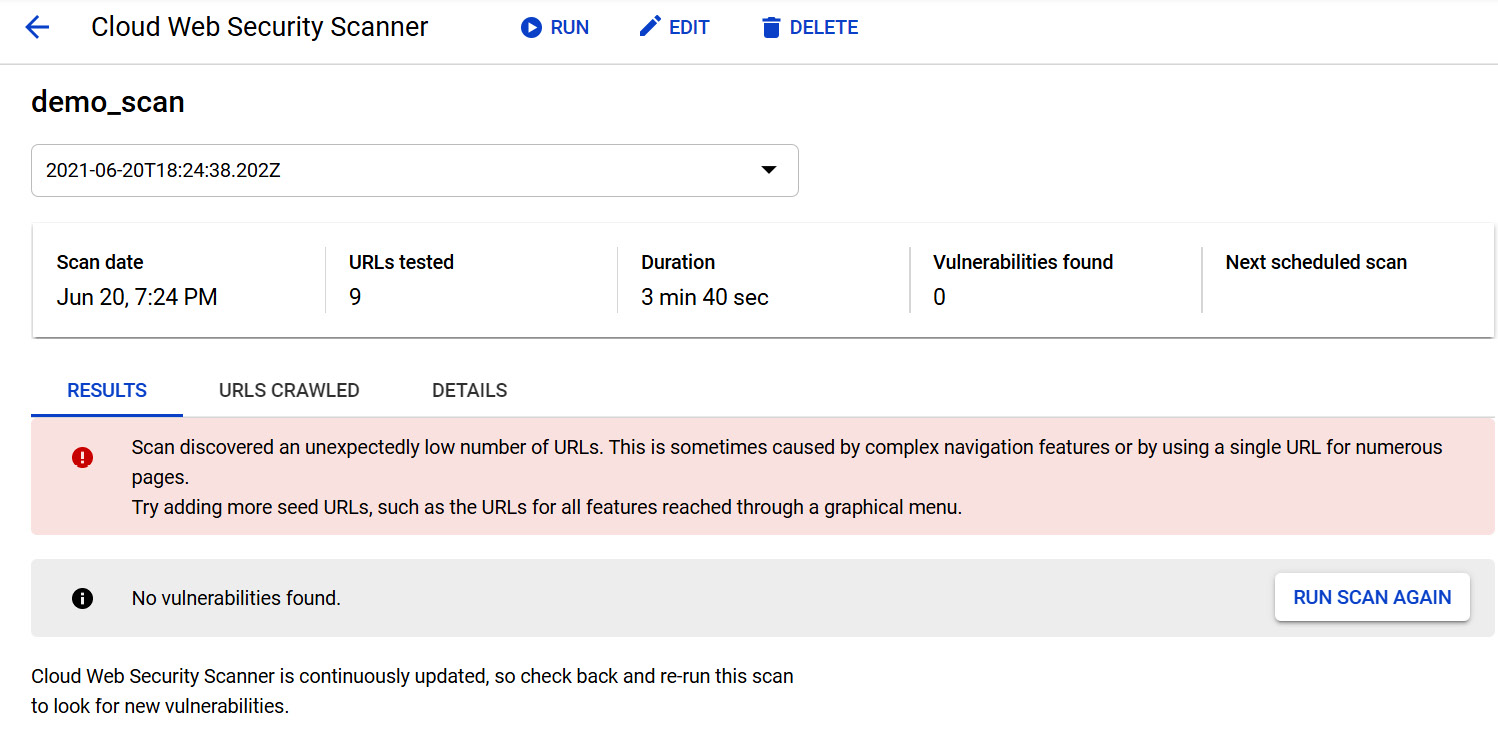

When we have run the scan, we will receive our results, which will flag any vulnerabilities, as shown in the following screenshot. We can also view the scan's date and the duration of the scan:

Figure 15.22 – Security scan results

In this section, we looked at Cloud Web Security Scanner and showed you how to set up a new scan. In the next section, we will touch on monitoring and logging.

Monitoring and logging

We will look at monitoring in a lot more detail in the Monitoring Your Infrastructure article, but it is worth mentioning here from a security perspective. Cloud Operations is Google's service for monitoring and managing services, containers, applications, and infrastructure. It offers error reporting, debugging, alerting, tracing, and logging.

Logging helps secure your GCP environment and minimize the downtime of your applications. Monitoring lets you track application metrics and alert on anomalies. Cloud Debugger lets you inspect the state of a running production application, such as variable values at a given line of your source code, without stopping it or noticeably affecting its performance. Cloud Logging ingests logs in real time and retains them for a set period that depends on the log type. If you have security requirements to keep logs for longer, they can be exported to Cloud Storage, which offers inexpensive storage for extended periods.
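As a simplified illustration of how a metric can flag an anomaly, the following Python sketch models a threshold alert condition: it fires only when a metric stays above a threshold for a whole duration window, which filters out brief spikes. The function name and sample data are hypothetical; in Cloud Monitoring, you would define such a condition in an alerting policy rather than write it yourself.

```python
def alert_times(samples, threshold, duration_s):
    """Return the timestamps at which an alert would fire.

    samples: (timestamp_in_seconds, value) pairs sorted by time.
    An alert fires once the value has stayed above `threshold` for at least
    `duration_s` seconds; it re-arms when the value drops to or below it.
    """
    alerts = []
    breach_start = None  # when the current run above the threshold began
    firing = False
    for ts, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = ts
            if not firing and ts - breach_start >= duration_s:
                alerts.append(ts)
                firing = True
        else:
            breach_start, firing = None, False
    return alerts


# CPU utilization (%) sampled every 60 seconds: one sustained spike, one brief one.
cpu = [(0, 10), (60, 95), (120, 96), (180, 97), (240, 20), (300, 99), (360, 30)]
print(alert_times(cpu, threshold=90, duration_s=120))  # [180]
```

The duration window is the design choice that matters: with `duration_s=0`, the brief spike at 300 seconds would also raise an alert.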

Encryption

Encryption is a basic form of security for sensitive data. In its simplest form, encryption is the process of turning plaintext data into a scrambled string of characters. We cannot read those strings and, more importantly, a system cannot read them if it doesn't hold the relevant key to decrypt it back to plaintext format.

Encryption is a key element of GCP security. By default, GCP offers encryption at rest, which means that data stored on GCP's storage services is encrypted without any further action from users. No additional configuration is needed, and even if this data did somehow get into the wrong hands, it would be unreadable without the proper encryption key.

The ability to encrypt sensitive data on GCP assures customers that confidential data stays confidential. At the core of this protection is Cloud Key Management Service (Cloud KMS), which lets you manage cryptographic keys for your cloud services. Cloud KMS allows you to generate, use, rotate, and destroy cryptographic keys, which can either be generated by Google or imported from your own key management system. Cloud KMS is integrated with Cloud IAM, so you can manage permissions on individual keys. When we create a new disk, for example, the default option is to use a Google-managed key.

Now, let's take a look at data encryption keys and key encryption keys.

Data encryption keys versus key encryption keys

The key that's used to encrypt a piece of data is known as a data encryption key (DEK). DEKs are then wrapped (encrypted) by a key encryption key (KEK). KEKs are stored and managed within Google Cloud's KMS, allowing Google to track and control access from a central point. It isn't possible to export your KEK from KMS, and all encryption and decryption of keys must take place within KMS. In addition, KMS-held keys are backed up for disaster recovery purposes. KEKs are also rotated periodically, meaning that a new key is created. This helps GCP comply with regulations such as the Payment Card Industry Data Security Standard (PCI DSS) and is considered a security best practice. By default, GCP rotates the keys every 90 days.
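The DEK/KEK pattern is usually called envelope encryption. The following Python sketch illustrates it with AES-256-GCM from the third-party `cryptography` package (an assumption: it is not part of the standard library). For illustration only, the KEK is a local byte string; in GCP, the KEK stays inside Cloud KMS and the wrapping and unwrapping happen there, so treat this as a model of the idea rather than of KMS itself.

```python
import os

from cryptography.hazmat.primitives.ciphers.aead import AESGCM


def encrypt_with_envelope(kek: bytes, plaintext: bytes) -> dict:
    dek = AESGCM.generate_key(bit_length=256)        # fresh DEK for this object
    data_nonce, wrap_nonce = os.urandom(12), os.urandom(12)
    return {
        "ciphertext": AESGCM(dek).encrypt(data_nonce, plaintext, None),
        "data_nonce": data_nonce,
        # The DEK is stored only in wrapped (encrypted) form, next to the data.
        "wrapped_dek": AESGCM(kek).encrypt(wrap_nonce, dek, None),
        "wrap_nonce": wrap_nonce,
    }


def decrypt_with_envelope(kek: bytes, blob: dict) -> bytes:
    # Unwrap the DEK with the KEK first, then use the DEK to decrypt the data.
    dek = AESGCM(kek).decrypt(blob["wrap_nonce"], blob["wrapped_dek"], None)
    return AESGCM(dek).decrypt(blob["data_nonce"], blob["ciphertext"], None)


kek = AESGCM.generate_key(bit_length=256)  # stands in for a KEK held in KMS
blob = encrypt_with_envelope(kek, b"cardholder data")
print(decrypt_with_envelope(kek, blob))  # b'cardholder data'
```

Because only the small DEK is wrapped with the KEK, rotating the KEK does not require re-encrypting the bulk data itself; at most, the DEKs need to be rewrapped.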

CMEKs versus CSEKs

GCP offers additional methods for managing encryption keys that might fit better with customer security policies.

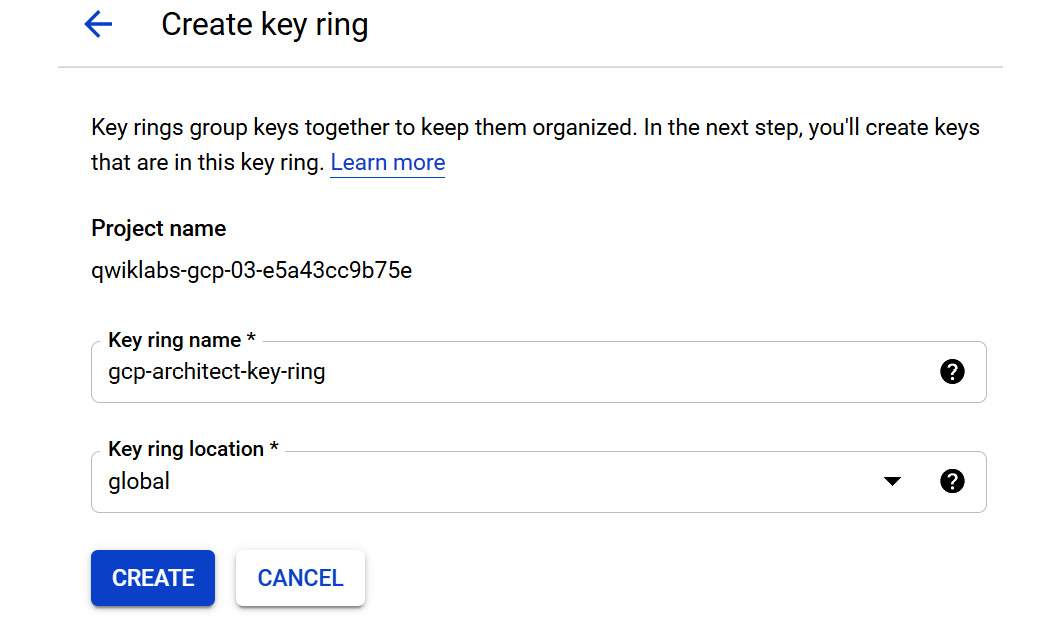

GCP offers the ability for the customer to manage KEKs, allowing us to control the generation of keys, the rotation of keys, and the expiration of keys. Keys will still be stored in KMS, but we will have control of their life cycle. This is known as CMEK. To organize keys effectively, Google Cloud KMS uses the concept of key rings to group keys together and push inherited permissions to keys.

We can create our key by performing several steps. Let's get started:

- By browsing to Security | Key Management in the GCP console, we can create a new key ring within Cloud KMS. Key rings group keys together. The first thing we must do is enable the API and then select a key ring location. We can select a region, or we can select global. Our decision will affect the performance of the applications using the key and the options available when creating the key. In the following example, we are selecting global. The global location is a special multi-region location whose data centers are spread throughout the world, and we cannot control which data centers are used. We should only use global if our applications are distributed globally, reads and writes are infrequent, and our keys have no geographic residency requirements:

Figure 15.23 – Create key ring

Once we have created the key ring, we will be prompted to create a key. We can create several keys under the key ring, and those keys will be responsible for encrypting and decrypting data. When creating a new key, we can also set the key's purpose; for example, whether it is used for symmetric encryption and decryption, asymmetric signing, or asymmetric decryption.

Tip

When deciding on which location to choose, network performance should be considered.

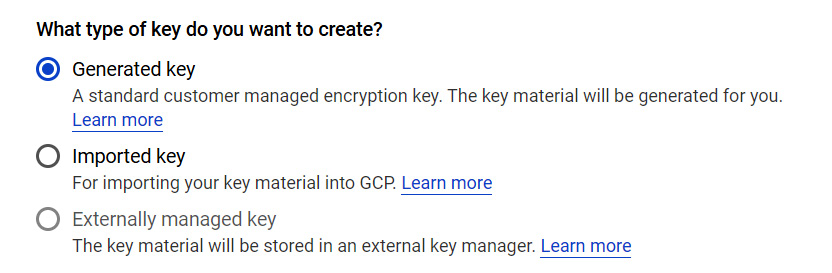

- The first selection we have to make is the type of key we want, from the options shown in the following screenshot. Because we selected a global key ring, we cannot select externally managed keys. The descriptions show that a generated key is a standard customer-managed encryption key:

Figure 15.24 – Selecting the type of key

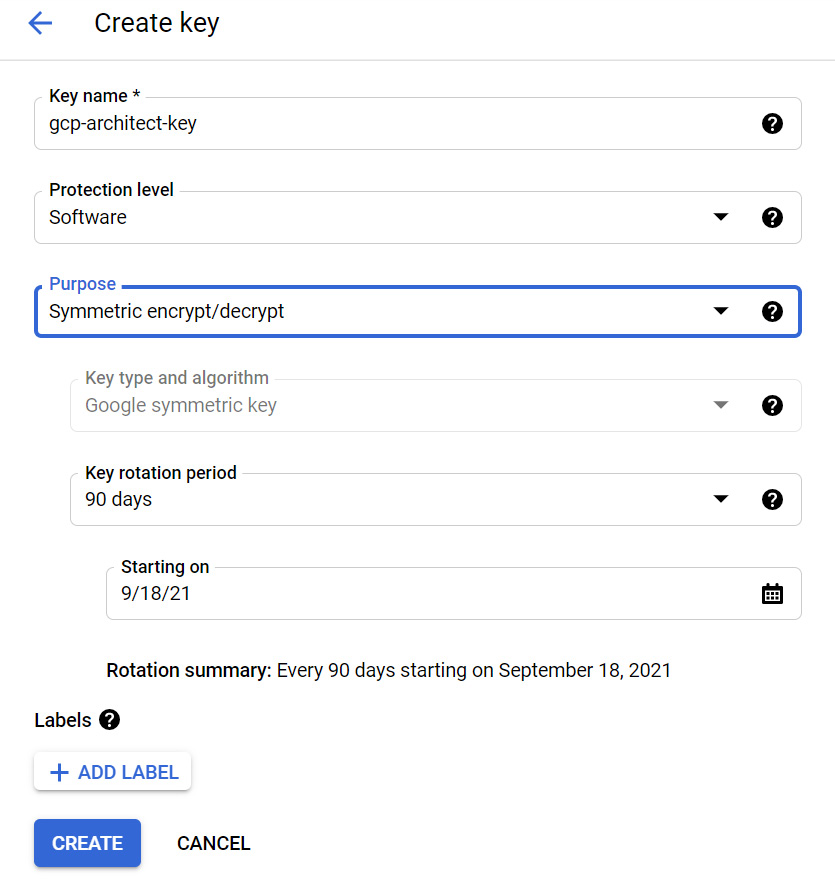

- We can also select the rotation period and the date on which rotation starts. Once the key has been created, we can change the rotation period, disable and re-enable the key, or destroy its key versions through the GCP console (a key itself cannot be deleted from Cloud KMS). The following screenshot shows us creating a new key:

Figure 15.25 – Create key

An important aspect of the KMS key structure is the key version. Each key can have many versions, which are numbered sequentially, starting with 1. We may have files encrypted with the same key but with different key versions. Cloud KMS will automatically identify which version was used for encryption and will use this to decrypt the file if the version is still enabled. We will not be able to decrypt the file if the version has been moved to a state of disabled, destroyed, or scheduled for destruction.
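The version bookkeeping described above can be modeled in a few lines of Python. This is a sketch only: `CryptoKeyModel` is a hypothetical class, not part of a Google library, and it performs no real cryptography. It tracks sequential version numbers and states named after Cloud KMS's key version states, and it answers two questions: which version new data would be encrypted with, and whether data recorded against a given version can still be decrypted.

```python
from enum import Enum


class VersionState(Enum):
    ENABLED = "ENABLED"
    DISABLED = "DISABLED"
    DESTROY_SCHEDULED = "DESTROY_SCHEDULED"
    DESTROYED = "DESTROYED"


class CryptoKeyModel:
    """Tracks key versions and their states; performs no real cryptography."""

    def __init__(self):
        self.versions = {}
        self.primary = None
        self.rotate()  # a new key starts with version 1

    def rotate(self):
        version = len(self.versions) + 1      # versions are numbered sequentially
        self.versions[version] = VersionState.ENABLED
        self.primary = version                # new data uses the newest version
        return version

    def set_state(self, version, state):
        self.versions[version] = state

    def version_for_encrypt(self):
        if self.versions[self.primary] is not VersionState.ENABLED:
            raise RuntimeError("the primary version is not enabled")
        return self.primary

    def can_decrypt(self, version):
        # Ciphertext records the version that produced it; only an ENABLED
        # version can decrypt it.
        return self.versions.get(version) is VersionState.ENABLED


key = CryptoKeyModel()
key.rotate()                                  # version 2 becomes primary
print(key.version_for_encrypt())              # 2
key.set_state(1, VersionState.DISABLED)
print(key.can_decrypt(1), key.can_decrypt(2)) # False True
```

Disabling version 1 leaves new encryption untouched but makes files encrypted with version 1 unreadable until the version is re-enabled.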

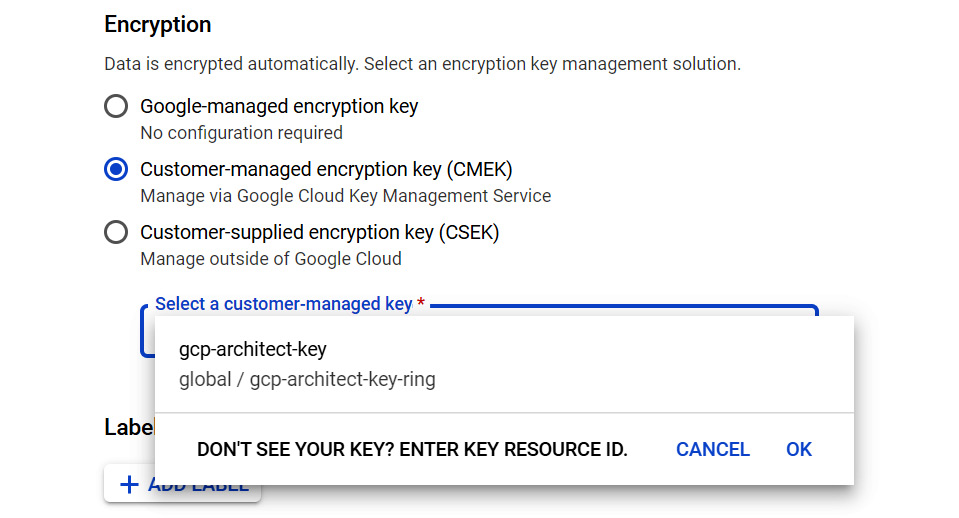

- Now that we have created our key, let's use it. If, for example, we want to create a new disk in GCP, we have several options under the encryption settings. To use our newly created key, we should select Customer-managed key and select our key from the drop-down menu when prompted, as shown in the following screenshot:

Figure 15.26 – Using Customer-managed encryption key (CMEK)

A second option is CSEK, where the key is not stored in KMS and Google doesn't manage the key. CSEK allows us to provide our own AES-256 key. If we supply this key, Cloud Storage doesn't permanently store it on Google's servers or manage it in any way. Once we have provided the key for a Cloud Storage operation, the key is purged from Google's memory. This means that the key must be provided each time storage resources are created or used. The customer has sole responsibility for the key, so if the key is lost, the data cannot be decrypted. Here, we would select the Customer-supplied encryption key (CSEK) option and input the relevant key.
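For Cloud Storage, a CSEK travels with each request as a base64-encoded AES-256 key together with a base64-encoded SHA-256 hash of that key, in the `x-goog-encryption-*` request headers. The following standard-library Python sketch generates a key and builds those headers. It is a sketch under that assumption: the client libraries can build these headers for you, and keeping the key safe is entirely up to you.

```python
import base64
import hashlib
import secrets


def generate_csek() -> bytes:
    """Generate a random 256-bit key. Store it safely: Google keeps no copy."""
    return secrets.token_bytes(32)


def csek_headers(key: bytes) -> dict:
    """Build the Cloud Storage request headers that carry a customer-supplied key."""
    if len(key) != 32:
        raise ValueError("a CSEK must be a 256-bit (32-byte) AES key")
    return {
        "x-goog-encryption-algorithm": "AES256",
        "x-goog-encryption-key": base64.b64encode(key).decode("ascii"),
        # The hash lets Google verify the key without ever storing the key itself.
        "x-goog-encryption-key-sha256": base64.b64encode(
            hashlib.sha256(key).digest()
        ).decode("ascii"),
    }


headers = csek_headers(generate_csek())
print(sorted(headers))
```

The same headers must accompany every later read of the object, which is exactly why losing the key means losing access to the data.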

Now, let's start looking at some industry regulations.

Industry regulations

It's also important to understand that security is more than just firewall rules or encryption. Google must adhere to global regulations and third-party certifications. The regulations and standards that GCP complies with are listed at https://cloud.google.com/security/compliance/#/. It is recommended that you review this page to familiarize yourself with the various standards. Some will be well known; for example, financial industry regulations such as PCI DSS, or ISO/IEC 27017, which addresses information security controls for cloud services, including the responsibilities of cloud providers and their customers.

In this section, we will look at PCI compliance.

PCI compliance

Many organizations handle financial transactions, and Google goes to great lengths to secure information residing on its servers. PCI DSS gives us a real-life example that ties together what we have learned so far. If customers need to set up a dedicated payment processing environment, Google can help them achieve this, and at the core of that architecture would be the services covered in this article. To secure the environment, we should use Resource Manager to create separate projects that segregate the payment processing (PCI) workloads from the rest of the application, for example, a gaming platform. We can utilize Cloud IAM and apply permissions to those separate projects. Remember the principle of least privilege! We can also secure the environment with firewall rules that restrict inbound traffic. We want the public to be able to use our payment page, so HTTPS traffic should be served through an HTTP(S) load balancer, and any additional payment processing applications may need bi-directional access to third parties. Take a look at https://cloud.google.com/solutions/pci-dss-compliance-in-gcp for more in-depth knowledge of how GCP handles PCI DSS requirements.

In the next section, we'll review data loss prevention.

Data loss prevention (DLP)

Removing Personally Identifiable Information (PII) is also a concern within the industry. Cloud DLP provides us with a powerful data inspection and de-identification platform. DLP uses information types, also known as infoTypes, to define what is scanned for. infoTypes are types of sensitive data, such as names, email addresses, credit card numbers, or identification numbers. For example, Cloud DLP can automatically redact such sensitive data stored in GCP storage repositories.

We should also note that compliance with the European Union General Data Protection Regulation (GDPR) is one of Google's top priorities from a security standpoint, and that Cloud DLP's built-in infoType detectors can help with GDPR compliance.
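To make infoTypes more concrete, the following Python sketch imitates the idea with two detectors, one for email addresses and one for credit card numbers (validated with the Luhn checksum to cut false positives), and replaces each match with its infoType name. It is a toy illustration under our own assumptions, not how Cloud DLP works internally; only the infoType names `EMAIL_ADDRESS` and `CREDIT_CARD_NUMBER` are borrowed from Cloud DLP's built-in detectors.

```python
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, which valid payment card numbers satisfy."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d = d * 2 - 9 if d > 4 else d * 2
        total += d
    return total % 10 == 0


def redact(text: str) -> str:
    """Replace detected values with the name of their infoType."""
    text = EMAIL_RE.sub("[EMAIL_ADDRESS]", text)

    def replace_card(match):
        digits = re.sub(r"\D", "", match.group())
        return "[CREDIT_CARD_NUMBER]" if luhn_valid(digits) else match.group()

    return CARD_RE.sub(replace_card, text)


print(redact("Contact jane@example.com, card 4111 1111 1111 1111."))
# Contact [EMAIL_ADDRESS], card [CREDIT_CARD_NUMBER].
```

The Luhn check is why `1234 5678 9012 3456` would be left alone: it has the shape of a card number but fails the checksum.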

Penetration testing in GCP

It's worth noting that if you need to perform penetration testing on your GCP infrastructure, you don't need permission from Google, but you must abide by the Acceptable Use Policy and ensure that tests only target your own projects. Interestingly, Google also runs an incentive program, the Vulnerability Reward Program, for reporting bugs or vulnerabilities, with rewards ranging from $100 to $31,337!

CI/CD security overview

As DevOps becomes increasingly popular, it is important to consider security when we build our pipelines, images, and delivery models. There are some best practices offered by Google Cloud when building your CI/CD pipeline. However, they, like many best practices, are subjective and may not apply to your architecture. Nonetheless, it is important to think about things such as the following:

- Branching model: It's important to consider how changes to your code will affect your production environment, so feature branches may be required to test changes before they are merged into the production code base.

- Container image vulnerabilities: When creating an image to be used in your pipeline, it is also important to scan it for vulnerabilities. Images can be scanned automatically when they are uploaded to Artifact Registry or Container Registry, and Cloud Build can also scan an image as soon as it has been built and block its upload to Artifact Registry if vulnerabilities are found. This is known as on-demand scanning.

- Filesystem security: Even in containers, it is still important to prevent potential attackers from installing their tools. We should avoid running as root inside our containers and launch containers with a read-only root filesystem.

- Container size: The size of our containers should be considered not only from a performance standpoint but also from a security standpoint. A smaller container has a smaller attack surface. Many larger containers show vulnerabilities in programs that are installed but have nothing to do with the application.
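The block-on-scan step from the list above can be sketched as a small Python gate that a Cloud Build step could run after the scan. In Cloud Build, a step that exits with a non-zero status fails the build, so a later push step never runs. The findings format here (dictionaries with `id` and `severity` keys) is an assumption; in practice, you would first parse it from the on-demand scanning results. The severity names follow those used by Google's vulnerability scanning, and the finding IDs are placeholders.

```python
import sys

SEVERITY_RANK = {"MINIMAL": 1, "LOW": 2, "MEDIUM": 3, "HIGH": 4, "CRITICAL": 5}


def blocking_findings(findings, block_at="CRITICAL"):
    """Return findings at or above the blocking severity.

    Unknown or unspecified severities rank 0, so they never block on their own.
    """
    threshold = SEVERITY_RANK[block_at]
    return [f for f in findings if SEVERITY_RANK.get(f.get("severity"), 0) >= threshold]


def gate(findings, block_at="CRITICAL"):
    """Return a process exit code: non-zero fails the build step."""
    blocked = blocking_findings(findings, block_at)
    for f in blocked:
        print(f"blocking: {f['id']} ({f['severity']})", file=sys.stderr)
    return 1 if blocked else 0


if __name__ == "__main__":
    sample = [{"id": "finding-1", "severity": "LOW"},
              {"id": "finding-2", "severity": "CRITICAL"}]
    sys.exit(gate(sample))  # exits with 1 because of finding-2
```

Making `block_at` a parameter lets a team start by blocking only CRITICAL findings and tighten the gate to HIGH once the backlog is under control.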

Now, let's look at Identity-Aware Proxy.

Identity-Aware Proxy (IAP)

Google offers additional access control to your Compute Engine instances or applications running on GCP via Cloud IAP. IAP verifies the user's identity over HTTPS and grants access only if the user is permitted. This service is especially useful for remote workers because it removes the need for a company VPN to authenticate user requests from on-premises networks. Instead, access is via an internet-accessible URL. When remote users need to access an application, the request is forwarded to Cloud IAP, and access is granted if permitted. Additionally, without the overhead of a traditional VPN, manageability is simplified for the administrator.

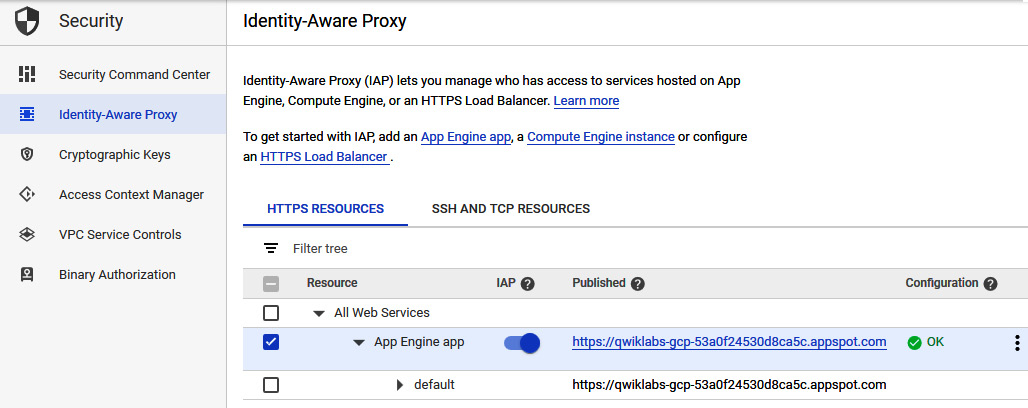

Enabling Cloud IAP is a simple process, but some prerequisites must be met. You will need to configure firewall rules that block direct access to the VMs hosting your application and only allow access through IAP. Next, navigate to Security | Identity-Aware Proxy in the GCP console, where you will find a list of your application or Compute Engine resources. Then click the toggle next to a resource to enable IAP.

Important Note

You may be asked for OAuth consent first.

Finally, you can add the relevant members to the IAP access list from the information panel that becomes available, granting them the IAP-secured Web App User role. The following screenshot shows IAP enabled for an HTTPS resource:

Figure 15.27 – Identity-Aware Proxy

Now that we have provided an overview of IAP, let's look at some important features.

TCP forwarding

IAP has a TCP forwarding feature that allows us to use Secure Shell (SSH) or Remote Desktop Protocol (RDP) to connect to our backends from the public internet without exposing these services openly to the internet. Connections must pass authentication and authorization checks before they reach their intended resources, which reduces the risk of connecting to these services from the internet.

IAP's forwarding feature also allows us to connect to arbitrary TCP ports on our Compute Engine instances. The client (for example, the gcloud CLI) creates a listening port on localhost that forwards all traffic to our instance. IAP wraps all traffic from the client in HTTPS and allows access to the interface and port only if we successfully pass authentication. This access check is based on the configured IAM permissions.
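When VMs are reached only through IAP TCP forwarding, the usual pattern is an ingress firewall rule that admits SSH (22) and RDP (3389) solely from IAP's forwarding range, 35.235.240.0/20. The sketch below models that check with Python's `ipaddress` module; it illustrates the rule's logic rather than creating the rule, and the helper name is hypothetical.

```python
from ipaddress import ip_address, ip_network

# Source range that IAP TCP forwarding connects from.
IAP_TCP_FORWARDING_RANGE = ip_network("35.235.240.0/20")


def allowed_via_iap(source_ip, port, ports=(22, 3389)):
    """Model of an ingress rule that admits SSH/RDP only when it arrives via IAP."""
    return port in ports and ip_address(source_ip) in IAP_TCP_FORWARDING_RANGE


print(allowed_via_iap("35.235.240.10", 22))  # True: tunneled through IAP
print(allowed_via_iap("203.0.113.9", 22))    # False: direct SSH from the internet
```

Combined with the absence of any rule allowing 0.0.0.0/0 on these ports, this means SSH and RDP can only be reached after IAP has authenticated and authorized the user.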

There are some limitations that we should be aware of. Port forwarding is not intended for bulk transfer of data, so IAP reserves the right to apply rate limits to prevent misuse of the service. Additionally, after 1 hour of inactivity, IAP will automatically disconnect our session, so applications using this should have logic to handle re-establishing a tunnel after being disconnected.
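The reconnect logic mentioned above could look like the following Python sketch, which retries with exponential backoff. `open_tunnel` and `use_tunnel` are hypothetical callables you would supply (for example, wrappers around starting an IAP tunnel and talking through it), and the sketch assumes that a dropped tunnel surfaces as a `ConnectionError`.

```python
import time


def run_with_reconnect(open_tunnel, use_tunnel, max_attempts=5, base_delay=1.0,
                       sleep=time.sleep):
    """Reopen the tunnel when it drops, backing off exponentially between attempts.

    open_tunnel() returns a connection; use_tunnel(conn) does the work and
    raises ConnectionError if the tunnel is closed (e.g. by the idle timeout).
    """
    for attempt in range(max_attempts):
        try:
            return use_tunnel(open_tunnel())
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # give up after the last attempt
            sleep(base_delay * 2 ** attempt)  # 1 s, 2 s, 4 s, ...
```

The `sleep` parameter is injectable so the backoff can be tested without actually waiting, and capping `max_attempts` keeps a persistent outage from looping forever.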

Access Context Manager

Access Context Manager offers Google Cloud Organization Administrators the ability to define fine-grained, attribute-based access control for projects and resources in GCP. Access Context Manager grants access based on the context of the request, such as the user's identity, the device type, or the IP address. It adds another layer of protection on top of the traditional perimeter security model, whereby anything inside a network is considered trusted. Access Context Manager takes into consideration the mobile workforce and Bring Your Own Device (BYOD), which adds additional attack vectors that are not considered by a perimeter security model.
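Attribute-based access can be pictured as conditions evaluated against the context of each request. The following Python sketch loosely mirrors the shape of an Access Context Manager basic access level: each condition checks attributes (IP subnetworks, regions, a corporate-owned device), all set attributes in a condition must hold, and the conditions are combined with AND or OR. The class names and the request dictionary are hypothetical; real access levels are defined in Access Context Manager, not in code.

```python
from dataclasses import dataclass, field
from ipaddress import ip_address, ip_network


@dataclass
class Condition:
    # Every attribute that is set must be satisfied (attributes are ANDed).
    ip_subnetworks: list = field(default_factory=list)
    regions: list = field(default_factory=list)       # ISO 3166-1 alpha-2 codes
    require_corp_owned_device: bool = False

    def satisfied_by(self, request):
        if self.ip_subnetworks and not any(
            ip_address(request["ip"]) in ip_network(n) for n in self.ip_subnetworks
        ):
            return False
        if self.regions and request["region"] not in self.regions:
            return False
        if self.require_corp_owned_device and not request["corp_owned_device"]:
            return False
        return True


@dataclass
class AccessLevel:
    conditions: list
    combining_function: str = "AND"  # "AND": all conditions; "OR": any condition

    def grants(self, request):
        results = [c.satisfied_by(request) for c in self.conditions]
        return all(results) if self.combining_function == "AND" else any(results)


office = Condition(ip_subnetworks=["198.51.100.0/24"])
managed_eu = Condition(regions=["DE", "FR"], require_corp_owned_device=True)
level = AccessLevel([office, managed_eu], combining_function="OR")
print(level.grants({"ip": "203.0.113.4", "region": "DE", "corp_owned_device": True}))  # True
```

This captures the point made above: a user on a trusted device in an allowed region can get in from outside the office network, while the same user on a personal device cannot.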

Chronicle

One of the most recent introductions to Google's security suite is Chronicle. Although it is doubtful that we will be quizzed on this in the exam, we felt it was worthwhile to say a few words on this.

Chronicle is a threat detection solution that helps enterprises identify threats at speed and scale. It can search the massive amounts of security and network telemetry data that enterprises generate and provide instant analysis and context on an activity.

Summary

Security deserves a far deeper dive than we can give it here, but for the exam, you must understand that security is key to all of Google's services and was not an afterthought. So far, we have covered several services that are offered to make sure that your GCP infrastructure is secure. We introduced Cloud Identity, covered the IAM model, and looked at encryption.

In the next section, we will look at additional security services.

Additional security services

GCP offers several advanced services to help you secure your infrastructure and resources. In this section, we will take a short look at other key services.

Security Command Center (SCC)

SCC gives enterprises an overarching view of their cloud data across several GCP services. The real benefit of SCC is that it helps gather data and identify threats, so that we can act on them before any business impact occurs. It also provides a dashboard that reflects the overall health of our resources. It can integrate with other GCP tools, such as Web Security Scanner, and with third parties, such as Palo Alto Networks. In SCC, possible security threats are called findings. To view these findings, you must have an IAM role with the relevant permissions, such as Security Center Findings Viewer, and then browse to the Findings tab of SCC. Like all GCP services, SCC also offers an API, which allows us to list findings programmatically. SCC also lets us use security marks, which allow us to annotate assets or findings and then search or filter by these marks.
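As a rough illustration of how security marks help, the following Python sketch filters a list of findings by state and by mark values. The finding dictionaries, the `team` mark, and the helper name are hypothetical; with the real SCC API, you would express the same selection as a filter on the list-findings request instead of filtering locally.

```python
def filter_findings(findings, state="ACTIVE", **marks):
    """Return findings in `state` whose security marks include every key=value given."""
    return [
        f for f in findings
        if f["state"] == state
        and all(f.get("security_marks", {}).get(k) == v for k, v in marks.items())
    ]


findings = [
    {"name": "f1", "category": "OPEN_FIREWALL", "state": "ACTIVE",
     "security_marks": {"team": "payments"}},
    {"name": "f2", "category": "OPEN_FIREWALL", "state": "INACTIVE",
     "security_marks": {"team": "payments"}},
    {"name": "f3", "category": "PUBLIC_BUCKET_ACL", "state": "ACTIVE",
     "security_marks": {}},
]
print([f["name"] for f in filter_findings(findings, team="payments")])  # ['f1']
```

Marking findings by owning team, as here, is one way to route each finding to the people who can fix it.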

To use SCC, you must view it from your GCP organization. Additionally, you must be an organization administrator and have the Security Center Admin role for the current organization. This allows you to include all current and future projects (if you wish). Alternatively, we can choose to include or exclude individual projects.

Security Command Center events can be exported to Chronicle, which is purpose-built for security threat detection and investigation. It is built on Google infrastructure, which lets us take advantage of Google's scale to reduce investigation time.

Forseti

Forseti is an open source security tool that assists in securing your GCP environment. It is useful if you wish to monitor resources to ensure that access control is the same as you intended it to be. It can also be used to create an alert when anything changes, via email or a post on a Slack channel. Additionally, it lets you take snapshots of resources so that you always know what your cloud looks like. It can also enforce rules on sensitive GCP resources by comparing a policy with the current state and correcting any violations using GCP APIs.
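The core of this kind of policy enforcement is a comparison between a declared policy and the live state. The following Python sketch shows that comparison for IAM bindings, represented as a hypothetical mapping from role to a set of members; it is not Forseti's code, and the member names are illustrative. A tool like Forseti would then alert on, or remove, the unexpected grants.

```python
def find_violations(desired, current):
    """Compare IAM bindings (role -> set of members).

    Returns (unexpected, missing): grants present but not in the policy, and
    grants the policy expects but that are absent.
    """
    unexpected, missing = {}, {}
    for role in desired.keys() | current.keys():
        extra = current.get(role, set()) - desired.get(role, set())
        absent = desired.get(role, set()) - current.get(role, set())
        if extra:
            unexpected[role] = extra
        if absent:
            missing[role] = absent
    return unexpected, missing


desired = {"roles/viewer": {"group:auditors@example.com"}}
current = {
    "roles/viewer": {"group:auditors@example.com"},
    "roles/owner": {"user:mallory@example.com"},  # granted outside the policy
}
print(find_violations(desired, current))
# ({'roles/owner': {'user:mallory@example.com'}}, {})
```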

Cloud Armor

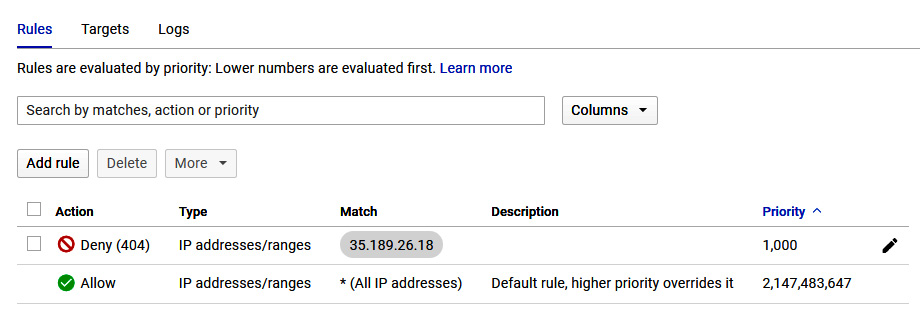

Cloud Armor uses Google's global infrastructure to provide defense at scale against DDoS attacks. It lets you allow or deny access to your HTTP(S) load balancer using allowlists and denylists. Based on rule sets, it can prevent malicious users or traffic from reaching your resources or, worse, taking control of your VPC. The following screenshot shows a policy that has been set to deny a specific IP and return a 404 error:
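Conceptually, a Cloud Armor security policy is an ordered list of rules: they are evaluated from the lowest priority number upward, the first match decides the action, and a default rule with priority 2147483647 matches everything else. The Python sketch below models a policy like the one in the screenshot, assuming a hypothetical blocked address (192.0.2.15); it covers only IP-based matching, not Cloud Armor's advanced rule expressions.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

DEFAULT_RULE_PRIORITY = 2147483647  # the default rule always has the lowest priority


@dataclass(frozen=True)
class ArmorRule:
    priority: int
    src_ip_ranges: tuple  # CIDR ranges, or "*" to match any source
    action: str           # e.g. "allow", "deny(403)", "deny(404)", "deny(502)"


def evaluate(rules, client_ip):
    """Return the action of the highest-priority (lowest-numbered) matching rule."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if any(rng == "*" or ip_address(client_ip) in ip_network(rng)
               for rng in rule.src_ip_ranges):
            return rule.action
    raise ValueError("a security policy always has a matching default rule")


policy = [
    ArmorRule(1000, ("192.0.2.15/32",), "deny(404)"),
    ArmorRule(DEFAULT_RULE_PRIORITY, ("*",), "allow"),
]
print(evaluate(policy, "192.0.2.15"))    # deny(404)
print(evaluate(policy, "198.51.100.1"))  # allow
```

Flipping the default rule's action to a deny turns the same structure into an allowlist, where only explicitly permitted ranges get through.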

Figure 15.28 – Cloud Armor

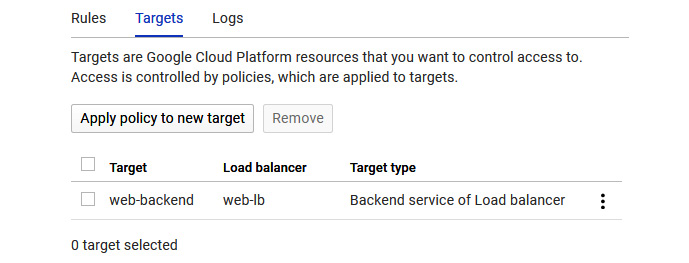

By clicking on the Targets tab, we can see that the policy is targeting a backend load balancer, as shown in the following screenshot:

Figure 15.29 – Cloud Armor – Targets

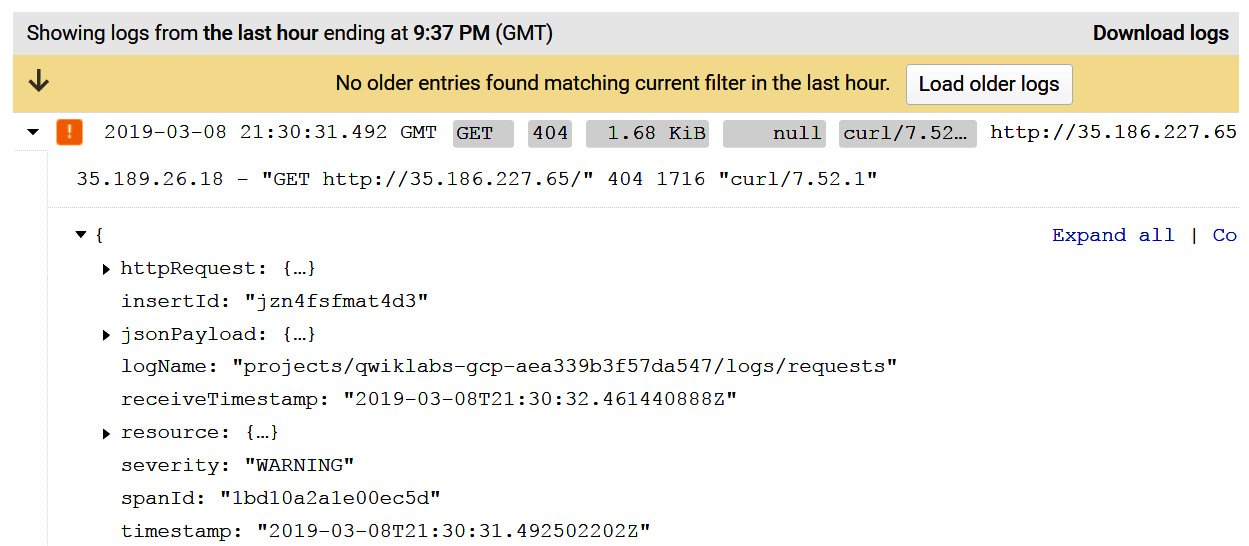

Cloud Armor logs allow us to see any access attempts and which source requested access. These logs are provided through Cloud Operations (formerly Stackdriver), which was described in the Monitoring and logging section of this article. This will be covered in more detail in the Monitoring Your Infrastructure article.

The following screenshot shows some example output from the Cloud Armor logs:

Figure 15.30 – Cloud Armor logs

Secret Manager

Secret Manager is a convenient and secure system for storing certificates, passwords, API keys, and other sensitive data. Storing this data in a central location provides a single source of truth for managing, accessing, and auditing secrets across Google Cloud. Secret Manager also allows access policies to be set for each secret, and audit logs can be configured to record each access to a secret. This service is an alternative to third-party secret management systems such as HashiCorp Vault.

In this section, we looked at some of the other security services offered by GCP. Not all of them will be questioned in the exam, but it is good to have a high-level understanding of what each service offers.

Summary

Security deserves a far deeper dive than we can give it here, but for the exam, we must understand that security is key to all of Google's services and was not an afterthought. In this article, we looked at many services that GCP offers to make sure our solution is secure. Among other things, we introduced Cloud Identity, covered the IAM model, and looked at encryption. In the next post, we will look at Google Cloud Management Options.

Exam Tip

Remember that we should never grant a user or service more permissions than what is required. Always use the principle of least privilege.

Further reading

Read the following articles for more information on what was covered in this article:

- Cloud Identity: https://cloud.google.com/identity/docs/

- IAM: https://cloud.google.com/iam/docs/

- Encryption: https://cloud.google.com/storage/docs/encryption/

- Cloud IAP: https://cloud.google.com/iap/docs/

- Security Command Center: https://cloud.google.com/security-command-center/docs/

- Cloud Armor: https://cloud.google.com/armor/docs/

- Google Cloud Security Foundations Guide: https://services.google.com/fh/files/misc/google-cloud-security-foundations-guide.pdf

- Best Practices Guides: https://cloud.google.com/security/best-practices#section-1