In this article, we will finally talk about a fully serverless compute option: Cloud Functions. This means no more servers and no more containers. The service still uses them in the backend, but they aren't visible to the end user. All we need to care about now is the code. Cloud Functions is a Function-as-a-Service (FaaS) offering: you write a function in one of the languages supported by GCP, and it is triggered by an event or an HTTP request. GCP takes care of provisioning and scaling the resources that are needed to run your functions.

Important Note

How does Cloud Functions work in the backend? Again, you don't need to worry about GCP's backend infrastructure, which runs the functions for you. However, being an engineer, I bet you will still search for answers on your own. Cloud Functions uses containers to set up an isolated environment for your function. These are called Cloud Functions instances. If multiple functions are executed in parallel, multiple instances are created.

Exam Tip

Expect Cloud Functions questions to appear in the Cloud Architect exam. You will need to understand what Cloud Functions is and what the most common use cases are. Being able to tell the difference between the two types of functions, namely HTTP and background functions, is also important. Knowing when you would use Cloud Functions rather than other compute options, as well as remembering which programming languages are supported, is crucial. Finally, be sure that you can deploy a function both from the Google Cloud Console and the command line.

In this article, we will cover the following topics:

- Main Cloud Functions characteristics

- Use cases

- Runtime environments

- Types of Cloud Functions

- Events and triggers

- Other considerations

- Deploying Cloud Functions

- IAM

- Quotas and limits

- Pricing

Main Cloud Functions characteristics

The following are the key Cloud Functions characteristics:

- Serverless: Cloud Functions are completely serverless. The underlying infrastructure is abstracted from the end user.

- Event-driven: Cloud Functions are event-driven. They are triggered in response to an event or an HTTP request. This means that they are only invoked when needed and do not incur any cost when inactive.

- Stateless: Cloud Functions do not store state or data between invocations. This allows them to work independently and scale as needed. It is very important to understand that each invocation may run in a different execution environment, so you cannot rely on global variables, memory, or the filesystem being shared between invocations. To share state across function invocations, your function should use a service such as Cloud Datastore or Cloud Storage.

- Autoscaling: Cloud Functions scale from zero to the desired scale. Scaling is managed by GCP without any end user intervention. Autoscaling limits can be set to control the cost of execution. This is important as failures in the design might cause large spikes, resulting in your bill reaching the clouds.
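To make the stateless point concrete, here is a minimal sketch of a function that keeps its counter in an external store rather than in its own memory. The `InMemoryStore` class and the `count_visit` name are hypothetical; the class is only a local stand-in for a real service such as Cloud Datastore, so that the example runs anywhere.

```python
class InMemoryStore:
    """Hypothetical stand-in for an external store such as Cloud Datastore."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=0):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def count_visit(store, key="visits"):
    """Increment a counter that lives in the external store, not in the function."""
    total = store.get(key) + 1
    store.put(key, total)
    return total


if __name__ == "__main__":
    shared = InMemoryStore()
    # Two calls that could have landed on two different instances still agree,
    # because the only state they touch lives in the shared store.
    print(count_visit(shared))
    print(count_visit(shared))
```

Note that this read-then-write is not atomic. With a real store and parallel instances, you would likely want a transaction or an atomic increment instead.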

Now that we are aware of the main characteristics, let's look at some use cases.

Use cases

Now that we have a basic understanding of Cloud Functions, let's have a look at numerous use cases. Remember that, in each of these use cases, you can still use other compute options. However, it is a matter of delivering the solution quickly, taking advantage of in-built autoscaling, and paying only for what we have used.

Application backends

Instead of using virtual machines for backend computing, you can simply use functions. Let's have a look at some example backends:

- IoT backends: In the IoT world, there's a large number of devices that send data to the backend. Cloud Functions allows you to process this data and automatically scales the processing when needed. This happens without any human intervention.

- Mobile backends: Cloud Functions can process data that's delivered by your mobile applications. Functions can interact with other GCP services to make use of big data, machine learning capabilities, and so on. There is no need to use any virtual machines and you can go completely serverless.

- Third-party API integrations: You can use functions to integrate with any third-party system that provides an API. This will allow you to extend your application with additional features that are delivered by other providers.

Next, let's have a look at some data processing examples.

Real-time data processing systems

When it comes to event-driven data processing, Cloud Functions can be triggered whenever a predefined event occurs. When this happens, it can preprocess the data that's passed for analysis with GCP big data services:

- Real-time stream processing: When messages arrive in Pub/Sub, Cloud Functions can be triggered to analyze or enrich the messages to prepare them for further data processing steps in the pipeline.

- Real-time file processing: When files are uploaded to your Cloud Storage bucket, they can be immediately processed. For example, thumbnails can be created, or the files can be analyzed using GCP AI APIs.

Next, let's look at examples of smart applications.

Smart applications

Smart applications allow users to perform various tasks in a smarter way by using a data-driven experience. Some of these are as follows:

- Chatbots and virtual assistants: You can connect your text or voice platforms to Cloud Functions to integrate them with other GCP services, such as Dialogflow (see This Post, Putting Machine Learning to Work), to give the user a natural conversation experience. The conversation logic can be defined in Dialogflow without any programming skills. Integration with third-party applications can be created to provide services such as weather information or the purchase of goods.

- Video and image analysis: You can use Cloud Functions to interact with the various GCP AI building blocks, such as video and image AIs. When a user uploads an image or video to your application, Cloud Functions can immediately trigger the related API and return the analysis. It may even perform actions, depending on the results of that analysis.

- Lightweight APIs and webhooks: Since Cloud Functions can be triggered using HTTP, you can expose your application to the external world for programmatic consumption. You don't need to build your API from scratch.

Now, let's cover some runtime environment examples.

Runtime environments

Cloud Functions are executed in a fully managed environment. The infrastructure and software that's needed to run the function are handled for you. Each function is single-threaded and is run in an isolated environment with the intended context. You don't need to take care of any updates for that environment. They are auto-updated for you and scaled as needed.

Currently, several runtimes are supported by Cloud Functions, namely the following:

- Node.js 10, 12, and 14

- Python 3.7, 3.8, and 3.9

- Go 1.11 and 1.13

- Java 11

- .NET Core 3.1

- Ruby 2.6 and 2.7

- PHP 7.4

When you define a function, you can also define the requirements or dependencies file in which you state which modules or libraries your function is dependent on. However, remember that those libraries will be loaded when your function is executed. This causes delays in terms of execution. We will talk about this in more detail in the Cold start section of this post.

In the next section, we will look at types of Cloud Functions.

Types of Cloud Functions

There are two types of Cloud Functions: HTTP functions and background functions. They differ in the way they are triggered. Let's have a look at each.

HTTP functions

HTTP functions are invoked by HTTP(S) requests. The POST, PUT, GET, DELETE, and OPTIONS HTTP methods are accepted. Arguments can be provided to the function using the request body:

Figure 9.1 – HTTP request

The invocation can be defined as synchronous as it can return a response that's been constructed within the function.
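As an illustration, a minimal HTTP function for the Python runtime could look like the following sketch. It assumes the runtime passes in a Flask request object; the `name` field and the greeting text are purely illustrative.

```python
def hello_world(request):
    """HTTP function: `request` is assumed to be the Flask request object."""
    # Prefer a JSON request body, then fall back to a query-string parameter.
    body = request.get_json(silent=True)
    if not isinstance(body, dict):
        body = {}
    name = body.get("name") or request.args.get("name") or "World"
    # The returned string is sent back to the caller as the HTTP response body.
    return "Hello, {}!".format(name)
```

Because the response is built and returned inside the function, the caller receives it as part of the same request, which is what makes the invocation synchronous.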

Interesting Fact

Don't expect a question on this on the exam. However, it might be interesting to know that Cloud Functions handles HTTP requests using common frameworks. For Node.js, this is Express 4.16.3, for Python, this is Flask 1.0.2, and for Go, this is the standard http.HandlerFunc interface.

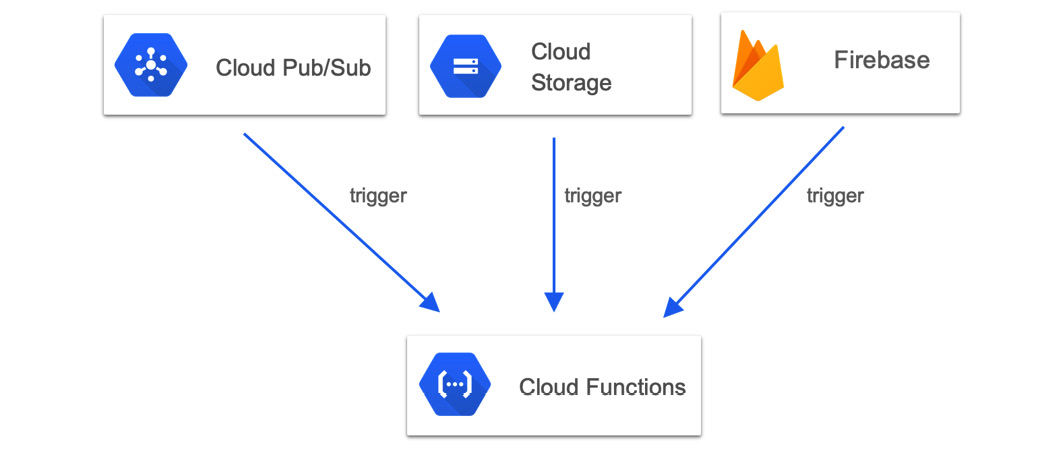

Background functions

Background functions are invoked by events such as changes in a Cloud Storage bucket, messages in a Cloud Pub/Sub topic, or one of the supported Firebase events:

Figure 9.2 – Background functions

In the preceding diagram, we can see various triggers for Cloud Functions; that is, Cloud Pub/Sub, Cloud Storage, and Firebase.
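For comparison with HTTP functions, here is a rough sketch of a Python background function reacting to a Cloud Storage event. It assumes the two-argument `(event, context)` signature used by the Python runtime, with the bucket and object name carried in the event payload. The function name `hello_gcs` is just an example.

```python
def hello_gcs(event, context):
    """Background function sketch for a Cloud Storage event."""
    # `event` is assumed to be a dict describing the changed object.
    bucket = event["bucket"]
    name = event["name"]
    # `context` carries metadata about the event, such as its type.
    message = "{}: gs://{}/{}".format(context.event_type, bucket, name)
    print(message)  # Printed output ends up in the function's logs.
    # The return value is only used here to make the sketch easy to test;
    # there is no caller waiting for a response.
    return message
```

Unlike an HTTP function, nothing is returned to a caller here; the function simply does its work in response to the event.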

Next, let's take a look at events.

Events

Events can be defined as things happening in or outside the GCP environment. When they occur, you might want certain actions to be triggered. An example of an event might be a file that's been added to Cloud Storage, a change that was made to your database table, or a new resource that has been provisioned to GCP, to name a few. These events can come from one of the following providers:

- HTTP

- Cloud Storage

- Cloud Pub/Sub

- Cloud Logging via Pub/Sub sink

- Cloud Firestore

- Firebase Realtime Database, Storage, and Authentication

If you create a sink to forward the logs to Pub/Sub, then you can trigger Cloud Functions (for more details, check out This Post, Monitoring Your Infrastructure).
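A function behind such a sink receives each log entry as a Pub/Sub message. The sketch below assumes the message data is a base64-encoded JSON log entry with fields such as `severity`, `textPayload`, and `jsonPayload`. The function name `handle_log_entry` and the summary format are made up for illustration.

```python
import base64
import json


def handle_log_entry(event, context):
    """Sketch: decode a log entry that a logging sink forwarded to Pub/Sub."""
    # Pub/Sub delivers the message body base64-encoded in event["data"].
    raw = base64.b64decode(event["data"]).decode("utf-8")
    entry = json.loads(raw)

    severity = entry.get("severity", "DEFAULT")
    # An entry may carry plain text or a structured payload.
    text = entry.get("textPayload") or json.dumps(entry.get("jsonPayload", {}))

    summary = "[{}] {}".format(severity, text)
    print(summary)
    return summary  # Returned only to keep the sketch testable.
```

From here, the function could raise an alert or call another service, depending on the severity.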

Next, let's look at triggers.

Triggers

For your function to react to an event, a trigger needs to be configured. The actual binding of the trigger happens at deployment time. We will have a look at how to deploy functions with different kinds of triggers in the Deploying Cloud Functions section.

Other considerations

When using Cloud Functions, you should be aware of a couple of features and considerations. Let's have a look at these now.

Cloud SQL connectivity

As we mentioned previously, Cloud Functions is stateless, so state needs to be saved externally, for example in Cloud Storage or in a database such as Cloud SQL. In general, any external storage can be used. We introduced Cloud SQL in This Post, Google Cloud Platform Core Services. To remind you, it is a managed MySQL, PostgreSQL, or SQL Server database. With Cloud Functions, you can connect to Cloud SQL using a local socket interface that's provided in the Cloud Functions execution environment. This eliminates the need to expose your database to a public network.
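As a sketch of what the socket-based connection might look like for a MySQL instance, the helper below only builds the connection arguments. It assumes the socket is exposed under `/cloudsql/` followed by the instance connection name. The environment variable names are hypothetical, and the driver (for example, PyMySQL) is an assumption that is deliberately not imported here.

```python
import os


def cloud_sql_socket_kwargs(env=None):
    """Build keyword arguments for a MySQL driver that supports Unix sockets.

    The variable names INSTANCE_CONNECTION_NAME, DB_USER, DB_PASS, and DB_NAME
    are assumptions for this sketch; set them however suits your deployment.
    """
    env = os.environ if env is None else env
    return {
        # Assumed socket location: /cloudsql/<project>:<region>:<instance>
        "unix_socket": "/cloudsql/{}".format(env["INSTANCE_CONNECTION_NAME"]),
        "user": env["DB_USER"],
        "password": env["DB_PASS"],
        "database": env["DB_NAME"],
    }


if __name__ == "__main__":
    example = {
        "INSTANCE_CONNECTION_NAME": "my-project:europe-west1:my-instance",
        "DB_USER": "app",
        "DB_PASS": "secret",
        "DB_NAME": "orders",
    }
    print(cloud_sql_socket_kwargs(example)["unix_socket"])
```

With a driver such as PyMySQL installed, these arguments could then be passed along the lines of `pymysql.connect(**cloud_sql_socket_kwargs())`. No host or port is involved, so the database does not need a public address.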

Connecting to internal resources in a VPC network

If your function needs to access services within a VPC, you can connect to them directly, bypassing the public network. To do this, you need to create a Serverless VPC Access connector from the network menu and refer to the connector when you deploy the function. Note that this does not work with Shared VPC and legacy networks.

Environment variables

Cloud Functions allows you to set environment variables that are available during the runtime of the function. These variables are stored in the function's backend and follow the same life cycle as the function itself. These variables are set using the --set-env-vars flag; for example:

gcloud functions deploy env_vars --runtime python37 --set-env-vars FOO=bar --trigger-http
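Inside the function, such a variable can be read like any other environment variable. A minimal sketch of the matching `env_vars` function, assuming it is deployed as an HTTP function with the command above:

```python
import os


def env_vars(request):
    """Return the value of FOO, which was set at deploy time."""
    # Fall back to a readable message if the variable was not set.
    return os.environ.get("FOO", "Specified environment variable is not set.")
```

With the deployment shown above, calling the function's URL should return `bar`.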

Important Note

The first time you use Cloud Functions, you will be asked to enable the API.

Next, let's take a look at cold starts.

Cold start

As we mentioned previously, Cloud Functions executes using function instances. These new instances are created in the following cases:

- When the function is deployed

- When scaling up is required to handle the load

- When an existing instance needs to be replaced

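Each new instance has to load the runtime, your code, and its dependencies before it can serve a request. This delay is known as a cold start, and it can impact the performance of your application. Google provides a set of tips and tricks to help reduce the impact of cold starts. Check out the Further reading section for a link to a detailed guide.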
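One commonly suggested technique is to create expensive objects lazily and keep them in a global variable, so that later invocations on the same warm instance can reuse them. The sketch below illustrates the idea; `_build_expensive_client` is a hypothetical stand-in for slow setup work such as creating an API client.

```python
import time

# Cached per instance: it survives between invocations only while the
# instance stays warm, and every new instance starts with None again.
_client = None


def _build_expensive_client():
    """Hypothetical stand-in for slow initialization work."""
    return {"created_at": time.time()}


def get_client():
    """Create the client on first use, then reuse it."""
    global _client
    if _client is None:
        _client = _build_expensive_client()
    return _client


def handler(request):
    client = get_client()
    return "client created at {}".format(client["created_at"])
```

The cache is an optimization only. The function must still behave correctly when it starts on a fresh instance with nothing cached.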

Local emulator

Deploying functions to GCP takes time. If you want to speed up tests, you can use a local emulator. This only works with Node.js and allows you to deploy, run, and debug your functions.

In the next section, we will learn how to deploy a Cloud Function.

Deploying Cloud Functions

Cloud Functions can be deployed using a CLI, the Google Cloud Console, or with APIs. In this section, we will have a look at the first two methods since it's likely that you will be tested on them in the exam.

Deploying Cloud Functions with the Google Cloud Console

To deploy Cloud Functions from the Google Cloud Console, follow these steps:

- Select Cloud Functions from the hamburger menu. You will see the Cloud Functions window. Click on CREATE FUNCTION:

Figure 9.3 – The Cloud Functions menu

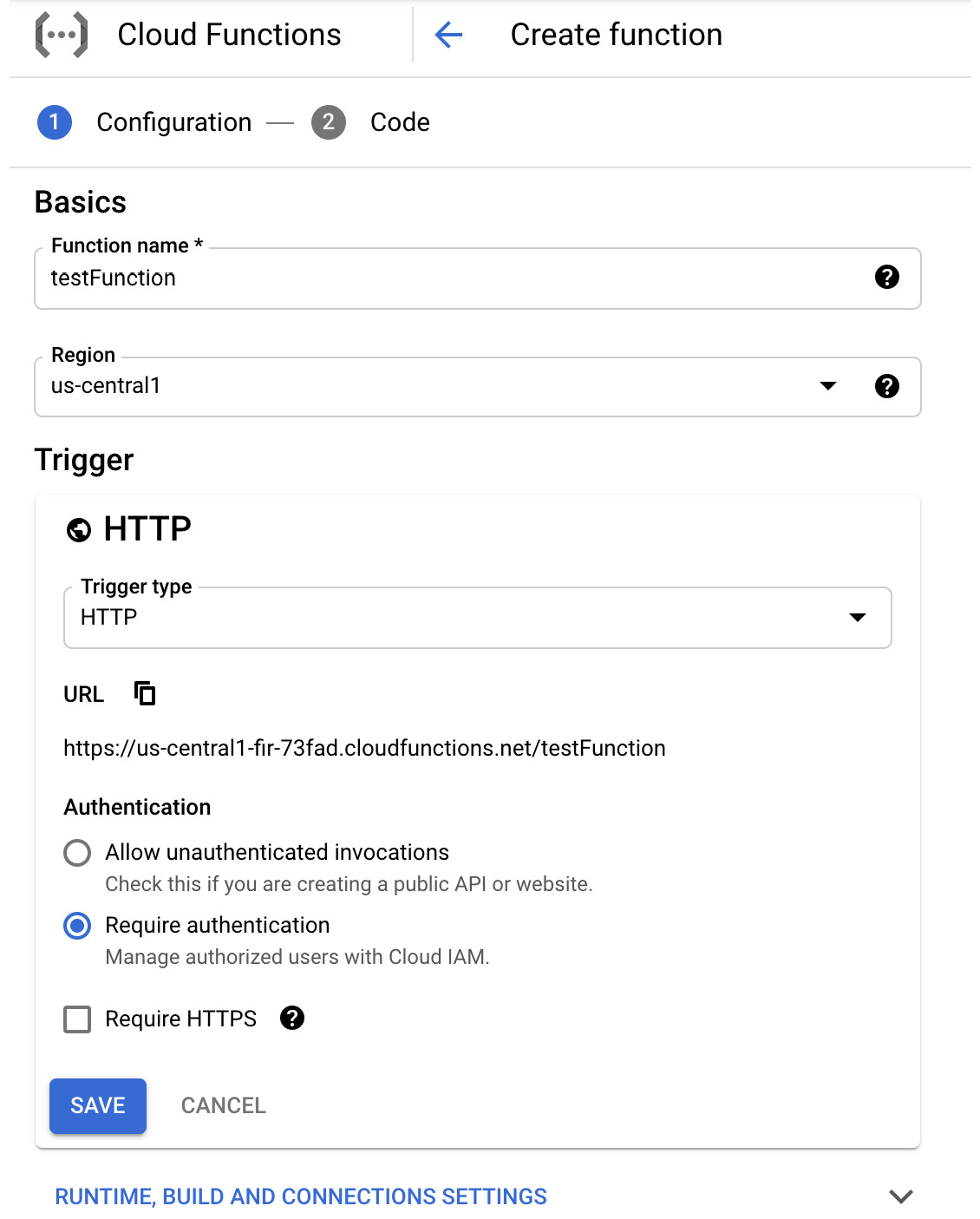

- Fill in the name of your function and the region where it will be hosted:

Figure 9.4 – Create function

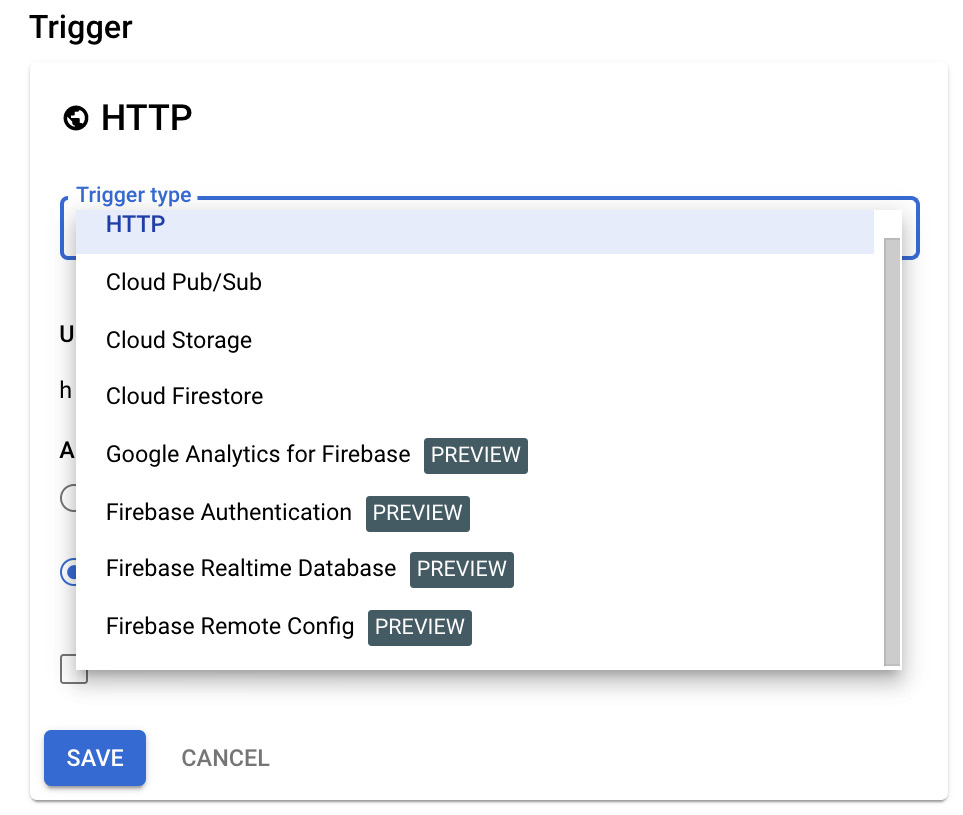

- Choose the trigger type from the drop-down menu:

Figure 9.5 – Trigger

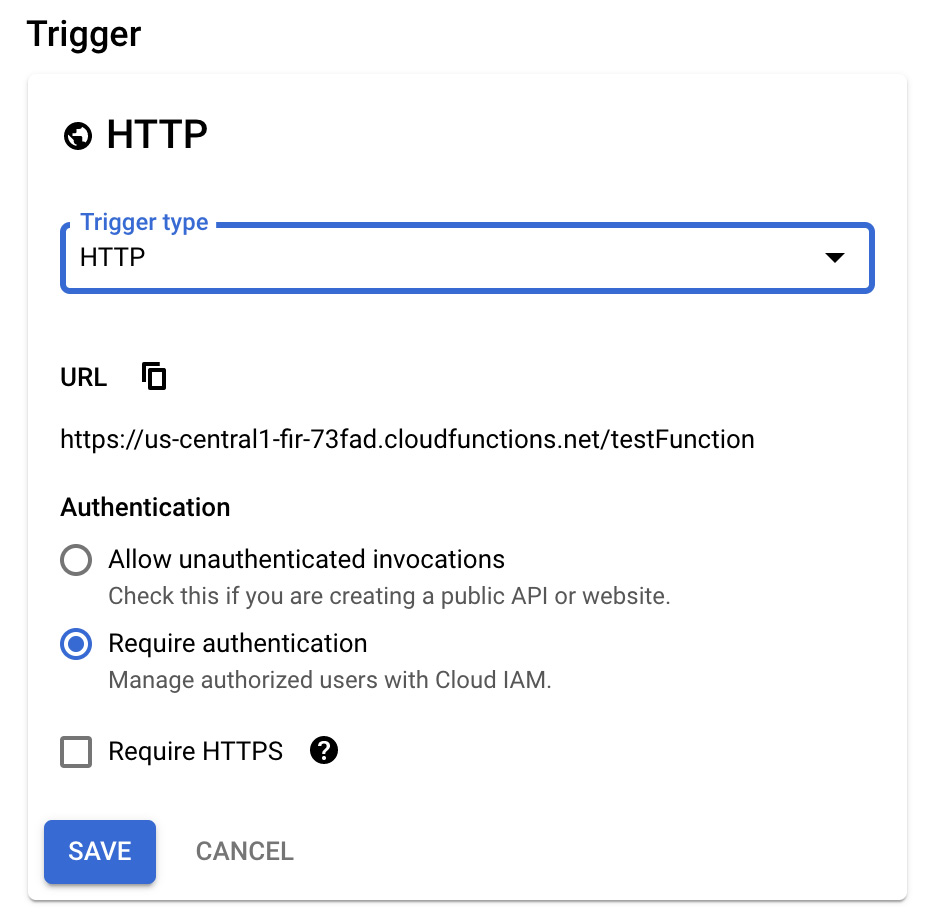

- Click the SAVE button. If you have chosen to use an HTTP trigger, the URL to call your function with will be generated for you in the URL section, like so:

Figure 9.6 – Cloud Function URL

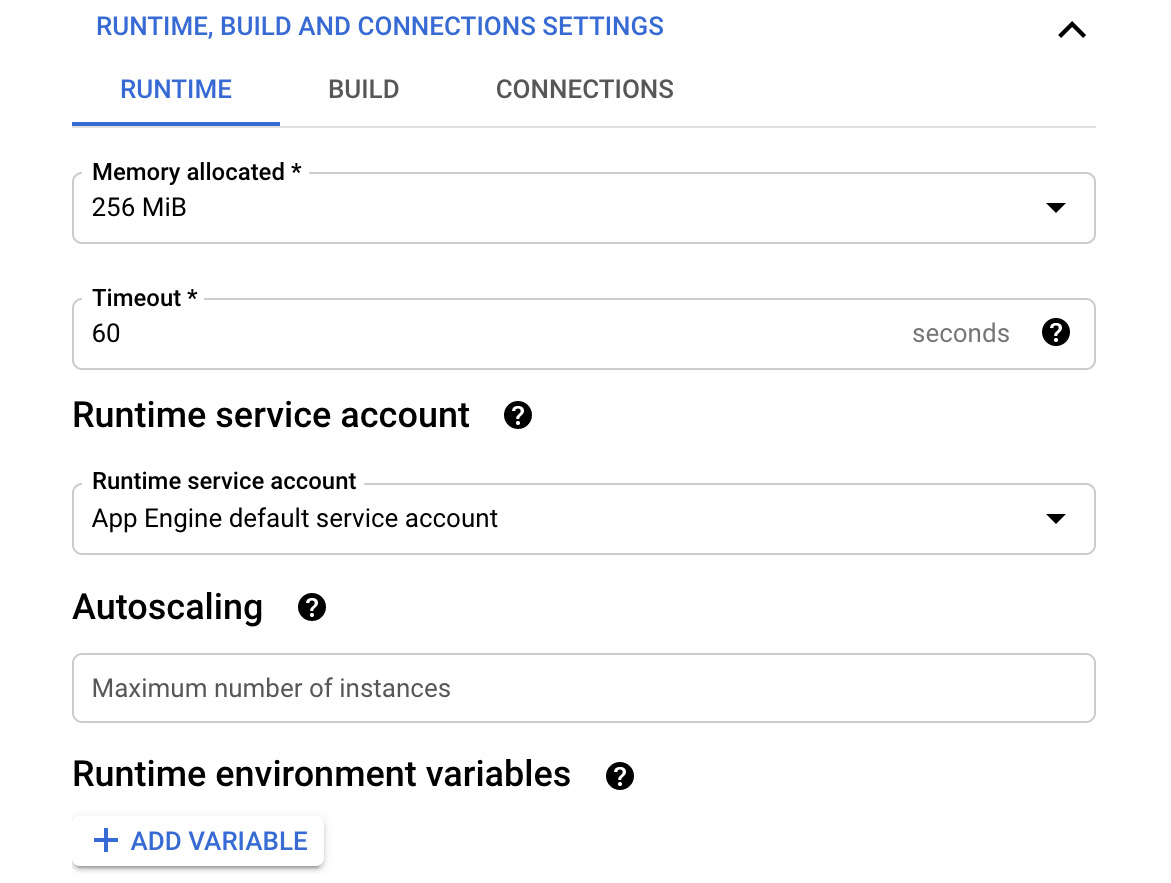

- If you want to set more advanced settings, click on RUNTIME, BUILD AND CONNECTION SETTINGS. In the RUNTIME tab, you can set Memory allocated and Timeout. You can also define the service account that will run the function. Finally, you can define the minimum and the maximum number of instances:

Figure 9.7 – Runtime options

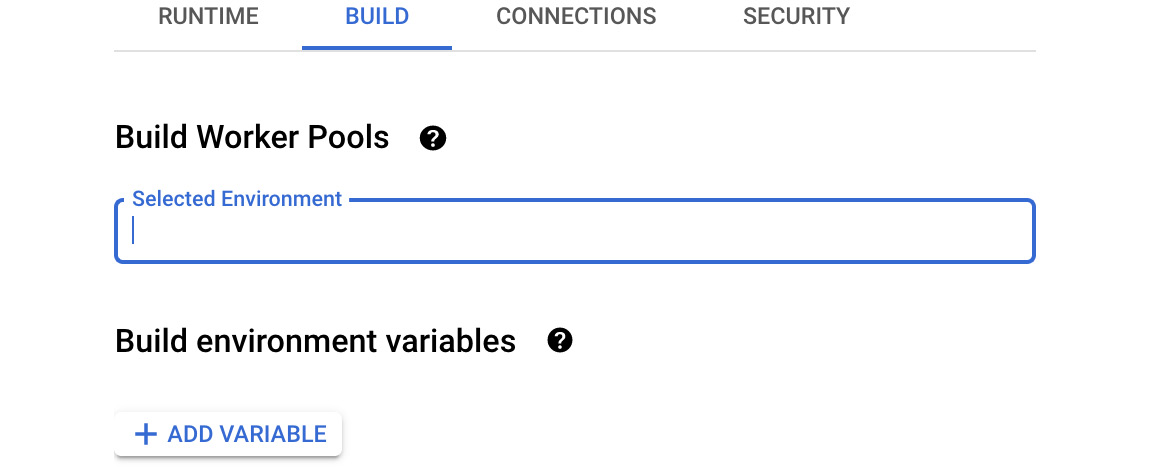

- In the BUILD tab, you can define the variables and worker pools that will be used to build the function:

Figure 9.8 – Build options

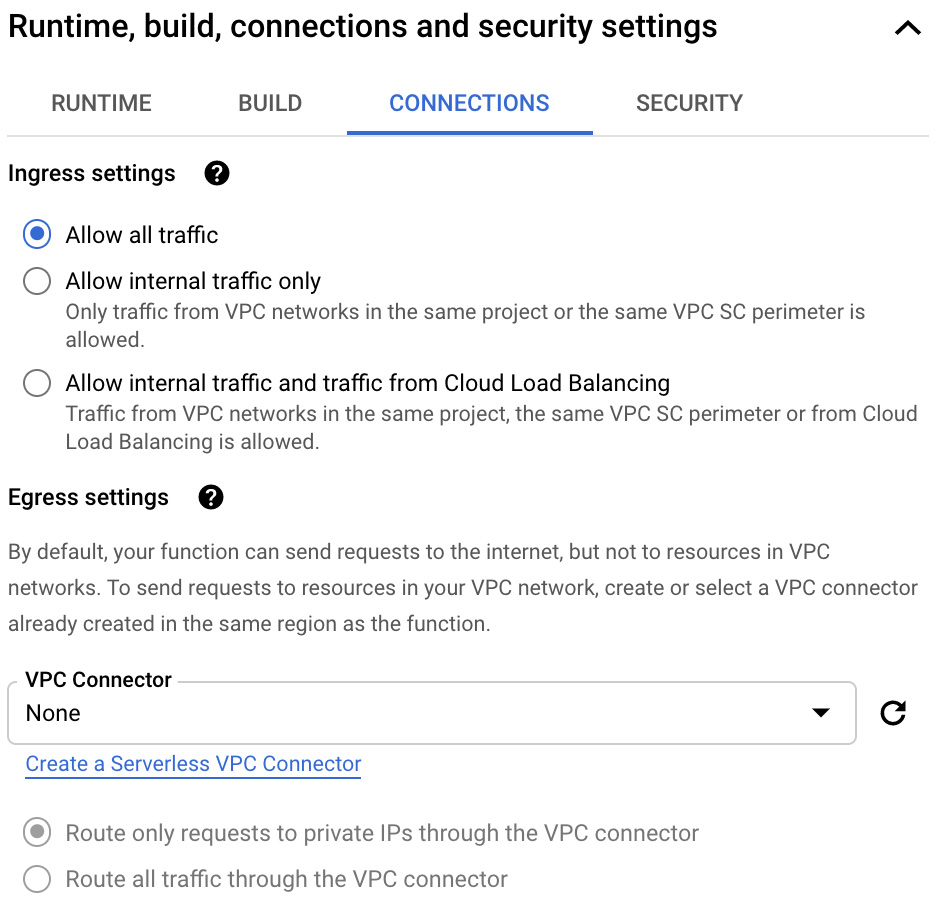

- In the CONNECTIONS tab, you can define various ingress settings. This can limit where the traffic to trigger the function can come from. You may wish to create a connector so that the function can connect to your VPC:

Figure 9.9 – CONNECTIONS

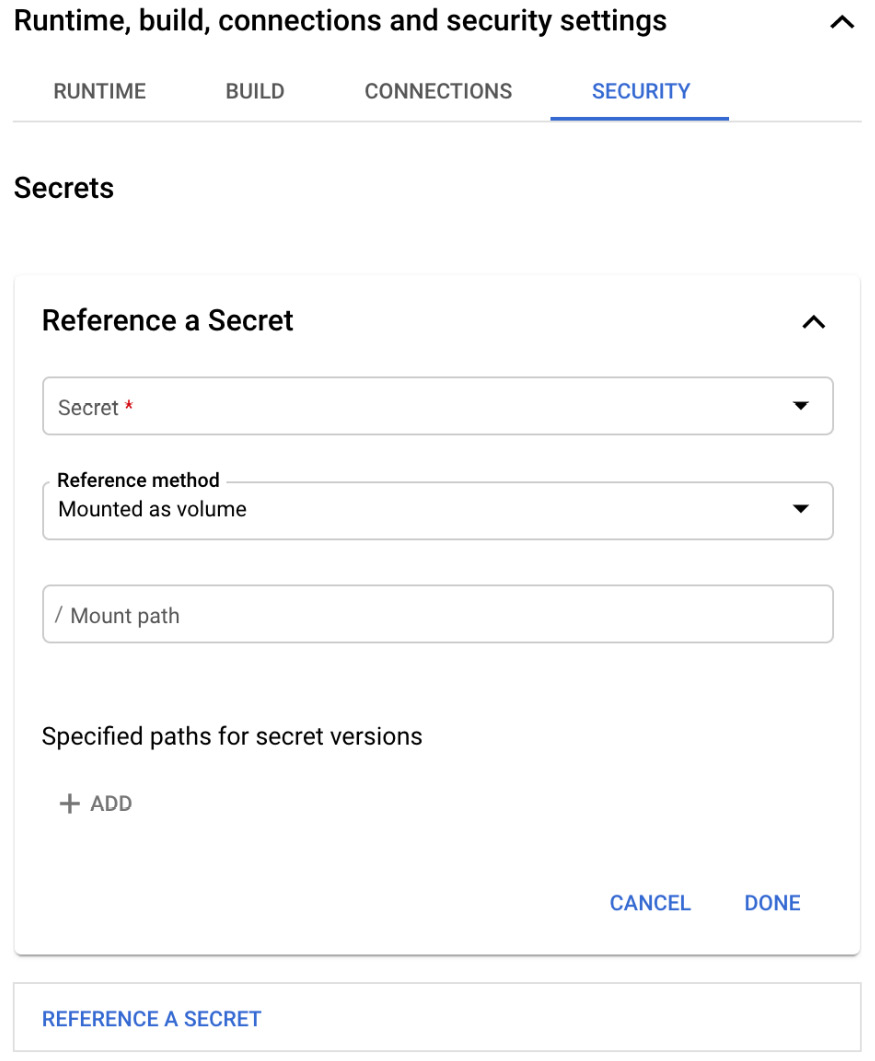

- In the SECURITY tab, you can set up a secret that can be consumed by the function:

Figure 9.10 – SECURITY

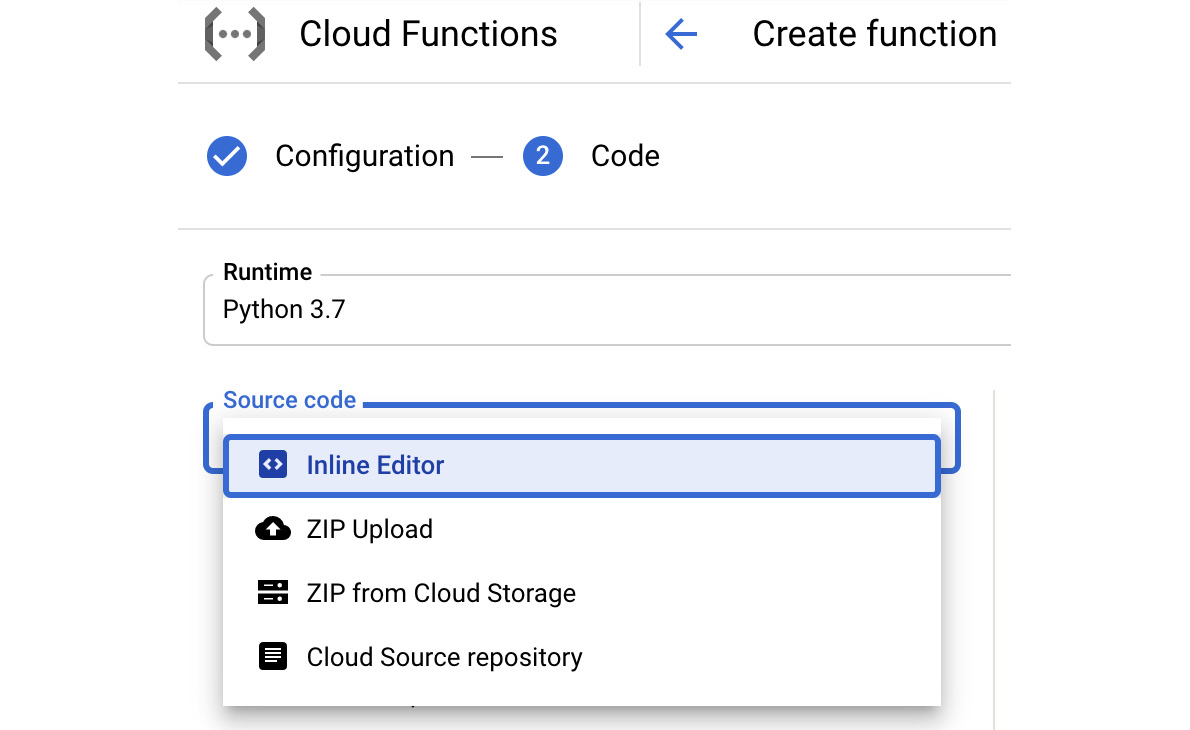

- Click the Next button. Select how you will provide your code. You can use the Inline Editor, upload a ZIP file from your local machine (ZIP Upload), point to a ZIP file in Cloud Storage, or use a Cloud Source repository:

Figure 9.11 – Source code

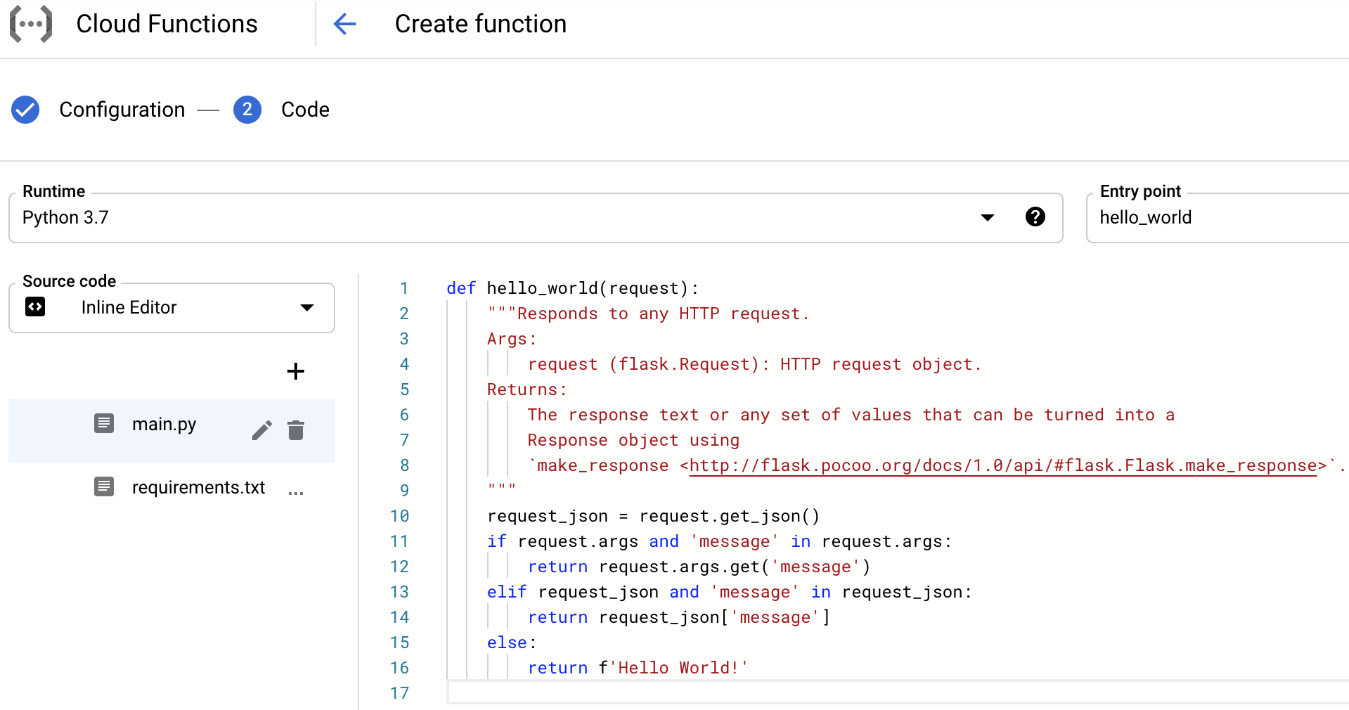

- From the Runtime dropdown, choose the programming language you will use to write your functions. For this example, we decided to use the inline editor for Python 3.7. Therefore, we need to provide two files: main.py, where we will define the function, and requirements.txt, with dependencies:

Figure 9.12 – The main.py file

- In the Entry point field, we must define the name of the entry point; for example, hello_world:

Figure 9.13 – Entry point

- Now, we can simply deploy the function by clicking on the DEPLOY button:

Figure 9.14 – DEPLOY

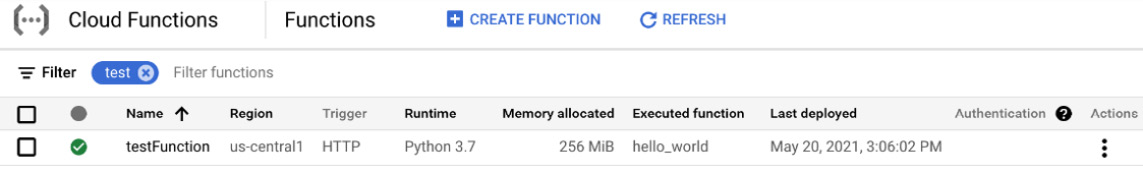

- Once your function has been deployed, you will be able to see it in the Cloud Functions list:

Figure 9.15 – Function deployed

Now, your function is ready to execute.

Deploying functions with the gcloud command

Now that we have seen how to deploy the function using the Google Cloud Console, it will be easier to explain the parameters and flags for the gcloud command.

To deploy Cloud Functions, we can use the following command:

gcloud functions deploy $FUNCTION_NAME \
--region=$REGION \
--entry-point=$ENTRY_POINT \
--memory=$MEMORY \
--runtime=$RUNTIME \
--service-account=$SERVICE_ACCOUNT \
--source=$SOURCE \
--stage-bucket=$STAGE_BUCKET \
--timeout=$TIMEOUT \
--retry

Here, we have the following options:

- $REGION: The region of the function.

- $ENTRY_POINT: The name of the function (as defined in the source code).

- $MEMORY: The limit on the amount of memory that can be used. The allowed values are 128 MB, 256 MB, 512 MB, 1,024 MB, and 2,048 MB.

- $SERVICE_ACCOUNT: The IAM service account associated with the function.

- $SOURCE: The source code's location. Can be either Cloud Storage, a source repository, or the local filesystem.

- $STAGE_BUCKET: If a function is deployed from a local directory, it defines the name of the Cloud Storage bucket that the source code will be stored in.

- $TIMEOUT: The function's execution timeout.

- --retry: Applies only to background functions. If present, it defines that the function should retry running if it's not executed successfully.
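When retries are enabled, a function that keeps failing may be retried repeatedly, so it is worth guarding against endlessly reprocessing stale events. The following sketch drops events older than a cut-off. It assumes `context.timestamp` is an RFC 3339 string ending in `Z`. The ten-minute cut-off, the function name, and the `now` parameter (added to make the logic testable) are all choices made for this example.

```python
import re
from datetime import datetime, timezone

MAX_AGE_SECONDS = 600  # Assumed cut-off for this sketch; tune to your needs.


def _parse_timestamp(ts):
    """Parse an RFC 3339 timestamp using only the standard library."""
    base = re.sub(r"\.\d+", "", ts)  # Drop fractional seconds.
    if base.endswith("Z"):
        base = base[:-1] + "+00:00"  # fromisoformat needs an explicit offset.
    return datetime.fromisoformat(base)


def process_with_cutoff(event, context, now=None):
    """Background function sketch that gives up on events that are too old."""
    now = now or datetime.now(timezone.utc)
    age = (now - _parse_timestamp(context.timestamp)).total_seconds()
    if age > MAX_AGE_SECONDS:
        # Returning normally (instead of raising) ends the retry cycle.
        print("Dropping event {} (age {:.0f}s)".format(context.event_id, age))
        return "dropped"
    # Real work would go here; raising an exception signals a failure,
    # which is what leads to another attempt when retries are enabled.
    print("Processing event {}".format(context.event_id))
    return "processed"
```

Since the same event may be delivered more than once, the real work should also be safe to repeat.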

Next, let's define our triggers.

Triggers

After defining the necessary parameters, you can define the following triggers, depending on how you want your function to be initiated.

To define an HTTP trigger, use the following flag:

--trigger-http

An endpoint will be assigned to the function.

To trigger a function on changes to a Cloud Storage bucket, use the following flag:

--trigger-bucket=$TRIGGER_BUCKET

Here, we have the following option:

- $TRIGGER_BUCKET: The Google Cloud Storage bucket name. Every change that's made to the files in this bucket will trigger function execution.

To trigger a function on messages that arrive in a Pub/Sub topic, use the following flag:

--trigger-topic=$TRIGGER_TOPIC

Here, we have the following option:

- $TRIGGER_TOPIC: The name of the Pub/Sub topic. Messages published to this topic will trigger the function. The message's content will be passed to the function.

For other sources, such as Firebase, use the following flags:

--trigger-event=$EVENT_TYPE

--trigger-resource=$RESOURCE

Here, we have the following options:

- $EVENT_TYPE: The action that should trigger the function

- $RESOURCE: A resource from which the event occurs

Let's have a look at an example of configuring a trigger from Pub/Sub:

gcloud functions deploy hello_pubsub --runtime python37 --trigger-topic mytopic

This will deploy a function called hello_pubsub, which will be triggered whenever a message arrives in the mytopic Pub/Sub topic.
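The source for such a function could be as small as the sketch below, placed in main.py. It assumes the Python background function signature and that the Pub/Sub message body arrives base64-encoded under the `data` key. The greeting text is illustrative.

```python
import base64


def hello_pubsub(event, context):
    """Background function sketch triggered by a Pub/Sub message."""
    if "data" in event:
        # The message body is assumed to arrive base64-encoded.
        name = base64.b64decode(event["data"]).decode("utf-8")
    else:
        name = "World"
    greeting = "Hello, {}!".format(name)
    print(greeting)  # Visible in the function's logs.
    return greeting  # Returned only to keep the sketch testable.
```

Publishing a message to mytopic should then produce a greeting in the function's logs.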

Important Note

You may be interested in looking at some more advanced triggers, such as using Firebase authentication. Check out https://cloud.google.com/functions/docs/calling/ for examples for every possible trigger.

In the next section, we will review the IAM roles that are available for Cloud Functions.

IAM

Access to Google Cloud Functions is secured with IAM. Let's have a look at a list of predefined roles, along with a short description of each:

- Cloud Functions Admin: Has the right to create, update, and delete functions. Can set IAM policies and view source code.

- Cloud Functions Developer: Has the right to create, update, and delete functions, as well as view source code. Cannot set IAM policies.

- Cloud Functions Viewer: Has the right to view functions. Cannot get IAM policies, nor view the source code.

- Cloud Functions Invoker: Has the right to invoke an HTTP function using its public URL.

Note that for the Cloud Functions Developer role to work, you must also assign the user the IAM Service Account User role on the Cloud Functions runtime service account.
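When a function is not open to unauthenticated callers, an account with the Invoker role typically calls it by sending an identity token in the Authorization header. The sketch below only builds such a request with the standard library. The URL is hypothetical, and how the token is obtained (for example, with `gcloud auth print-identity-token`) is left outside the example.

```python
import urllib.request


def build_authenticated_request(url, identity_token):
    """Build a GET request that carries an identity token as a bearer token."""
    return urllib.request.Request(
        url,
        headers={"Authorization": "Bearer {}".format(identity_token)},
    )


if __name__ == "__main__":
    # Hypothetical function URL and placeholder token, for illustration only.
    req = build_authenticated_request(
        "https://REGION-PROJECT_ID.cloudfunctions.net/hello_world",
        "ID_TOKEN_GOES_HERE",
    )
    print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. The call should only succeed if the identity behind the token is allowed to invoke the function.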

Quotas and limits

Google Cloud Functions comes with predefined quotas. To review the current quotas and request an increase to these limits, go to IAM & Admin | Quotas in the hamburger menu. We recommend that you become familiar with the limits for each service as this can have an impact on your scalability. For Cloud Functions, we should be aware of the following three types of quotas:

- Resource limits: Defines the total amount of resources your functions can consume

- Time limits: Defines how long things can run for

- Rate limits: Defines the rate at which you can call the Cloud Functions API

The list of values is quite extensive. Check out the Further reading section if you wish to see a detailed list.

Pricing

The price of Cloud Functions consists of multiple factors. These include the number of invocations (Invocations), the compute time (Compute time), and the outbound network traffic (Networking). These are shown in the following diagram:

Figure 9.16 – Cloud Functions pricing

Remember that there is a monthly free usage tier that you can play around with without generating any cost. At the time of writing, it consists of 2 million invocations, 1 million seconds of compute time, and 5 GB of egress network traffic. Enjoy it!

Summary

In this article, we talked about Cloud Functions and several use cases where it works perfectly. We talked about the two types of functions, namely HTTP and background functions, and saw that functions can be triggered by a particular event or an HTTP request. Finally, we looked at how a function can be deployed both with the Google Cloud Console and with the gcloud command. For the exam, it is important to understand the use cases of Cloud Functions and when using them could be to our advantage.

With this article, we have concluded our look at the GCP compute options. In the next article, we will have a look at networking.

Further reading

For more information regarding the topics that were covered in this article, take a look at the following resources:

- Cloud Functions behind the scenes: https://cloud.google.com/functions/docs/concepts/exec

- Cloud Functions and VPC: https://cloud.google.com/functions/docs/connecting-vpc

- Local Emulator: https://cloud.google.com/functions/docs/emulator

- Cold Starts: https://cloud.google.com/functions/docs/bestpractices/tips

- Quotas: https://cloud.google.com/functions/quotas

- Pricing: https://cloud.google.com/functions/pricing-summary/

- The gcloud command: https://cloud.google.com/sdk/gcloud/reference/functions/deploy

- Cloud Run: https://cloud.google.com/run/