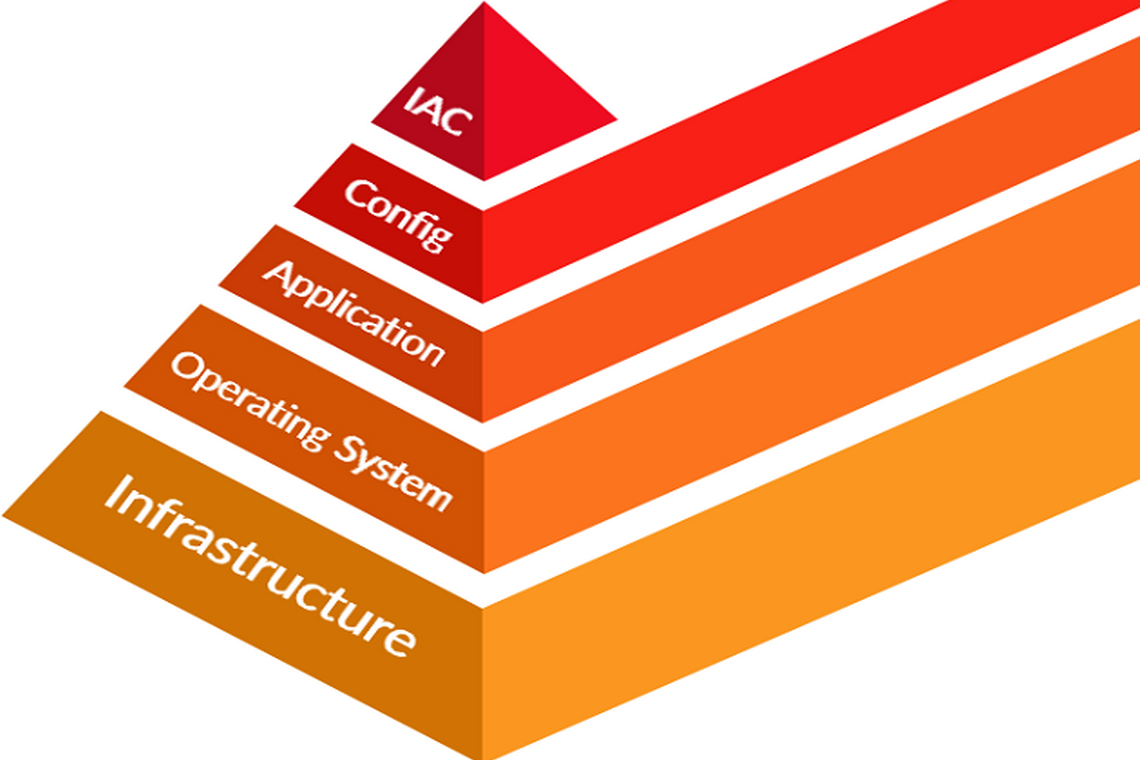

The idea behind infrastructure as code (IaC) is that you write and execute code to define, deploy, update, and destroy your infrastructure. This represents an important shift in mindset in which you treat all aspects of operations as software, even those aspects that represent hardware (e.g., setting up physical servers). In fact, a key insight of DevOps is that you can manage almost everything in code, including servers, databases, networks, log files, application configuration, documentation, automated tests, deployment processes, and so on.

There are five broad categories of IaC tools:

- Ad hoc scripts

- Configuration management tools

- Server templating tools

- Orchestration tools

- Provisioning tools

Let’s look at these one at a time.

Ad Hoc Scripts

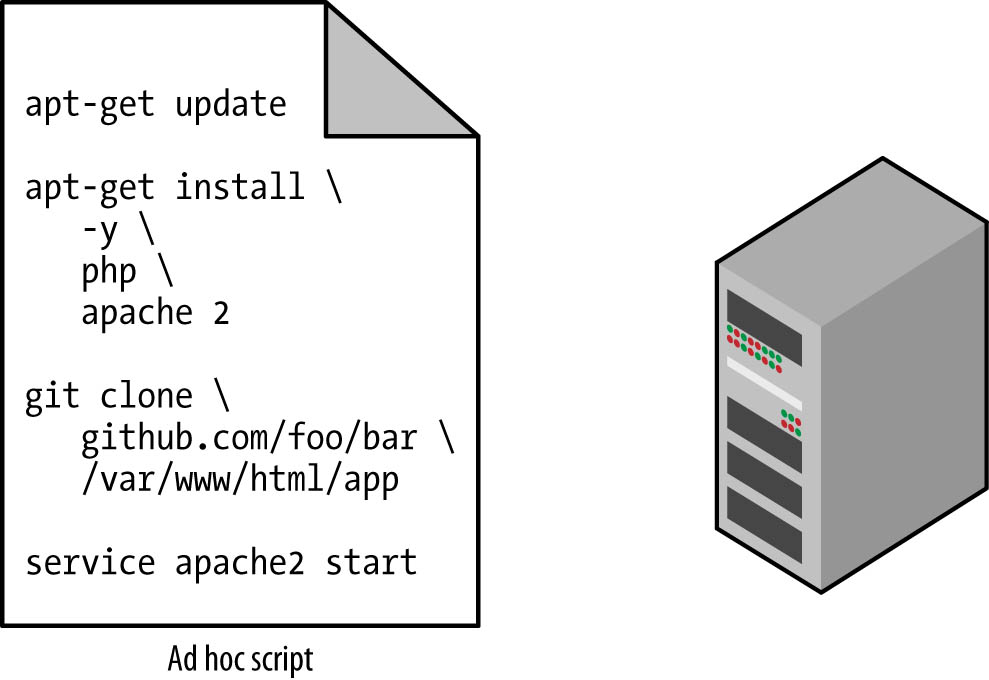

The most straightforward approach to automating anything is to write an ad hoc script. You take whatever task you were doing manually, break it down into discrete steps, use your favorite scripting language (e.g., Bash, Ruby, Python) to define each of those steps in code, and execute that script on your server, as shown in Figure 1.

Figure 1. Running an ad hoc script on your server

For example, here is a Bash script called setup-webserver.sh that configures a web server by installing dependencies, checking out some code from a Git repo, and firing up an Apache web server:

    # Update the apt-get cache
    sudo apt-get update

    # Install PHP and Apache
    sudo apt-get install -y php apache2

    # Copy the code from the repository
    sudo git clone https://github.com/brikis98/php-app.git /var/www/html/app

    # Start Apache
    sudo service apache2 start

The great thing about ad hoc scripts is that you can use popular, general-purpose programming languages and you can write the code however you want. The terrible thing about ad hoc scripts is that you can use popular, general-purpose programming languages and you can write the code however you want.

Whereas tools that are purpose-built for IaC provide concise APIs for accomplishing complicated tasks, if you’re using a general-purpose programming language, you need to write completely custom code for every task. Moreover, tools designed for IaC usually enforce a particular structure for your code, whereas with a general-purpose programming language, each developer will use their own style and do something different. Neither of these problems is a big deal for an eight-line script that installs Apache, but it gets messy if you try to use ad hoc scripts to manage dozens of servers, databases, load balancers, network configurations, and so on.

If you’ve ever had to maintain a large repository of Bash scripts, you know that it almost always devolves into a mess of unmaintainable spaghetti code. Ad hoc scripts are great for small, one-off tasks, but if you’re going to be managing all of your infrastructure as code, then you should use an IaC tool that is purpose-built for the job.

Configuration Management Tools

Chef, Puppet, Ansible, and SaltStack are all configuration management tools, which means that they are designed to install and manage software on existing servers. For example, here is an Ansible Role called web-server.yml that configures the same Apache web server as the setup-webserver.sh script:

    - name: Update the apt-get cache
      apt:
        update_cache: yes

    - name: Install PHP
      apt:
        name: php

    - name: Install Apache
      apt:
        name: apache2

    - name: Copy the code from the repository
      git: repo=https://github.com/brikis98/php-app.git dest=/var/www/html/app

    - name: Start Apache
      service: name=apache2 state=started enabled=yes

The code looks similar to the Bash script, but using a tool like Ansible offers a number of advantages:

Coding conventions

Ansible enforces a consistent, predictable structure, including documentation, file layout, clearly named parameters, secrets management, and so on. While every developer organizes their ad hoc scripts in a different way, most configuration management tools come with a set of conventions that makes it easier to navigate the code.

Idempotence

Writing an ad hoc script that works once isn’t too difficult; writing an ad hoc script that works correctly even if you run it over and over again is a lot more difficult. Every time you go to create a folder in your script, you need to remember to check whether that folder already exists; every time you add a line of configuration to a file, you need to check that line doesn’t already exist; every time you want to run an app, you need to check that the app isn’t already running.

Code that works correctly no matter how many times you run it is called idempotent code. To make the Bash script from the previous section idempotent, you’d need to add many lines of code, including lots of if-statements. Most Ansible functions, on the other hand, are idempotent by default. For example, the web-server.yml Ansible role will install Apache only if it isn’t installed already and will try to start the Apache web server only if it isn’t running already.
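To make the cost of hand-rolled idempotence concrete, here is a minimal Bash sketch of two of the checks described above: creating a folder only if it is missing, and adding a configuration line only if it is absent. The helper names `ensure_dir` and `ensure_line`, the `ServerName` line, and the temporary directory are illustrative assumptions, not part of setup-webserver.sh.

```shell
#!/usr/bin/env bash
# Illustrative sketch only: helper names and paths are hypothetical.
set -euo pipefail

# Create a directory only if it does not already exist.
ensure_dir() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    mkdir -p "$dir"
  fi
}

# Append a line to a file only if that exact line is not already present.
ensure_line() {
  local line="$1" file="$2"
  touch "$file"
  if ! grep -qxF -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Demo in a throwaway directory: the second pass finds everything
# already in place and changes nothing.
demo_root="$(mktemp -d)"
for _ in 1 2; do
  ensure_dir "$demo_root/app"
  ensure_line "ServerName localhost" "$demo_root/app/site.conf"
done

# Count the lines in the file; it stays at 1 after both passes.
grep -c '' "$demo_root/app/site.conf"
```

Every real step (package installs, the Git checkout, starting a service) would need its own guard of this kind, which is the bookkeeping that idempotent Ansible modules take care of for you.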

Distribution

Ad hoc scripts are designed to run on a single, local machine. Ansible and other configuration management tools are designed specifically for managing large numbers of remote servers, as shown in Figure 2.

Figure 2. A configuration management tool like Ansible can execute your code across a large number of servers

For example, to apply the web-server.yml role to five servers, you first create a file called hosts that contains the IP addresses of those servers:

    [webservers]
    11.11.11.11
    11.11.11.12
    11.11.11.13
    11.11.11.14
    11.11.11.15

Next, you define the following Ansible playbook:

    - hosts: webservers
      roles:
        - webserver

Finally, you execute the playbook as follows:

    ansible-playbook playbook.yml

This instructs Ansible to configure all five servers in parallel. Alternatively, by setting a parameter called serial in the playbook, you can do a rolling deployment, which updates the servers in batches. For example, setting serial to 2 directs Ansible to update two of the servers at a time, until all five are done. Duplicating any of this logic in an ad hoc script would take dozens or even hundreds of lines of code.
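As a rough illustration of what duplicating this logic by hand involves, the following Bash sketch processes a list of servers in batches of two. The `configure_server` function is a hypothetical placeholder that only prints a message; a real script would also need SSH access, error handling, retries, and health checks, which is where the extra lines come from.

```shell
#!/usr/bin/env bash
# Illustrative sketch only: configure_server is a hypothetical placeholder.
set -euo pipefail

# Stand-in for the real per-server work (e.g., connect and run setup steps).
configure_server() {
  echo "configuring $1"
}

# Update hosts in batches of the given size, finishing each batch
# before starting the next one.
rolling_update() {
  local batch_size="$1"
  shift
  local hosts=("$@")
  local i host
  for ((i = 0; i < ${#hosts[@]}; i += batch_size)); do
    local batch=("${hosts[@]:i:batch_size}")
    echo "batch: ${batch[*]}"
    for host in "${batch[@]}"; do
      configure_server "$host" &   # servers within a batch run in parallel
    done
    wait                           # block until the whole batch is done
  done
}

rolling_update 2 11.11.11.11 11.11.11.12 11.11.11.13 11.11.11.14 11.11.11.15
```

With five servers and a batch size of 2, this produces three batches (two, two, and one server), mirroring what setting serial to 2 does in the playbook.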

Server Templating Tools

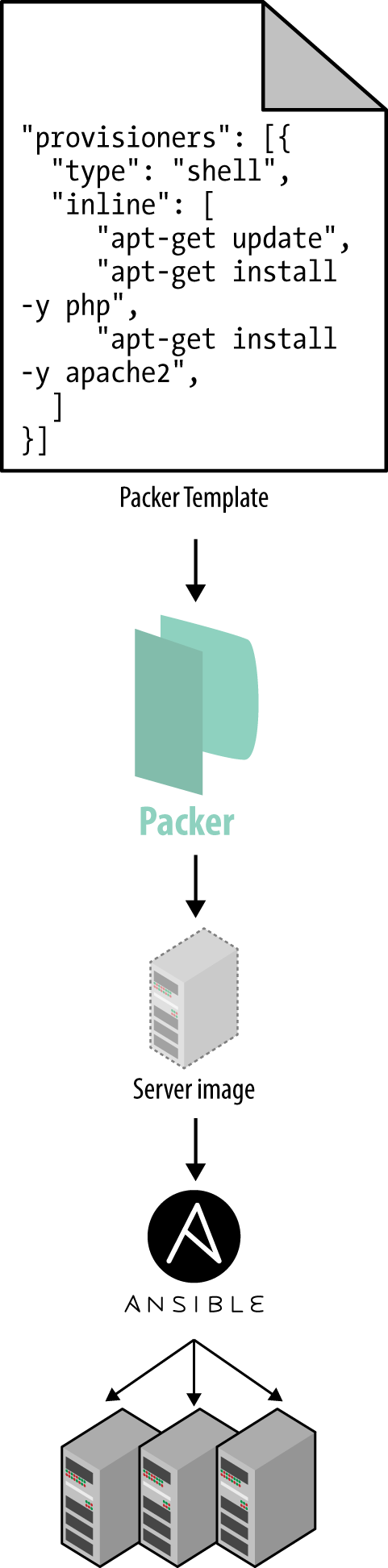

An alternative to configuration management that has been growing in popularity recently is to use server templating tools such as Docker, Packer, and Vagrant. Instead of launching a bunch of servers and configuring them by running the same code on each one, the idea behind server templating tools is to create an image of a server that captures a fully self-contained “snapshot” of the operating system (OS), the software, the files, and all other relevant details. You can then use some other IaC tool to install that image on all of your servers, as shown in Figure 3.

Figure 3. You can use a server templating tool like Packer to create a self-contained image of a server. You can then use other tools, such as Ansible, to install that image across all of your servers.

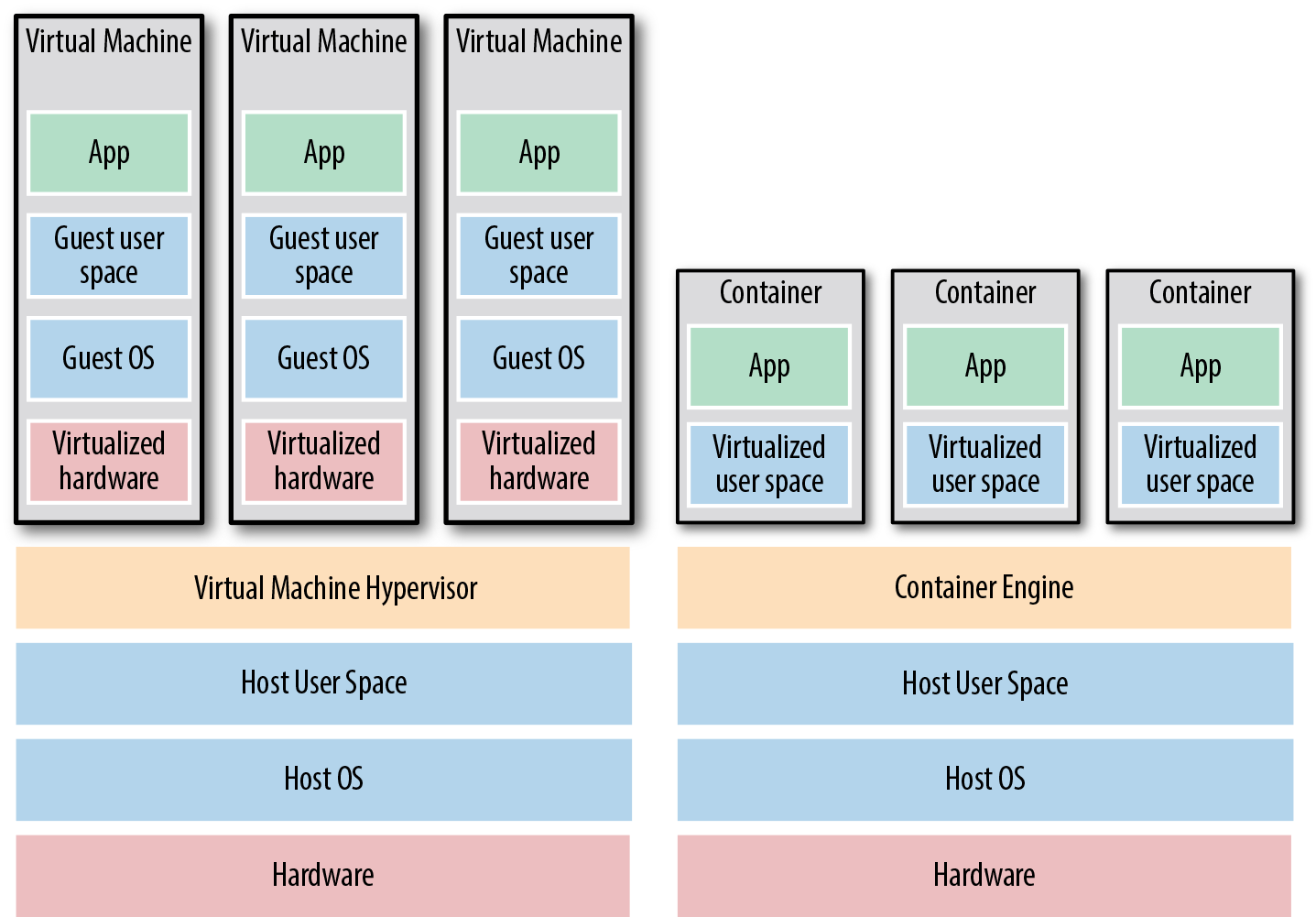

As shown in Figure 4, there are two broad categories of tools for working with images:

Virtual machines

A virtual machine (VM) emulates an entire computer system, including the hardware. You run a hypervisor, such as VMware, VirtualBox, or Parallels, to virtualize (i.e., simulate) the underlying CPU, memory, hard drive, and networking. The benefit of this is that any VM image that you run on top of the hypervisor can see only the virtualized hardware, so it’s fully isolated from the host machine and any other VM images, and it will run exactly the same way in all environments (e.g., your computer, a QA server, a production server). The drawback is that virtualizing all this hardware and running a totally separate OS for each VM incurs a lot of overhead in terms of CPU usage, memory usage, and startup time. You can define VM images as code using tools such as Packer and Vagrant.

Containers

A container emulates the user space of an OS. You run a container engine, such as Docker, CoreOS rkt, or cri-o, to create isolated processes, memory, mount points, and networking. The benefit of this is that any container you run on top of the container engine can see only its own user space, so it’s isolated from the host machine and other containers and will run exactly the same way in all environments (your computer, a QA server, a production server, etc.). The drawback is that all of the containers running on a single server share that server’s OS kernel and hardware, so it’s much more difficult to achieve the level of isolation and security you get with a VM. However, because the kernel and hardware are shared, your containers can boot up in milliseconds and have virtually no CPU or memory overhead. You can define container images as code using tools such as Docker and CoreOS rkt.

Figure 4. The two main types of images: VMs, on the left, and containers, on the right. VMs virtualize the hardware, whereas containers virtualize only user space.

For example, here is a Packer template called web-server.json that creates an Amazon Machine Image (AMI), which is a VM image that you can run on AWS:

    {
      "builders": [{
        "ami_name": "packer-example",
        "instance_type": "t2.micro",
        "region": "us-east-2",
        "type": "amazon-ebs",
        "source_ami": "ami-0c55b159cbfafe1f0",
        "ssh_username": "ubuntu"
      }],
      "provisioners": [{
        "type": "shell",
        "inline": [
          "sudo apt-get update",
          "sudo apt-get install -y php apache2",
          "sudo git clone https://github.com/brikis98/php-app.git /var/www/html/app"
        ],
        "environment_vars": [
          "DEBIAN_FRONTEND=noninteractive"
        ]
      }]
    }

This Packer template configures the same Apache web server that you saw in setup-webserver.sh using the same Bash code. The only difference between the preceding code and previous examples is that this Packer template does not start the Apache web server (e.g., by calling sudo service apache2 start). That’s because server templates are typically used to install software in images, but it’s only when you run the image (for example, by deploying it on a server) that you should actually run that software.

You can build an AMI from this template by running packer build web-server.json, and after the build completes, you can install that AMI on all of your AWS servers, configure each server to run Apache when the server is booting (you’ll see an example of this in the next section), and they will all run exactly the same way.

Note that the different server templating tools have slightly different purposes. Packer is typically used to create images that you run directly on top of production servers, such as an AMI that you run in your production AWS account. Vagrant is typically used to create images that you run on your development computers, such as a VirtualBox image that you run on your Mac or Windows laptop. Docker is typically used to create images of individual applications. You can run the Docker images on production or development computers, as long as some other tool has configured that computer with the Docker Engine. For example, a common pattern is to use Packer to create an AMI that has the Docker Engine installed, deploy that AMI on a cluster of servers in your AWS account, and then deploy individual Docker containers across that cluster to run your applications.

Server templating is a key component of the shift to immutable infrastructure. This idea is inspired by functional programming, in which variables are immutable: after you’ve set a variable to a value, you can never change that variable again. If you need to update something, you create a new variable. Because variables never change, it’s a lot easier to reason about your code.

The idea behind immutable infrastructure is similar: once you’ve deployed a server, you never make changes to it again. If you need to update something, such as deploy a new version of your code, you create a new image from your server template and you deploy it on a new server. Because servers never change, it’s a lot easier to reason about what’s deployed.

Orchestration Tools

Server templating tools are great for creating VMs and containers, but how do you actually manage them? For most real-world use cases, you’ll need a way to do the following:

- Deploy VMs and containers, making efficient use of your hardware.

- Roll out updates to an existing fleet of VMs and containers using strategies such as rolling deployment, blue-green deployment, and canary deployment.

- Monitor the health of your VMs and containers and automatically replace unhealthy ones (auto healing).

- Scale the number of VMs and containers up or down in response to load (auto scaling).

- Distribute traffic across your VMs and containers (load balancing).

- Allow your VMs and containers to find and talk to one another over the network (service discovery).

Handling these tasks is the realm of orchestration tools such as Kubernetes, Marathon/Mesos, Amazon Elastic Container Service (Amazon ECS), Docker Swarm, and Nomad. For example, Kubernetes allows you to define how to manage your Docker containers as code. You first deploy a Kubernetes cluster, which is a group of servers that Kubernetes will manage and use to run your Docker containers. Most major cloud providers have native support for deploying managed Kubernetes clusters, such as Amazon Elastic Kubernetes Service (Amazon EKS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS).

Once you have a working cluster, you can define how to run your Docker container as code in a YAML file:

    apiVersion: apps/v1

    # Use a Deployment to deploy multiple replicas of your Docker
    # container(s) and to declaratively roll out updates to them
    kind: Deployment

    # Metadata about this Deployment, including its name
    metadata:
      name: example-app

    # The specification that configures this Deployment
    spec:
      # This tells the Deployment how to find your container(s)
      selector:
        matchLabels:
          app: example-app

      # This tells the Deployment to run three replicas of your
      # Docker container(s)
      replicas: 3

      # Specifies how to update the Deployment. Here, we
      # configure a rolling update.
      strategy:
        rollingUpdate:
          maxSurge: 3
          maxUnavailable: 0
        type: RollingUpdate

      # This is the template for what container(s) to deploy
      template:
        # The metadata for these container(s), including labels
        metadata:
          labels:
            app: example-app

        # The specification for your container(s)
        spec:
          containers:
            # Run Apache listening on port 80
            - name: example-app
              image: httpd:2.4.39
              ports:
                - containerPort: 80

This file instructs Kubernetes to create a Deployment, which is a declarative way to define:

- One or more Docker containers to run together. This group of containers is called a Pod. The Pod defined in the preceding code contains a single Docker container that runs Apache.

- The settings for each Docker container in the Pod. The Pod in the preceding code configures Apache to listen on port 80.

- How many copies (aka replicas) of the Pod to run in your cluster. The preceding code configures three replicas. Kubernetes automatically figures out where in your cluster to deploy each Pod, using a scheduling algorithm to pick the optimal servers in terms of high availability (e.g., try to run each Pod on a separate server so a single server crash doesn’t take down your app), resources (e.g., pick servers that have available the ports, CPU, memory, and other resources required by your containers), performance (e.g., try to pick servers with the least load and fewest containers on them), and so on. Kubernetes also constantly monitors the cluster to ensure that there are always three replicas running, automatically replacing any Pods that crash or stop responding.

- How to deploy updates. When deploying a new version of the Docker container, the preceding code rolls out three new replicas, waits for them to be healthy, and then undeploys the three old replicas.

That’s a lot of power in just a few lines of YAML! You run kubectl apply -f example-app.yml to instruct Kubernetes to deploy your app. You can then make changes to the YAML file and run kubectl apply again to roll out the updates.
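The rolling update settings in the Deployment boil down to simple arithmetic. As a sketch, assuming maxSurge and maxUnavailable are given as absolute numbers (Kubernetes also accepts percentages, which this ignores), the hypothetical helper `rollout_bounds` below computes the most Pods that can exist during a rollout and the fewest that must stay available.

```shell
#!/usr/bin/env bash
# Illustrative sketch only: rollout_bounds is a hypothetical helper,
# not a kubectl command.
set -euo pipefail

# Print the Pod-count bounds implied by a rolling update configuration.
# Arguments: replicas, maxSurge, maxUnavailable (absolute numbers only).
rollout_bounds() {
  local replicas="$1" max_surge="$2" max_unavailable="$3"
  echo "max_total_pods=$((replicas + max_surge))"
  echo "min_available_pods=$((replicas - max_unavailable))"
}

# Values from the Deployment above: replicas 3, maxSurge 3, maxUnavailable 0.
rollout_bounds 3 3 0
```

For the values in the Deployment, this prints max_total_pods=6 and min_available_pods=3: Kubernetes may bring up all three new replicas alongside the three old ones, and it never drops below three available Pods while doing so.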

Provisioning Tools

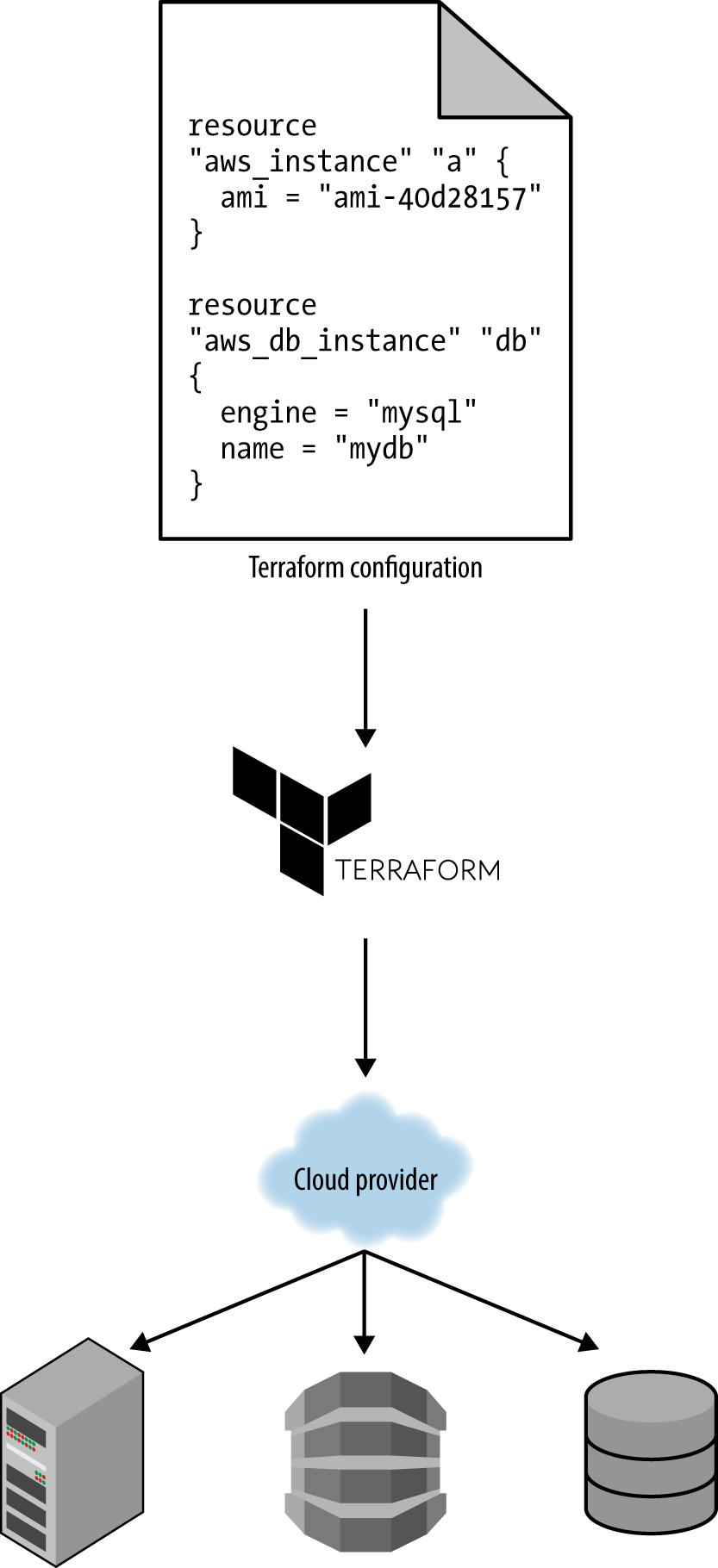

Whereas configuration management, server templating, and orchestration tools define the code that runs on each server, provisioning tools such as Terraform, CloudFormation, and OpenStack Heat are responsible for creating the servers themselves. In fact, you can use provisioning tools to not only create servers, but also databases, caches, load balancers, queues, monitoring, subnet configurations, firewall settings, routing rules, Secure Sockets Layer (SSL) certificates, and almost every other aspect of your infrastructure, as shown in Figure 5.

For example, the following code deploys a web server using Terraform:

    resource "aws_instance" "app" {
      instance_type     = "t2.micro"
      availability_zone = "us-east-2a"
      ami               = "ami-0c55b159cbfafe1f0"

      user_data = <<-EOF
                  #!/bin/bash
                  sudo service apache2 start
                  EOF
    }

Don’t worry if you’re not yet familiar with some of the syntax. For now, just focus on two parameters:

ami

This parameter specifies the ID of an AMI to deploy on the server. You could set this parameter to the ID of an AMI built from the web-server.json Packer template in the previous section, which has PHP, Apache, and the application source code.

user_data

This is a Bash script that executes when the web server is booting. The preceding code uses this script to boot up Apache.

In other words, this code shows you provisioning and server templating working together, which is a common pattern in immutable infrastructure.

Figure 5. You can use provisioning tools with your cloud provider to create servers, databases, load balancers, and all other parts of your infrastructure