Java has an amazing history, and not many languages that are still relevant today can claim to have been used for over twenty years. Over this time the language itself has evolved, partly to drive continual improvement in developer productivity, and partly to meet the requirements imposed by new hardware and architectural practices. Because of this long history, there are now many ways to deploy Java applications into production.

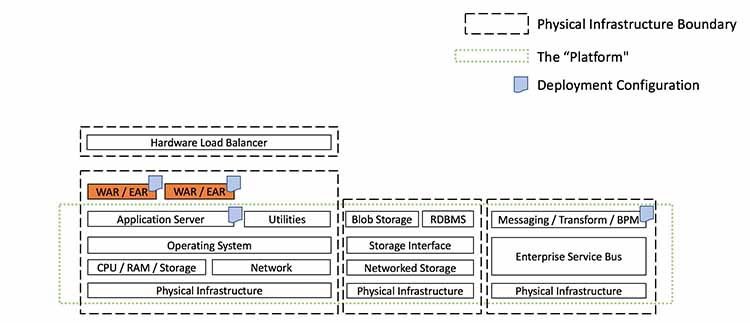

WARs and EARs: The Era of Application Server Dominance

The native packaging format for Java is the Java Archive (JAR) file, which can contain either library code or a runnable artifact. The initial best-practice approach to deploying Java 2 Platform, Enterprise Edition (J2EE) applications was to package code into a series of JARs. These often consisted of modules containing Enterprise JavaBeans (EJB) class files and EJB deployment descriptors. The JARs were then bundled into another specific type of JAR, which had a defined directory structure and a required metadata file.

This bundling produced one of two artifacts. A Web Application Archive (WAR) consisted of servlet class files, JSP files and supporting files. An Enterprise Application Archive (EAR) contained the required mix of JAR and WAR files for deploying a full J2EE application. The artifact was then deployed into a heavyweight application server (commonly referred to at the time as a “container”) such as WebLogic, WebSphere or JBoss EAP. These application servers offered container-managed enterprise features such as logging, persistence, transaction management and security.

Figure 1. The initial deployment of Java applications used WAR and EAR artifacts deployed into an application server that defined access to external platform services via JNDI.

Several lightweight application servers, such as Apache Tomcat, TomEE and Red Hat’s WildFly, also emerged in response to changing developer and operational requirements. At runtime, classic Java enterprise applications and Service-Oriented Architecture (SOA) were also typically supported by messaging middleware such as Enterprise Service Buses (ESBs) and heavyweight Message Queue (MQ) technologies.
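A JAR can hold library code or a runnable artifact, and the difference largely comes down to its manifest. A JAR that `java -jar` can launch names its entry point in the `Main-Class` attribute of `META-INF/MANIFEST.MF`. WAR and EAR files use the same archive format. The minimal sketch below uses only the JDK's `java.util.jar` API to build a JAR in memory with such a manifest, then reads the attribute back. The class name `com.example.App` and the placeholder entry are hypothetical; a real build tool would add compiled class files instead.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.jar.Attributes;
import java.util.jar.JarEntry;
import java.util.jar.JarInputStream;
import java.util.jar.JarOutputStream;
import java.util.jar.Manifest;

public class RunnableJarDemo {

    // Builds an in-memory JAR whose manifest names a Main-Class, which is
    // what allows `java -jar app.jar` to launch it directly.
    static byte[] buildJar(String mainClass) throws IOException {
        Manifest manifest = new Manifest();
        Attributes attrs = manifest.getMainAttributes();
        // Manifest-Version must be set for the other main attributes to be written.
        attrs.put(Attributes.Name.MANIFEST_VERSION, "1.0");
        attrs.put(Attributes.Name.MAIN_CLASS, mainClass);

        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        // JarOutputStream writes the manifest as the first entry.
        try (JarOutputStream jar = new JarOutputStream(bytes, manifest)) {
            // Hypothetical placeholder; a real build would add .class files here.
            jar.putNextEntry(new JarEntry("README.txt"));
            jar.write("placeholder payload".getBytes(StandardCharsets.UTF_8));
            jar.closeEntry();
        }
        return bytes.toByteArray();
    }

    // Reads the Main-Class attribute back; returns null for a library JAR.
    static String readMainClass(byte[] jarBytes) throws IOException {
        try (JarInputStream jar = new JarInputStream(new ByteArrayInputStream(jarBytes))) {
            Manifest manifest = jar.getManifest();
            return manifest == null
                    ? null
                    : manifest.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
        }
    }

    public static void main(String[] args) throws IOException {
        byte[] jar = buildJar("com.example.App");
        System.out.println("Main-Class: " + readMainClass(jar));
    }
}
```

A library JAR would simply omit the `Main-Class` attribute, and `readMainClass` would then return `null`.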

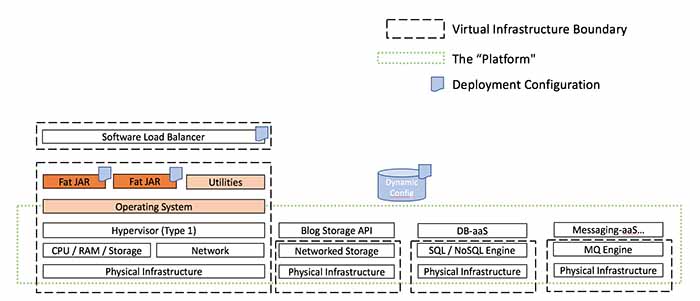

Executable Fat JARs: Emergence of Twelve Factor Apps

The next generation of cloud-friendly, service-based architectures emerged alongside open source and commercial Platform-as-a-Service (PaaS) offerings like Google App Engine and Cloud Foundry. As a result, deploying Java applications using lightweight and embedded application servers became popular. Technologies that emerged to support this included the embeddable Jetty web server and, later, embeddable editions of Tomcat. Application frameworks such as Dropwizard and Spring Boot soon began providing mechanisms through Maven and Gradle to package these application servers (for example, using the Maven Shade Plugin). The servers were embedded into a single deployable unit that can run as a standalone process, and so the executable “Fat JAR” was born.

Figure 2. The second generation of Java application deployment utilised executable “Fat JARs”, and followed the principles of the “Twelve Factor Application”, such as storing configuration within the environment.

The best practices for developing, deploying and operating this new generation of applications were codified by the team at Heroku as the “Twelve Factor App”.
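The Fat JAR and Twelve Factor ideas can be made concrete with a minimal sketch of an application that starts its own HTTP server in-process and reads its configuration from environment variables. To keep it dependency-free, it uses the JDK's built-in `com.sun.net.httpserver.HttpServer` as a stand-in for the embedded Jetty or Tomcat that Dropwizard or Spring Boot would provide. The `PORT` and `GREETING` variable names are hypothetical. The Maven or Gradle step that bundles everything into a single JAR is not shown.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.Map;

public class FatJarApp {

    // Twelve Factor style: configuration comes from the environment, with a
    // default for local development. PORT and GREETING are hypothetical names.
    static int port(Map<String, String> env) {
        return Integer.parseInt(env.getOrDefault("PORT", "8080"));
    }

    static String greeting(Map<String, String> env) {
        return env.getOrDefault("GREETING", "Hello from a self-contained JAR");
    }

    // Starts an HTTP server inside this process, so there is no separate
    // application server to deploy into (a stand-in for embedded Jetty/Tomcat).
    static HttpServer start(Map<String, String> env) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port(env)), 0);
        byte[] body = greeting(env).getBytes(StandardCharsets.UTF_8);
        server.createContext("/", exchange -> {
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start(); // uses the server's default executor
        return server;
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = start(System.getenv());
        System.out.println("Listening on port " + server.getAddress().getPort());
    }
}
```

The environment map is passed in rather than read directly. This lets the same code start with `System.getenv()` in production and with a fixed map in a test. Setting `PORT` to `0` asks the operating system for any free port.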

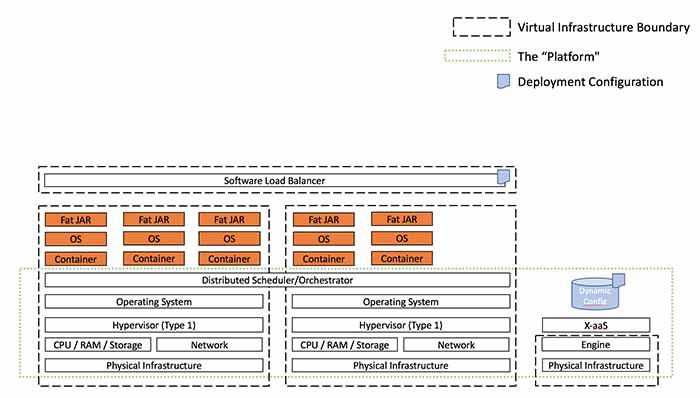

Container Images: Increasing Portability (and Complexity)

Although Linux container technology had been around for quite some time, the release of Docker in March 2013 brought it to the masses. At the core of containers are Linux technologies such as cgroups, namespaces and a (pivot) root filesystem. If Fat JARs extended the scope of traditional Java packaging and deployment mechanisms, containers have taken this to the next level. In addition to packaging your Java application as a Fat JAR, you must now include an operating system (OS) within your container image.

Figure 3. Deploying Java Applications as Fat JARs running within their own namespaced container (or Pod) requires developers to be responsible for packaging an OS within container images.

Due to the complexity and dynamic nature of running containers at scale, the resulting image is typically run on a container orchestration and scheduling platform like Kubernetes, Docker Swarm, or Amazon ECS.

Function-as-a-Service (FaaS): The Emergence of “Serverless”

In November 2014, Amazon Web Services launched a preview of AWS Lambda at re:Invent, its annual global conference in Las Vegas. AWS Lambda lets developers run code without provisioning or managing servers. This is commonly referred to as “serverless”, although servers are still required to run the application; the focus is typically on reducing the operational burden of running and maintaining the application’s underlying runtime and infrastructure. The development and billing model is also unique. Lambda functions are triggered by external events, which can include a timer, a user request via an attached AWS API Gateway, or an object being uploaded into the AWS S3 blobstore. You pay only for the time your function runs and the memory it consumes.

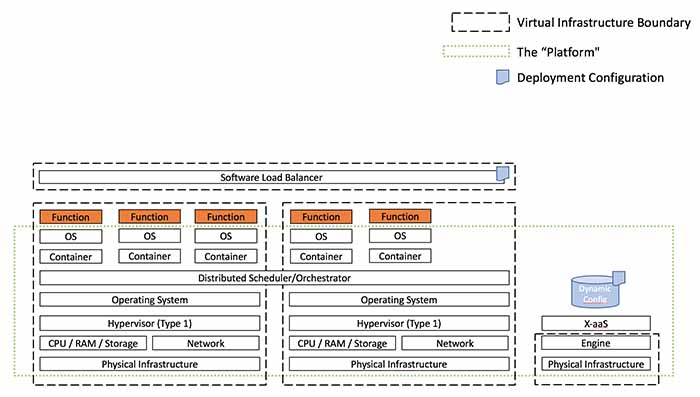

Figure 4. Deploying Java Applications via the Function-as-a-Service (FaaS) or serverless model. Code is packaged within a JAR or ZIP, which is then deployed and managed via the underlying platform (that typically utilises containers).

In June 2015 AWS Lambda added support for Java. With it, you return to the deployment requirement of a JAR or ZIP file containing Java code, which is uploaded to the AWS Lambda service.
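The event-driven model described above can be sketched as a plain Java handler method. This is a hypothetical example rather than AWS reference code. It assumes a simplified event map with `bucket` and `key` fields, whereas real S3 notifications nest these inside a `Records` array. It also omits the `aws-lambda-java-core` library, whose `RequestHandler` interface a production function would often implement. The `main` method only simulates an upload event locally; no AWS service is called.

```java
import java.util.Map;

public class UploadHandler {

    // Hypothetical handler method. On AWS Lambda the platform owns the process
    // and invokes the handler once per event; this class is a local sketch only.
    public String handleRequest(Map<String, Object> event) {
        // Assumes a simplified, hypothetical event shape with "bucket" and "key"
        // fields; real S3 notifications nest these inside a "Records" array.
        Object bucket = event.get("bucket");
        Object key = event.get("key");
        if (bucket == null || key == null) {
            throw new IllegalArgumentException("event must contain bucket and key");
        }
        return "Processed s3://" + bucket + "/" + key;
    }

    // Local smoke test that simulates an upload event; no AWS services are called.
    public static void main(String[] args) {
        Map<String, Object> event = Map.of("bucket", "example-bucket", "key", "photos/cat.jpg");
        System.out.println(new UploadHandler().handleRequest(event));
    }
}
```

Once packaged into a JAR or ZIP and uploaded, a class like this would be referenced by a handler string of the form `UploadHandler::handleRequest`. The platform then invokes the method for each triggering event, and you are billed only for those invocations.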

Impact of Platforms on Continuous Delivery

Developers often ask whether the packaging format required by the platform affects the implementation of continuous delivery. Our answer to this question, as with any truly interesting question, is “it depends”. The answer is “yes”, because the packaging format clearly affects how an artifact is built, tested and executed. This includes both the moving parts involved and the technical implementation of a build pipeline (and its potential integration with the target platform). However, the answer is also “no”, because the core concepts, principles and assertions of continuously delivering a valid artifact remain unchanged.
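The “yes and no” answer can be illustrated with a hypothetical pipeline definition. In it, the core stages are fixed and only the packaging stages vary with the target platform. The stage names and the `PipelineSketch` class are illustrative assumptions, not a prescribed pipeline.

```java
import java.util.ArrayList;
import java.util.List;

public class PipelineSketch {

    // The three packaging styles discussed in this section.
    enum PackagingStyle { FAT_JAR, CONTAINER_IMAGE, FAAS_FUNCTION }

    // Returns the ordered stages of a hypothetical build pipeline.
    // The core stages are identical for every style; only packaging varies.
    static List<String> stages(PackagingStyle style) {
        List<String> stages = new ArrayList<>();
        stages.add("compile");
        stages.add("unit test");

        // Packaging is the platform-specific part of the pipeline.
        switch (style) {
            case FAT_JAR:
                stages.add("package executable fat JAR");
                break;
            case CONTAINER_IMAGE:
                stages.add("package executable fat JAR");
                stages.add("build container image (fat JAR plus OS)");
                break;
            case FAAS_FUNCTION:
                stages.add("package JAR or ZIP for the FaaS platform");
                break;
        }

        // These stages are conceptually the same for every format, even though
        // how the deploy step talks to each platform would differ in practice.
        stages.add("deploy to test environment");
        stages.add("acceptance test");
        stages.add("mark as release candidate");
        return stages;
    }

    public static void main(String[] args) {
        for (PackagingStyle style : PackagingStyle.values()) {
            System.out.println(style + ": " + String.join(" -> ", stages(style)));
        }
    }
}
```

Running `main` lists the stages for each style. Only the packaging stages differ, while compilation, testing and the path to a release candidate stay the same.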

Throughout this blog we demonstrate core concepts at an abstract level, but we also provide concrete examples, where appropriate, for each of the three most relevant packaging styles: Fat JARs, container images, and FaaS functions.