Data science is a response to the difficulties of working with big data and other data analysis challenges we collectively face today. We examined this briefly in the introduction, but that was just scratching the surface. In fact, there is so much literature on big data that this whole chapter will still not be able to do it justice. It will, however, give you a good idea of its importance in today’s world. Furthermore, it will help you understand what all the hype is about big data (a hype that has increased significantly over the past year), and why data science is so important.

Big data is a fundamental asset for today’s businesses, and it is not a coincidence that the majority of businesses today are using, or are in the process of adopting, the corresponding technology. Despite all the hype about it in various media, this is not a fad. There are specific advantages to using this asset, and the fact that it is growing more abundant is an indication that it is imperative to do something about it, and do it fast! Perhaps it is not useful for certain industries right now as big data tends to be quite chaotic or even non-existent for them. Those who do have it and make intelligent use of it, though, reap its benefits and stand a good chance of being more successful in today’s competitive economic ecosystems.

Digging into Big Data

Big data is abundant and contains information that is relevant to the business problems at hand. If you are a manager of an e-commerce company, for example, the data you collect on your servers regarding your customers and the visitors to your site are rich with information that, when analyzed properly, can be used to increase your sales, enhance your site’s design, and improve your customer service. It can also provide you with ideas on marketing strategies and ways to improve your company’s overall strategy; all that from a bunch of ones and zeroes that dwell on your servers. You just need to extract the information from them, allocating a small part of your resources. Not a bad trade-off, for sure. We’ll come back to this example later on.

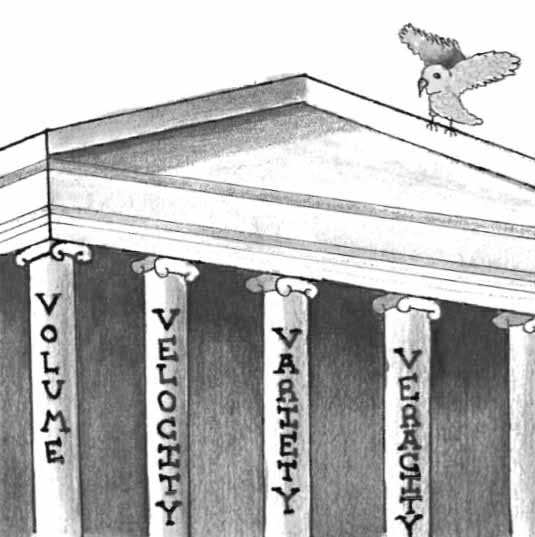

Not every amalgamation of data qualifies for the term big data, although most Web-related data falls under this umbrella. This is because big data is characterized by the four Vs.

Fig. 1 The four Vs of big data.

As we have already seen, these are:

- Volume – Big data consists of large quantities of data. This translates into several TB up to a few ZB. This data may be distributed across various locations, often in several computer networks connected through the Internet. Generally, any amount of data that is too large to be processed by a single computer satisfies the Volume criterion of big data. This alone is an issue that requires a different approach to data processing, something that gave rise to the parallel computing technology known as MapReduce.

- Velocity – Big data is also in motion, usually at high transfer speeds. It is often referred to as data streams, which are frequently too difficult to archive (the speed alone is a great issue, considering the limited amount of storage space a computer network has). That is why only certain parts of it are collected. Even if it were possible to collect all of it, it would not be cost effective to store big data for long, so the collected data is periodically jettisoned in order to save space, keeping only summaries of it (e.g., average values and variances). This problem is expected to become more serious in the near future as more and more data is being generated at higher and higher speeds.

- Variety – In the past, data used to be more or less homogeneous, which also made it more manageable. This is not the case with big data, which stems from a variety of sources and, therefore, varies in form. This translates into different structures among the various data sources and the existence of semi-structured and completely unstructured data. Structured data is what is found in traditional databases, where the structure of the content is predefined in fields of specified sizes. Semi-structured data has some structure, but it is not consistent (consider the contents of a JSON file, for example), making it difficult to work with. Even more challenging is unstructured data (e.g., plain text), which has no predefined structure whatsoever. In most cases big data is semi-structured, though its sources rarely share the same form. In the past few years, unstructured and semi-structured data have constituted the vast majority of all big data.

- Veracity – This aspect of big data is often neglected in the literature, partly because it is a relatively recent addition, although it is just as important as the other three. It has to do with how reliable the data is, something that is taken into account in the data science process. Veracity involves the signal-to-noise ratio, an important concept in information theory; in this context, it means figuring out what in the big data is valid for the business. Big data tends to have varied veracity, as not all of its sources are equally reliable. Increasing the veracity of the available data is a major big data challenge.
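To make the Volume point more concrete, here is a minimal sketch of the map, shuffle, and reduce steps behind the MapReduce idea, applied to a word count. It is only an illustration: everything runs in a single process, the function names (`map_phase`, `shuffle_phase`, `reduce_phase`) and the sample text are hypothetical, and a real framework would distribute the chunks across many machines.

```python
from collections import defaultdict
from itertools import chain


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle_phase(pairs):
    """Group all emitted values by their key (the word)."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Combine the values of each key into a single count."""
    return {key: sum(values) for key, values in grouped.items()}


# Hypothetical data: each string stands in for a chunk stored on a
# different machine. Here the chunks are simply processed one by one.
chunks = ["big data is big", "data science uses big data"]

mapped = chain.from_iterable(map_phase(chunk) for chunk in chunks)
word_counts = reduce_phase(shuffle_phase(mapped))
print(word_counts)
```

The map step only ever looks at one chunk at a time, which is why no single computer needs to hold the whole data set.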

Note that a piece of data may have one or more of these characteristics and still not be classified as big data. Big data has all four of these. Big data is a serious issue as it is not easy, even for a supercomputer, to manage it effectively, let alone perform a useful analysis of it.
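The Velocity characteristic mentions keeping only summaries of a data stream, such as average values and variances. One common way to do this without storing the raw values is Welford's online algorithm, sketched below. The class name `RunningSummary` and the sample page-load times are assumptions made for illustration; they do not come from any particular big data package.

```python
from dataclasses import dataclass


@dataclass
class RunningSummary:
    """Running count, mean, and variance of a stream (Welford's algorithm)."""

    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, value: float) -> None:
        """Fold one new observation into the summary."""
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    @property
    def variance(self) -> float:
        """Sample variance; 0.0 is returned as a placeholder for n < 2."""
        return self.m2 / (self.count - 1) if self.count > 1 else 0.0


def summarize(stream):
    """Consume a stream one value at a time, keeping only the summary."""
    summary = RunningSummary()
    for value in stream:
        summary.update(value)  # the raw value can be discarded after this
    return summary


# Hypothetical page-load times (in seconds) arriving one at a time.
page_load_times = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
summary = summarize(page_load_times)
print(summary.count, summary.mean, summary.variance)
```

Only three numbers are kept no matter how long the stream runs, which is what makes this kind of summary affordable when the raw data has to be jettisoned.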

In the example we started with, a typical set of data that you would encounter would have the following qualities:

- The volume of data would be very large, with a tendency to become larger, especially if your site monitors several aspects of its visitors’ behavior. This data may easily account for several TB a year.

- It would flow constantly as visitors come and go and new visitors pay a visit to your site. This translates to continuous network activity on your servers, which is basically a data stream from the Web flowing into your server logs.

- The data you would collect from your visitors would vary greatly, ranging from simple Web statistics (time spent on each page, time of the visit, number of pages visited, etc.) to text entered on the site (assuming you have some kind of review system, like most serious e-commerce sites) and several other types of data (e.g., ratings from customers for various products, transaction data, etc.).

- Naturally, not everything you observe on your site’s servers will be trustworthy. Some of your visitors may be bots sent by hackers or other users for shady purposes, while other visitors may be your competitors spying on you! Some visitors may have spelling errors in their reviews, or leave random or spam messages on the site for whatever reason. Even if you have some kind of filtering system, it is inevitable that your site will collect some useless data over time.

Based on all of the above observations, do you think that you are dealing with big data in this company or not? Why? If you have understood the above concepts, you should be confident in replying positively to this question. Each one of the bullet points describing the data situation in that company has to do with one of the Vs of big data.
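The variety and veracity of such site data can be sketched in a few lines. The example below assumes, purely for illustration, that the site logs each event as a line of JSON; the event types, field names, and the `normalize` helper are all hypothetical. Records of different shapes are mapped onto one common layout, and lines that cannot be parsed are dropped.

```python
import json

# Hypothetical log lines: each event type carries different fields,
# and one line is corrupt.
raw_events = [
    '{"type": "page_view", "visitor": "v1", "seconds_on_page": 42}',
    '{"type": "review", "visitor": "v2", "text": "Great product", "rating": 5}',
    '{"type": "purchase", "visitor": "v1", "amount": 19.99}',
    "not valid json",
]


def normalize(line):
    """Map one semi-structured log line onto a common layout.

    Returns None for lines that are not valid JSON objects.
    """
    try:
        event = json.loads(line)
    except json.JSONDecodeError:
        return None
    if not isinstance(event, dict):
        return None
    return {
        "type": event.get("type", "unknown"),
        "visitor": event.get("visitor"),
        # Whatever else the event carries is kept under one key.
        "details": {
            key: value
            for key, value in event.items()
            if key not in ("type", "visitor")
        },
    }


normalized = (normalize(line) for line in raw_events)
events = [event for event in normalized if event is not None]
print(len(events), "usable events out of", len(raw_events))
```

A real filtering system would need far more than a parse check (for example, bot and spam detection), but the principle of separating usable records from noise is the same.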

Big Data Industries

Naturally, not all industries are equally affected by the big data movement. Depending on how much they rely on data and how profitable information is to them, they may be looking at a goldmine or one more asset that can wait. Based on recent statistics, the following industries appear to have benefited, or are inclined to benefit the most from big data:

- Retail (particularly in terms of productivity boost)

- Telecommunications (particularly in terms of revenue increase)

- Consulting

- Healthcare

- Air transportation

- Construction

- Food products

- Steel and manufacturing in general

- Industrial instruments

- Automobile industry

- Customer care

- Financial services

- Publishing

- Logistics

Note that the benefit is not always directly related to the bottom line, but it is definitely of significant business value. For example, by employing big data technologies in healthcare, physicians can use previous data to gain a better understanding of the patients’ issues, yielding a better diagnosis and enabling them to take better care of their patients in general. This can eventually result in greater efficiencies in the medical system, translating into lower costs through the intelligent use of medical information derived from that data.

Another example comes from customer care, where big data can help companies learn from bad customer experiences. Through effective use of big data technologies, companies can gain a better understanding of what their customers like and don’t like in near real time. This can help them amend their strategies in dealing with these customers and give them insight into how to improve their services in the future.

Note that there are many other industries that have the potential for gaining from big data, but based on their current status, it is not a worthwhile option for them. For example, the art industry is still not big on big data, since the data involved in this field is limited to descriptions of artwork and, in some cases, digitized forms of these works of art. However, it is possible that this may change in the future depending on how the artists act. For example, if a certain gallery makes use of sensors monitoring the number of people who view a certain painting, and in combination with other data (e.g., number of people who bought tickets to the various exhibitions that hosted that painting), they could gradually build a large database that would contain data about the sensor readings, the ticket sales, and even the comments some people leave on the gallery’s blog about the various paintings. All this can potentially yield useful information about which pieces of art are more popular (and by how much), as well as what the optimum ticket prices should be for the gallery’s exhibitions throughout the year.

All this is great, but how is it of any real use to you? Well, higher profit margins and the potential to significantly boost productivity are not going to happen on their own. It is naïve to think that just installing a big data package and assigning it to an employee (even if they are a skilled employee) could result in measurable gains. In order to take advantage of big data, a company needs to hire qualified people who can undertake the task of turning this seemingly chaotic bundle of data into useful (actionable) information. This is the problem that all data scientists are asked to solve and one of the driving forces of all developments in the field that came to be known as data science.

Birth of Data Science

The field of data science resulted from the attempt to discover potential insights residing in big data and to overcome the challenges reflected in the four Vs described previously. This was made possible by the combination of several technological advances of modern computing: parallel computing, sophisticated data analysis processes (mainly through machine learning), and powerful computing at lower prices. What’s more, the continuously accelerating progress of IT infrastructure and technology will enable us to generate, collect, and process significantly more data in the not-so-distant future. Through all this, data science addresses the issues of big data on a technical level by applying the intelligence and creativity that go into the development and use of these technologies. As a result, big data becomes somewhat manageable and can provide at least enough useful information to make the whole process worthwhile.

It’s important to note that data science is not a fad, but something that is here to stay and bound to evolve rapidly. If you were an IT professional when the World Wide Web came about, you might have seen it as a luxury or a fad that wouldn’t catch on, but those who managed to see its real value and the potential it held made very lucrative careers out of it. Imagine being one of the first people to learn HTML, CSS and JavaScript, or one of the first to create digital graphics to be used for websites. It would be like holding a winning lottery ticket, especially if you were good at your job. This is the situation with data science today. It would probably not be so well-known if it weren’t for so many people writing about its benefits. Still, most professionals and many students are not aware of what data science really means.

If you assimilate the aforementioned facts about big data, you will understand that data science is the solution to a real problem that is only going to become more pronounced in the years to come. This problem, as mentioned earlier, is reflected in the four Vs of big data, the characteristics that make it difficult to deal with using conventional technologies. As technology is on its side, data science is bound to become more robust and more diverse in the coming decade or so. There are already some post-graduate programs making an appearance in the academic world, and there are plenty of respectable researchers writing papers on data science topics. This is not a coincidence. It shows a trend for the development of an infrastructure of knowledge and know-how that will nourish this field.

It is not very clear exactly when data science was born (there have been people working in this field as researchers for several decades), but the first conference where it received the spotlight took place in 1996 (“Data Science, Classification, and Related Methods” by IFCS). It wasn’t until September 2005, however, that the term “data scientist” first appeared in the literature. Specifically, in a report released that year, data scientists were defined as “the information and computer scientists, database and software engineers and programmers, disciplinary experts, curators and expert annotators, librarians, archivists, and others, who are crucial to the successful management of a digital data collection.” In June 2009, the importance of the role of the data scientist became more apparent when Nathan Yau’s article “Rise of the Data Scientist” was published in FlowingData. Since then, references to and literature on data science have increased rapidly. Just take a look at how many conferences are being organized for it nowadays, appealing to both academics and people in the industry! What’s more, as several large companies that are leaders in their sectors (e.g., Amazon) make use of data science in their everyday workflow, it is quite likely that this trend will continue. Also, as the role of the data scientist adapts to the ever-changing requirements of the data world, it has come to include more than the original responsibilities, such as the application of state-of-the-art data analysis techniques.

Key Points

- Big data is a recent phenomenon where there is a large quantity of data, in quick motion, varying from structured to unstructured (with everything else in between), and with different reliability levels. These characteristics are often referred to as the four Vs of big data: Volume, Velocity, Variety, and Veracity.

- Dealing with big data is a challenging problem due to these four Vs. Data science is our response to the challenges that big data represents.

- Data scientists are the people who make sense of big data. Through the use of state-of-the-art technologies and know-how, they manage to derive actionable information from it, usually in the form of a data product.

- Big data occurs in a variety of industries; taking advantage of it can have a profound effect on them in terms of productivity boost and revenue increase.

- Data science has been around for over two decades but has only recently taken off as the corresponding technology was developed (parallel computing, intelligent data analysis methods, and powerful computing at a very low cost).

- The role of the data scientist first made an appearance in the literature in 2005, while it started becoming quite popular in 2009. In an article in Harvard Business Review, data science was called the “sexiest” profession of the 21st century.

- Data science is expected to continue to grow in terms of business value, technology, available knowledge and know-how, and popularity in the years to come.

Some people add two more Vs to the four described in this chapter, variability and visibility, which refer to the fact that big data changes over time and is hardly visible to users.

One of the post-graduate data science programs, created by Berkeley, costs around $60,000, which is significantly more than the high-priced MBAs you see elsewhere. This is a clear indication that people in the academic world as well as in the industry are taking data science quite seriously.