McKinsey Global Institute published a report on big data in five industries, including healthcare, public sector, retail, manufacturing, and personal-location data. It found that big data generated tremendous value in each of these domains. Many believe that the innovative use of big data is crucial for leading companies to outperform their peers. In this blog, we’ll describe key big data analytical use cases in a variety of industries.

Retail and CPG

Retail is an important sector of our economy. It is also an industry that faces a high level of competition and is known for its low profit margin. It is estimated that a retailer using big data to the fullest extent could increase its operating margin by more than 60 percent. So, how are companies in retail businesses capturing the value of big data and exceeding competition?

Market Basket Analysis and Customer Profiling

Let’s take a quick look back at the 2002 science-fiction thriller Minority Report. For those who haven’t seen the movie, there are some interesting depictions of the futuristic shopping experience of the main character. Using a retinal scanner, the billboards on the walls of a subway station call out the names of people passing by. An advertisement for American Express shows the passerby’s name on an American Express card, with the Member Since field dynamically updated to reflect that person’s membership. A Guinness billboard speaks to Tom Cruise’s character as he walks by, saying, “Hey, John, you look like you could use a Guinness!” The most interesting example, however, is when Cruise’s character walks into a Gap store. A virtual shop assistant welcomes him back and asks if he enjoyed the shirts he had bought previously.

It might sound scary, but it’s more realistic than most people think. Advertisers are developing digital posters that will recognize people’s faces and respond if they are paying attention. Cameras attached to the billboards will scan to see whether passersby are looking and immediately change their display to keep them watching.

Some retailers in Europe have embedded cameras in the eyes of the mannequins that feed data into facial-recognition software like that used by police. It logs the age, gender, and race of passersby. Facial recognition technology has been around for some years, but only recently has it been used in retail because it is now affordable. Most retailers have a number of security cameras throughout their stores, but these new cameras that utilize face-recognition technology and incorporate it into mannequins could provide more accurate data because they’re positioned at eye level and are much closer to the passing consumers.

The systems can also detect frowning or nodding to determine the interest at the display from the potential shoppers. The retailers are using these techniques to enhance the shopping experience, to improve product assortment, and to help brands better understand their customers. As a result of such analysis and insight, an apparel store introduced a children’s line after the results showed that kids made up more than half its mid-afternoon traffic. Another store found that a third of the visitors using one of its doors after 4 P.M. were Asian, prompting it to place Chinese-speaking staff by that entrance. The company that developed this technology is now testing a new product that recognizes words to allow retailers to eavesdrop on what shoppers say about the mannequin’s attire. They also plan to add screens next to the mannequin to prompt customers about products relevant to their profile, much like cookies and pop-up ads on a web site.

While some believe this raises legal and ethical issues as well as privacy concerns, customer profiling has been around for a long time. The most celebrated use case in this category has to be the Target pregnant teen story.

A man walked into a Target in the Minneapolis area and demanded to talk to the manager. He was apparently angry and was holding coupons that had been addressed to his daughter. “My daughter got this in the mail!” he said. “She’s still in high school, and you’re sending her coupons for baby clothes and cribs? Are you trying to encourage her to get pregnant?”

The manager looked at the coupon book that was sent to the man’s daughter. The mailer contained advertisements for maternity clothing, nursery furniture, and pictures of smiling babies. The manager was at a loss and apologized to the customer. He then called a few days later to apologize again. On the phone, though, the father was somewhat embarrassed. “I had a talk with my daughter,” he said. “It turns out there’s been some activities in my house I haven’t been completely aware of. She’s due in August. I owe you an apology.”

For years, retailers have been mining customer demographic information, point-of-sale (POS) data, and loyalty program history to understand shopping habits. The traditional demographic information that most retail stores collect includes age, marital status, household size (such as the number of children and their ages), approximate distance from the shopper’s residence to the closest store, estimated household income, recent moving history, credit cards frequently used, and recently visited web sites. In addition to the demographic information they collect every time a shopper visits the store, shops online, or calls customer service, most retailers buy enrichment data on shoppers’ ethnicity; job history; the magazines they read; credit history; the year they bought (or lost) their house; education status; social media feeds; personal and brand preferences for a variety of products including coffee, paper towels, cereal, or applesauce; political orientation; reading habits; charitable giving; and the number and makes of cars owned.

In the case of the pregnancy prediction, Target was able to identify 25 products (such as prenatal vitamins, cocoa butter lotion, diaper-size bag, maternity clothes) that, when analyzed together, could generate a “pregnancy score” for each of the shoppers. And that score has been proven to be incredibly accurate. More importantly, it would also allow them to estimate her due date to within a small window so Target could send coupons timed to specific stages of her pregnancy.
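One way to picture how a handful of indicator products can be combined into a single score is a weighted sum passed through a logistic function. The sketch below is illustrative only: the weights, the bias, and the function name `pregnancy_score` are assumptions made for this example, and it is not a description of Target's actual model.

```python
import math

# Hypothetical weights for four of the indicator products named in the text.
# The values are made up for illustration and are not taken from any real model.
INDICATOR_WEIGHTS = {
    "prenatal vitamins": 2.0,
    "cocoa butter lotion": 1.0,
    "diaper-size bag": 0.5,
    "maternity clothes": 2.5,
}
BIAS = -3.0  # assumed baseline so that a basket with no indicators scores low


def pregnancy_score(purchases):
    """Return a score between 0 and 1 from the products a shopper bought."""
    bought = set(purchases)
    total = BIAS + sum(w for item, w in INDICATOR_WEIGHTS.items() if item in bought)
    return 1.0 / (1.0 + math.exp(-total))  # logistic function


if __name__ == "__main__":
    print(round(pregnancy_score(["cereal"]), 3))
    print(round(pregnancy_score(
        ["prenatal vitamins", "maternity clothes", "cocoa butter lotion"]), 3))
```

In practice the weights would be learned from historical purchase data rather than set by hand, and the same idea extends to all 25 products.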

The game-changer in this case is the ability to integrate various data sources and allow for ever-narrower segmentation of customers and therefore much more precisely tailored products or services.

In addition to market basket analysis and customer profiling, there are many other innovative ways retailers are unlocking the big data value.

Demand Forecast

Demand forecast is another area where retailers are trying to better understand customers through the smart use of new data sources. As we discussed earlier in this blog, a surge in online shopping during the 2013 holiday season left stores breaking promises to deliver packages by Christmas, suggesting that retailers and shipping companies still don’t fully understand consumers’ buying patterns in the Internet era. In addition, new business models are making accurate and timely demand forecast an even more critical success factor.

For example, one well-known sports apparel manufacturer and retailer is changing its go-to-market approach from selling individual items to assortment-based merchandising. In other words, instead of selling shoes and T-shirts separately, it is switching to a model that sells an entire outfit, including matching shoes, socks, hats, shirts, and pants or shorts. The assortments could be sports themed, location based, and season oriented. This changes what, where, and how data is to be sourced and applied in the business processes. A variety of new data sources will be integrated into the system, including social media and survey feedback on new product and assortment development, store layout maps for efficient display planning as well as foot traffic analysis, weather information, and location data with residents’ demographics, local sports teams, and event schedules, all of which help determine and maximize operational efficiency in inventory, manufacturing, and logistics planning and management.

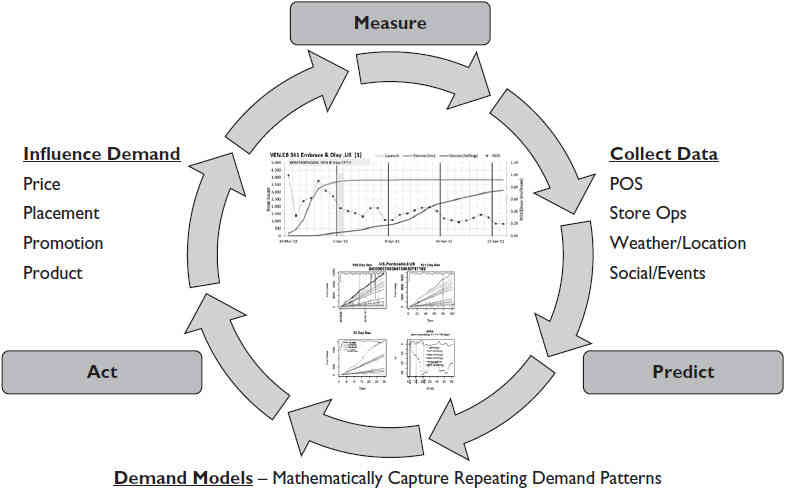

Figure 1 describes the demand forecast model used at a CPG company to predict the success of various digital initiatives and launches of new products.

FIGURE 1. Sample demand forecast model

Location-Based Marketing

The prevalence of smartphones gives rise to location-based marketing. It targets consumers who are close to stores or already in them. Some retailers have developed mobile applications for iOS and Android devices that integrate with their loyalty management program. The customers can download these applications and use them to store digital coupons, keep loyalty cards, and scan product bar codes to compare prices as well as obtain more information about the product details and manufacturer information. Retailers have also teamed up with mobile notification software providers for real-time customer engagement. These platforms can be implemented by distributing mobile beacons that can be plugged into any electrical outlet and transmit inaudible sound signals in stores. Some retailers alternatively have chosen to distribute signals through their existing PA systems. These methods allow retailers to track the customer movements in the store or a shopping mall with accuracy to the exact aisle they are in. This allows customers to receive deals sent to these mobile applications on their smartphones, enabling retailers to upsell customers with in-store conversion tools. It can also provide a channel for retailers to sell advertising to partners to target users while they are shopping.

There are similar location-based marketing use cases in the “Telecommunication” section we’ll discuss in more detail next.

Telecommunication

Documents leaked by National Security Agency (NSA) contractor Edward Snowden indicate that the NSA is collecting 5 billion phone records a day. That’s nearly 2 trillion records a year. The NSA gathers location data from around the world by tapping into the cables that connect mobile networks globally and that serve U.S. cell phones as well as foreign ones. This recent revelation has stirred up controversy over privacy. It also brought awareness to the general public regarding location tracking. Cell phones broadcast locations even when they are not in use. They will reveal your location even if you have your GPS or location service turned off.

According to McKinsey, overall personal location data totals more than 1 petabyte per year globally, and users of services enabled by personal-location data could capture $600 billion in consumer surplus as a result of price transparency and a better match between products and consumer needs.

Location-Based Promotion

Location-based promotion, also known as geofencing (when smartphone users enter a certain area), uses geolocation information derived from passive network location updates to identify potential customers for the geomarketing campaigns of mobile advertisers. The main business goals are to increase campaign and advertisement response, maximize offer conversion, and reduce cost per acquisition (CPA).
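At its simplest, a geofence check is a distance test between a device's reported position and the center of the target area. The following is a minimal sketch that assumes a circular fence and latitude/longitude inputs in degrees; the function names and the sample coordinates are hypothetical, and a production system would work from network location updates rather than exact coordinates.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def in_geofence(device, center, radius_m):
    """True if the device position lies within radius_m of the fence center."""
    return haversine_m(*device, *center) <= radius_m


if __name__ == "__main__":
    store = (41.00, 29.00)      # hypothetical store location
    subscriber = (41.01, 29.00)  # roughly 1.1 km north of the store
    print(in_geofence(subscriber, store, 500))
    print(in_geofence(subscriber, store, 1500))
```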

The main data sources used for this type of analytical applications include cellular network signaling, base station data, GPS-enabled devices, Wi-Fi, hotspots, and IP data. Some vendors also use additional data sets to improve accuracy, including billing data, census data with enriched demographic information, and call record data to understand social connections.

Sample geomarketing scenarios include port-of-departure, retail time-sensitive, and retail time-insensitive product offers. Port-of-departure use cases target customers who are in a location that can be identified as a travel hub, where customers may be interested in travel-related products, such as travel insurance. The most critical element for this use case is the correct filtering of false positives, such as people working or living close to the travel hub or passing through the area on a regular basis. Time-sensitive retail advertisement use cases target customers in close vicinity of a particular venue, such as restaurants. The advertisement is a short-term offer to drive traffic to the venue and requires accurate time and location determination. Time-insensitive retail advertisement use cases target customers who travel in the proximity of a retail outlet. This type of campaign runs over longer periods and is broken into two phases: a capture phase and a redeem phase. Advertisements are delivered only during the capture phase when customers are near the retail outlet and could reach a large number of eligible subscribers.
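The false-positive filtering described for the port-of-departure case can be approximated with a simple frequency rule: a subscriber seen at the travel hub on many distinct days is more likely a commuter or resident than a traveler. The sketch below assumes a 30-day window and a threshold of three days; both values and the function names are hypothetical choices for illustration.

```python
from datetime import date, timedelta


def is_regular_visitor(sighting_days, today, window_days=30, max_days=3):
    """True if the subscriber was seen at the hub on more than max_days
    distinct days within the last window_days days."""
    recent = {d for d in sighting_days if 0 <= (today - d).days < window_days}
    return len(recent) > max_days


def eligible_for_travel_offer(sighting_days, today):
    """Offer only to subscribers seen at the hub today who are not regulars."""
    return today in sighting_days and not is_regular_visitor(sighting_days, today)


if __name__ == "__main__":
    today = date(2014, 1, 15)
    commuter = [today - timedelta(days=n) for n in range(10)]  # seen 10 days running
    traveler = [today]                                         # seen only today
    print(eligible_for_travel_offer(commuter, today))
    print(eligible_for_travel_offer(traveler, today))
```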

Because of privacy restrictions, mobile customers need to consent to have location data used for marketing purposes, and customers can opt out at any time.

Real-time event processing and analytics enable cell service providers (CSPs) to track a customer’s location, and when a customer enters a certain area, the CSP can correlate that location with demographic, usage, and preference data to create targeted offers and promotions for CSP and partner services. CSPs can also analyze a subscriber’s mobile network location data over a certain period to look for patterns or relationships that would be valuable to advertisers and partners.

One telecommunication company that has successfully implemented this type of solution is Turkcell, which realized that the GSM network presents many opportunities to provide real-time business recommendations with its data. The ability to immediately recognize the key event patterns for call activations with associated locations could enable new ways to enhance customer satisfaction by offering targeted services and promotions, when and where relevant. This is not without challenges because of a wealth of streaming telecommunication elements and different data representations. Turkcell developed analytical applications to capture real-time streaming information immediately from its GSM network for identifying subscriber events from mobile devices with a GeoSpatial and SMS text message with temporal relevance. This enables Turkcell to offer a wide range of new, valuable, and interesting services delivered when they are most applicable. These applications improved customer satisfaction and introduced a wide array of revenue opportunities through the ability to predict trends based on changing locations in association with real-time external conditions such as weather, traffic, and events.

Fraud Prevention

Communications fraud, or the use of telecommunication products or services without intention to pay, is a major issue for telecommunication organizations. The practice is fostered by prepaid card usage and is growing rapidly. Anonymous network-branded prepaid cards are a tempting vehicle for money launderers, particularly since these cards can be used as cash vehicles—for example, to withdraw cash at ATMs. It is estimated that prepaid card fraud represents an average loss of $5 per $10,000 in transactions. For a communications company with billions of transactions, this could result in millions of dollars lost through fraud every year.
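The arithmetic behind that estimate is easy to check. The sketch below applies the quoted loss rate of $5 per $10,000 to an annual transaction value of $10 billion; the $10 billion figure is an assumption chosen for illustration, not a number from any particular company.

```python
LOSS_PER_10K = 5  # dollars lost to fraud per $10,000 in transactions (from the text)


def expected_fraud_loss(transaction_value):
    """Expected annual fraud loss in dollars for a given transaction value."""
    return transaction_value * LOSS_PER_10K / 10_000


if __name__ == "__main__":
    annual_value = 10_000_000_000  # hypothetical: $10 billion in prepaid transactions
    print(f"${expected_fraud_loss(annual_value):,.0f}")
```

A loss rate of 0.05 percent sounds small, but at this scale it works out to $5 million a year.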

Naturally, telco companies want to combat communications fraud and money laundering by introducing advanced analytical solutions to monitor key parameters of prepaid card usage and issue alerts or block fraudulent activity. This type of fraud prevention would require extremely fast analysis of petabytes of uncompressed customer data to identify patterns and relationships, build predictive models, and apply those models to even larger data volumes to make accurate fraud predictions.

Companies are deploying real-time analytical solutions to monitor key parameters and usage, such as call detail records (CDRs), usage data, location, and prepaid card top-ups. They then issue alerts or block fraudulent activity. Data discovery tools are used to explore questions such as “why” and “what if” to detect and identify anomalies and detect recurring fraud patterns.

The same telecommunication company mentioned earlier, Turkcell, has developed this type of analytical solution that enables it to complete antifraud analysis for large amounts of call-data records in just a few hours. It is able to achieve extreme data analysis speed through a row-wise information search, including day, time, and duration of calls, as well as number of credit recharges on the same day or at the same location. The system enables analysts to detect fraud patterns almost immediately. Further, the architecture design allows Turkcell to scale the solution as needed to support rapid communications data growth.
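One of the signals mentioned above, the number of credit recharges on the same day at the same location, can be expressed as a simple counting rule. This is a minimal sketch with an assumed record layout (`card_id`, `day`, `location`) and an assumed threshold of three; it is not Turkcell's implementation, and a real system would combine many such signals.

```python
from collections import Counter


def flag_suspicious_topups(topups, max_per_day_location=3):
    """Return the sorted card IDs that were recharged more than
    max_per_day_location times on the same day at the same location."""
    counts = Counter((t["card_id"], t["day"], t["location"]) for t in topups)
    return sorted({card for (card, _, _), n in counts.items()
                   if n > max_per_day_location})


if __name__ == "__main__":
    # Hypothetical top-up records.
    topups = (
        [{"card_id": "A", "day": "2014-01-15", "location": "cell-7"}] * 5
        + [{"card_id": "B", "day": "2014-01-15", "location": "cell-7"}] * 2
    )
    print(flag_suspicious_topups(topups))
```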

Machine to Machine

Machine to machine (M2M) devices and applications generate a wealth of usage, telemetry, and sensor data that can be monetized and analyzed. Enabling these capabilities requires comprehensive analytics and big data technologies ranging from operational reporting, analytics, and BI to big data and real-time event processing in order to capture the high-volume and high-velocity event data from M2M devices for analysis.

Real-time event processing is seen as a major opportunity for the next generation of M2M applications. This refers to the scenario when several data sources contribute to a decision process without human intervention. Such data sources include sensor and telemetry data from M2M-enabled devices, including smart meters, fleet management, manufacturing, automotive, home automation, and various data sources from operations of a smart city. Big data technologies store and analyze this sensor and telemetry data and are an important part of the M2M application development platform from device to data center.

Here are some examples of M2M applications: One large auto manufacturer is increasing data scale to include 13 years of dealer history. It also added new data sources, including telematics data, from its new hybrid vehicles. The manufacturer uses an analytical solution with these data sources to prevent and catch problems early. This allows the manufacturer to recommend what service is due and which dealer to choose based on dealer service history. It also enables its customers to perform social media interaction with the share function built in to the communication module in the cars.

Another example comes from the navigation and communication branch of a large auto manufacturer. By the nature of its business, the branch collects large amounts of telemetry data. With customer consent, it is developing applications and data services that enable potential customers, such as rental car companies, to improve fleet management and speed up car return processes.

Network Operations: DPI Analysis

The voluminous nature of network data has meant that traditional network performance management and optimization and planning tools are typically the domain of niche vertical industry software platforms from network equipment providers (NEPs) or niche Internet service providers (ISPs). The introduction of big data analytics opens the door to general-purpose analytics and commercial off-the-shelf (COTS) analytics platforms in the network domain. CSPs can now identify trends in networks with the potential of overcapacity and undercapacity by analyzing across different attributes, including type of network traffic, geography, events, key dates, and time of day.

Big data analytic applications enable fast processing of a high volume of raw network data. They enable CSPs to analyze network capacity, outages, and congestion, as well as plan for growth in network capacity where it is most needed. In addition, they enable CSPs to analyze and determine root cause for faults and outages. Data discovery tools enable CSPs to explore questions such as “why” and “what if” and bring new agility to business intelligence.

Streaming capabilities are also enabling CSPs to process and analyze raw network data in real time, detect outages and congestion, and act on these events. They can be applied to different aspects of network operations, including capacity and coverage planning, network utilization management and operations, new service introduction, network traffic management, and congestion prevention.

Various data sources used in improving network operations include call detail records, network logs, signaling logs, deep packet inspection (DPI), network element (NE) logs and counters, element management system (EMS), and various network management system (NMS) logs.
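The capacity analysis described in this section, finding parts of the network that tend toward overcapacity or undercapacity by attributes such as time of day, can be sketched as a group-and-average step over utilization samples. The tuple layout, the 80 and 20 percent thresholds, and the labels below are assumptions for illustration only.

```python
from collections import defaultdict
from statistics import mean


def classify_cells(samples, high=0.8, low=0.2):
    """Group (cell_id, hour, utilization) samples by cell and hour, then
    label groups whose average utilization is at or beyond a threshold."""
    by_key = defaultdict(list)
    for cell_id, hour, utilization in samples:
        by_key[(cell_id, hour)].append(utilization)

    labels = {}
    for key, values in by_key.items():
        avg = mean(values)
        if avg >= high:
            labels[key] = "congested"
        elif avg <= low:
            labels[key] = "underused"
    return labels


if __name__ == "__main__":
    # Hypothetical utilization samples as fractions of capacity.
    samples = [
        ("cell-1", 18, 0.90), ("cell-1", 18, 0.85),
        ("cell-1", 3, 0.10), ("cell-1", 3, 0.15),
        ("cell-2", 18, 0.50),
    ]
    print(classify_cells(samples))
```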

Financial Services

Similar to the retail industry, financial services organizations have long realized the importance of data and have been an innovative force in analytics and insights. Much of data architecture work and discussions we have had have been with financial companies in the areas of master data management, data integration architecture, and data governance. Even with higher profit margins and deeper pockets, there still have been compromises in terms of the capabilities of historic data storage and limitations in the ability to incorporate unstructured data. Big data has permanently changed the landscape with drastically lower storage costs and new tools for text enrichment functionalities.

Big data analytics use cases in financial services typically fall into one of the following four areas: customer insight, product innovation, company insight, or loss prevention. Customer-focused use cases include credit worthiness analysis, customer profiling and targeting, portfolio management, and collections. Product innovation helps determine bank products and bundles, insurance offers, money management portfolio development, and trading strategies. Loss prevention is a key area where analytics can be applied in different aspects to detect payment fraud and mortgage fraud and to measure and mitigate various risk areas including reputation and liquidity internally and for counterparties. Last but not least, company insight analytics can enhance brand awareness, monitor employee sentiment, and improve compliance management in insider trading detection and investigation to ensure the alignment of fiduciary responsibilities of a company and its employees.

Reputation Management

Let’s first take a look at a use case for compliance and reputation management. One company we studied has been trying to prevent the extension of financing to corporate or wealth management clients with questionable reputations on environmental issues, ethical lapses, or regulatory malfeasance. However, it has faced difficulties in processing unstructured data from multiple sources as well as analyzing large volumes of data in a timely manner.

With new capabilities in unstructured data integration with their compliance engine and text enrichment, this company is now able to capture data from thousands of third-party sources, such as print media, government agencies, nongovernment organizations (NGOs), think tanks, web sites, newsletters, social media, and blogs, to detect controversial information and compliance issues at companies and other large institutions. The company is now able to provide standardized information and user experience across departments; this allows financial and risk analysts to vet clients to ensure their reputational risk profile falls in line with the bank’s developing reputational risk posture and to obtain a 360-degree view of a company in the process of deciding whether they want to do business with them.

Fraud Prevention

Large financial institutions play a critical role in detecting financial criminal and terrorist activity. However, their ability to effectively meet these requirements is affected by the following challenges:

- The expansion of anti–money laundering laws to include a growing number of activities, such as gaming, organized crime, drug trafficking, and the financing of terrorism

- The ever-growing volume of information that must be captured, stored, and assessed

- The challenge of correlating data in disparate formats from a multitude of sources

Their IT systems need to provide abilities to automatically collect and process large volumes of data from an array of sources, including currency transaction reports (CTRs), suspicious activity reports (SARs), negotiable instrument logs (NILs), Internet-based activity and transactions, and much more. Detecting fraud was once a cumbersome and difficult process that required sampling a subset of data, building a model, finding an outlier that breaks the model, and rebuilding the model. Now, more and more large financial organizations are moving to an approach that allows them to capture and analyze unlimited volumes of unstructured data, integrate with billions of records, and build models based on an entire universe of data rather than a subset, making sampling obsolete. They can examine every incidence of fraud going back many years for every single person or business entity for fraud detection and prevention as well as credit and operational risk analysis across different lines of business, including home loans, insurance, and online banking.

Personalized Premium and Insurance Plan

In an effort to be more competitive, insurance companies have always wanted to offer their customers the lowest possible premium, but only to those who are unlikely to make a claim, thereby optimizing their profits. One way to approach this problem is to collect more detailed data about individual driving habits and then assess their risk.

In fact, some insurance companies are now starting to collect data on driving habits by utilizing sensors in their customers’ cars. The sensors capture driving data, such as routes driven, miles driven, time of day, and braking abruptness. As you would expect, these insurance companies use this data to assess driver risk. They compare individual driving patterns with other statistical information, such as average miles driven in an area and peak hours of drivers on the road. This is correlated with policy and profile information to offer a competitive and more profitable rate. The result is a personalized insurance plan. These unique capabilities, delivered from big data analytics, are revolutionizing the insurance industry.
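To make the idea concrete, the sketch below turns the sensor measures mentioned above (miles driven, time of day, braking abruptness) into a risk figure, compares mileage against an area average, and scales a base premium accordingly. Every weight, the clamping range of 0.7 to 1.5, and the function names are hypothetical; real pricing models are far more involved and subject to regulation.

```python
def driver_risk(miles, night_miles, hard_brakes, area_avg_miles):
    """Combine sensor measures into a single risk figure (higher is riskier)."""
    if miles <= 0:
        return 0.0
    mileage_ratio = miles / area_avg_miles   # driving more than the local average
    night_share = night_miles / miles        # share of miles driven at night
    brakes_per_100 = 100 * hard_brakes / miles  # abrupt braking events per 100 miles
    # Hypothetical weights chosen only for illustration.
    return 0.5 * mileage_ratio + 1.0 * night_share + 0.2 * brakes_per_100


def personalized_premium(base_premium, risk):
    """Scale the base premium by the risk figure, clamped to 0.7x-1.5x."""
    factor = min(1.5, max(0.7, risk))
    return round(base_premium * factor, 2)


if __name__ == "__main__":
    careful = driver_risk(miles=500, night_miles=0, hard_brakes=0, area_avg_miles=1000)
    risky = driver_risk(miles=2000, night_miles=1000, hard_brakes=100, area_avg_miles=1000)
    print(personalized_premium(1000, careful))
    print(personalized_premium(1000, risky))
```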

To accomplish this task, a great amount of continuous data must be collected, stored, and correlated. This automobile sensor data must be integrated with master data and certain reference data, including customer profile information, as well as weather, traffic, and accident statistics. With new tools to integrate and analyze across all these data sources, it is possible to address the storage, retrieval, modeling, and processing sides of the requirements.

Portfolio and Trade Analysis

When a leading investment bank tried to do some portfolio analysis 18 months ago, it faced challenges with the large volumes of data that its data scientists wanted to use. The firm was limited to using smaller sample sets. The desire to work with large volumes and varieties of data from all angles remained a lofty goal until recently, when the bank looked into a schema-less data design and data model approach. Now the data scientists can incorporate market events in a timely manner and discover how they correlate with performance characteristics. They are able to reconcile the front-office trading activities with what is occurring in the back office and determine what went wrong in real time, instead of days, weeks, or months later. Ultimately, they have a scalable solution for portfolio analysis.

The U.S. securities markets have undergone a significant transformation over the past few decades, particularly in the last few years. Regulatory changes and technological advances have contributed to a tremendous growth in trading volume and the further distribution of order flow across multiple exchanges and trading platforms. Moreover, securities often trade on various dispersed markets, including over-the-counter (OTC) instruments. Additionally, products of a similar nature and objective are traded on different markets. The Securities and Exchange Commission (SEC) Rule 613, called Consolidated Audit Trail (CAT), was approved in 2012 to serve as a comprehensive audit trail that allows regulators to more efficiently and accurately track activity in National Market System (NMS) securities throughout the U.S. markets. The CAT is being created by a joint plan of the 18 national securities exchanges and FINRA, collectively known as the Self-Regulatory Organizations (SROs). As a result, the SEC is building a trading activity analysis and repository system for CAT. The main objective of this system is to assist the efforts to detect and deter fraudulent and manipulative acts and practices in the marketplace and to help the SROs regulate their markets and members. In addition, it is intended to benefit the commission in its market analysis efforts, such as simulating the market effect of proposed policy changes, investigating market reconstructions, and understanding causes of unusual market activity. Further, this solution enables the timely pursuit of potential violations, which could play a great role in successfully seeking to freeze and recover any profits received from illegal activity.

The total number of messages per day is estimated to be 58 billion and 13TB in size. It’s currently under a request for proposals (RFP) process. Companies that submitted a bid to build this system include Google, IBM, NASDAQ, FINRA, AxiomSL, Software AG, Tata, Wipro, and Sungard Data Systems. This is perhaps one of the most interesting big data implementation projects to watch in 2014.
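To get a feel for that scale, the two figures can be turned into an average message size and a sustained message rate. The sketch below assumes decimal terabytes (1 TB = 10^12 bytes) and spreads the load evenly over 24 hours, which understates the peaks during trading hours.

```python
MESSAGES_PER_DAY = 58_000_000_000    # 58 billion messages (from the text)
BYTES_PER_DAY = 13 * 10**12          # 13 TB, assuming decimal terabytes
SECONDS_PER_DAY = 24 * 60 * 60


def average_message_bytes():
    """Average size of one message in bytes."""
    return BYTES_PER_DAY / MESSAGES_PER_DAY


def average_messages_per_second():
    """Sustained message rate if the load were spread evenly over the day."""
    return MESSAGES_PER_DAY / SECONDS_PER_DAY


if __name__ == "__main__":
    print(round(average_message_bytes()))
    print(round(average_messages_per_second()))
```

That works out to a little over 200 bytes per message and several hundred thousand messages every second.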

Mortgage Risk Management

The recent economic challenges have affected the number of delinquent loans and credit card payments in retail banking, resulting in growing defaults. Most banking and mortgage companies have a vast amount of complex and nonuniform customer interaction data across delivery channels. In addition, operational systems store customer interaction data for only a limited amount of time for analysis. To address these challenges, one large bank implemented an analytical system that mashes up different types of data, including transactions, online web logs, mobile interactions, ATM transactions, call center interactions, and server logs. From this, its mortgage analysts can determine the most appropriate collection calls to make and even tie the collection activities into upsell campaigns. Some of the positive outcomes from this solution include early detection of potential defaults, lower default rates on retail loan products, improved customer satisfaction, and increased revenue with the ability to offer upsell campaigns during the collections process.

Healthcare and Life Science

According to McKinsey, if U.S. healthcare were to use big data creatively and effectively to drive efficiency and quality, the sector could create more than $300 billion in value every year. Two-thirds of that would be in the form of reducing U.S. healthcare expenditures by about 8 percent. These goals can be achieved by using data innovatively and by breaking down political and operational boundaries. Some of the use cases discussed in this section include remote patient monitoring, health profile analytics, optimal treatment pathways, clinical research and study design analysis, and personalized medicine.

Remote Patient Monitoring

A majority of older adults are challenged by chronic and acute illnesses and/or injuries. In fact, eight out of ten older Americans are living with the health challenges of one or more chronic diseases.

Telemedicine can be used to deliver care to patients, regardless of their physical location or ability. Research has shown that patients who receive such care are more likely to have better health outcomes and less likely to be admitted or readmitted to the hospital, resulting in huge cost savings. One well-proven form of telemedicine is remote patient monitoring. Remote patient monitoring may include two-way video consultations with a health provider, ongoing remote measurement of vital signs, or automated or phone-based check-ups of physical and mental well-being. These systems include monitoring devices that capture and send information to caregivers, including patients’ vital signs such as blood sugar level, heart rate, and body temperature. They can also transmit feedback from caregivers to the patients who are being monitored. In some cases, such devices even include “chip-on-a-pill” technology: pharmaceuticals that keep track of medication and treatment adherence. Some instruments can also self-activate and alert patients and caregivers that a test or medication must be taken. Data is subsequently transferred to healthcare professionals, where it is triaged through patient-specific algorithms to categorize risk and alert appropriate caregivers and clinicians when answers or data exceed predetermined values. Studies have shown that the combination of two interventions, in-home monitoring and coaching, after hospitalization for congestive heart failure (CHF) reduced rehospitalizations for heart failure by 72 percent and reduced all cardiac-related hospitalizations by 63 percent.
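The triage step described above can be sketched as a simple per-patient threshold check. This is only an illustration of the idea, not how any particular monitoring product works: the `VitalLimits` class, the `triage` function, the vital-sign names, and every numeric limit below are hypothetical and carry no clinical meaning.

```python
from dataclasses import dataclass


@dataclass
class VitalLimits:
    """Acceptable range for one vital sign (hypothetical, patient-specific)."""
    low: float
    high: float


def triage(readings, limits):
    """Return the names of vitals whose readings fall outside the patient's limits."""
    alerts = []
    for name, value in readings.items():
        limit = limits.get(name)
        # Vitals without a configured limit are ignored in this sketch.
        if limit is not None and not (limit.low <= value <= limit.high):
            alerts.append(name)
    return sorted(alerts)


# Illustrative limits only; a real system would set these clinically.
patient_limits = {
    "heart_rate": VitalLimits(50, 110),
    "blood_sugar": VitalLimits(70, 180),
    "body_temp_c": VitalLimits(35.5, 38.0),
}

reading = {"heart_rate": 124, "blood_sugar": 95, "body_temp_c": 38.4}
alerts = triage(reading, patient_limits)
print(alerts)  # vitals that should trigger a caregiver alert
```

Keeping the limits per patient, rather than global, mirrors the "patient-specific algorithms" mentioned in the text; a production system would likely combine several readings over time instead of judging a single sample.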

More generally, the use of data from remote monitoring systems can reduce patient in-hospital bed days, cut emergency room visits, improve the targeting of nursing home care and outpatient physician appointments, and reduce long-term health complications. According to a study by the Center for Technology and Aging, the U.S. healthcare system could reduce its costs by nearly $200 billion during the next 25 years if remote monitoring tools were used routinely in cases of CHF, diabetes, chronic obstructive pulmonary disease (COPD), and chronic wounds or skin ulcers.

Health Profile Analytics and Optimal Treatment Pathways

Advanced analytics can be applied to segment patient profiles to identify individuals who would benefit from preemptive care or lifestyle changes. Advanced analytics help identify patients who are at high risk of developing a specific disease (for example, diabetes) and would benefit from a proactive care or treatment program. In addition, they could enable the better selection of patients with preexisting conditions for inclusion in a disease-management program that best matches their needs. Further, patient data can provide an enhanced ability to measure the outcome of these programs as a basis for determining future funding and direction.

Similar methods can be applied to analyze patient and outcome data, to compare the effectiveness of various interventions, and to identify the most clinically effective and cost-effective treatments to apply. This analytical approach has great potential to reduce the incidence of both overtreatment and undertreatment (in other words, cases in which a specific therapy should have been prescribed but was not).

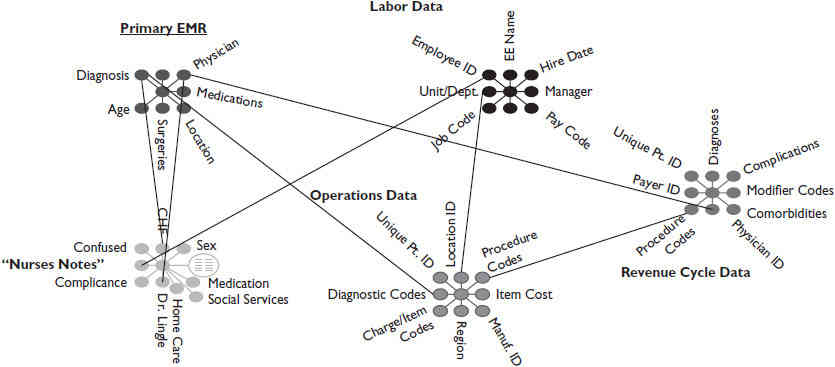

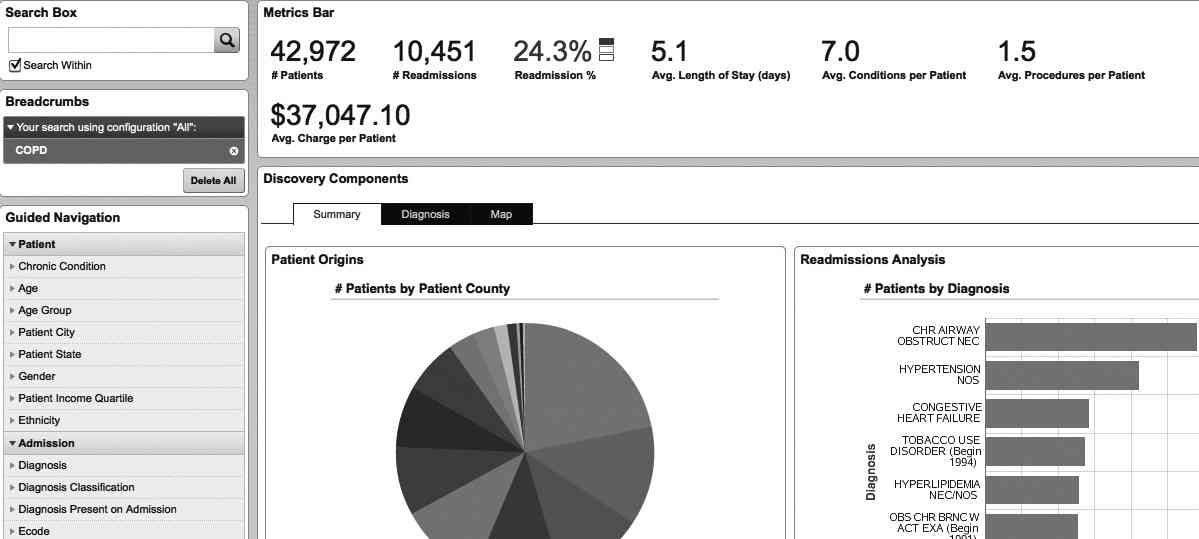

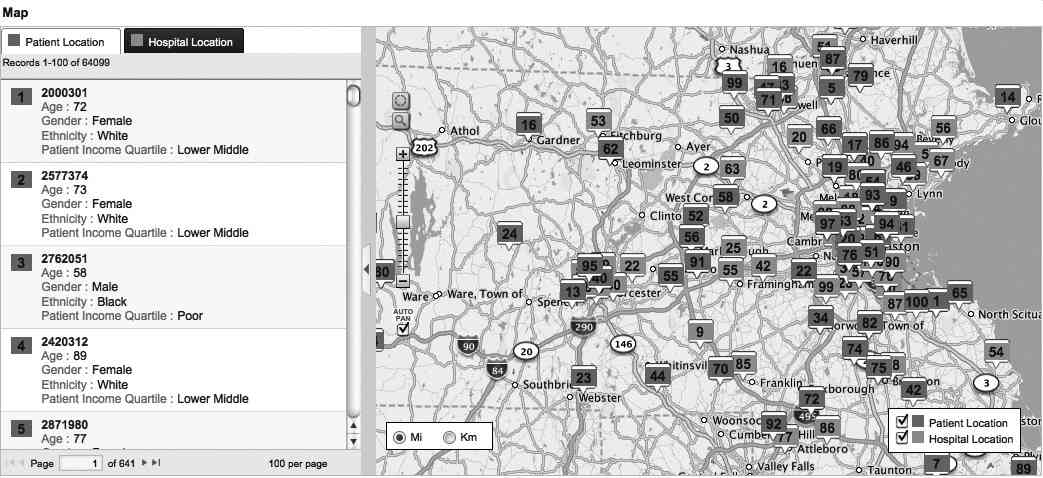

Figures 2, 3, and 4 show sample analytical applications for healthcare correlations that allow analysts to understand readmission rates and to improve healthcare outcomes. Figure 3 contains a number of visual components. It includes a search box on the top left with a Guided Navigation section below the search box that enables the user to filter patient demographics and admission diagnoses. The metric bar contains key performance indicators for patient number, readmission statistics, average stay, and average number of procedures. The discovery components can be customized to display patient county breakdowns and more detailed diagnosis analysis. Figure 4 is a map view of patients and hospital correlations in the New England area that’s under analysis.

FIGURE 2. Sample data sources for healthcare correlation analysis

FIGURE 3. Sample discharge analysis application

FIGURE 4. Map view of patients and hospital correlations

This sample analytical application brings together data from patient registration systems (responsible for admit, discharge, and transfer [ADT] events), electronic medical record (EMR) systems, billing systems, and unstructured data such as nurse notes and radiology reports. The users of this system include healthcare providers whose job is focusing on improving care management, ensuring successful medical outcomes, and accelerating operational performance improvements.

Clinical Analysis and Trial Design

Clinical analysis refers to analyzing physician order–entry data and comparing it against medical guidelines to alert for potential errors such as adverse drug reactions or events. Big data analytical applications can add more intelligence by including image analysis of medical images (such as X-ray, CT scan, or MRI) for prediagnosis, and by routinely scanning medical research literature to create a repository of medical expertise capable of proposing treatment options to physicians based on a patient’s medical records, symptoms, and past treatment outcomes.

Big data analytical applications are also being used to improve the design of clinical trials. By mining patient data across regions and locations, together with historical clinical trial outcomes, statistical methods can assess patient recruitment feasibility, expedite clinical trials, provide recommendations on more effective protocol designs, and suggest trial sites with large numbers of potentially eligible patients and strong track records. Predictive modeling draws on aggregated research data for new drugs to determine the most efficient and cost-effective allocation of R&D resources. A simulation and modeling approach is being used on preclinical and early clinical data sets to predict clinical outcomes as promptly as possible. Evaluation factors can include product safety, efficacy, potential side effects, and overall trial outcomes. This new class of analytical solutions can reduce costs by suspending research and clinical trials on suboptimal compounds earlier in the research cycle.

The benefits of this approach include lower R&D costs and earlier revenue from a leaner, faster, and more targeted R&D pipeline. These solutions help bring drugs to market faster and produce more targeted compounds with a higher potential market and therapeutic success rate. Predictive modeling can shave 3 to 5 years off the approximately 13 years it can take to bring a new compound to market.

Personalized Medicine

The recent revolution in genetic research has affected virtually every aspect of medicine. It is recognized that the genetic composition of humans plays a significant role in an individual’s health and predisposition to common diseases such as heart disease and cancer. In addition, patients with the same disease often respond differently to the same treatments, partly because of genetic variation. The adjustment of drug dosages according to a patient’s molecular profile to minimize side effects and maximize response is known as modular segmentation.

This new genomic era provides excellent opportunities to identify gene polymorphisms, to identify genetic changes that are responsible for human disease, and to build an understanding of how such changes cause disease. In the clinical arena, it is now possible to utilize the emerging genetic and genomic knowledge to diagnose and treat patients. New solutions include the analysis of a large set of genome data to improve R&D productivity and develop personalized medicine. These solutions examine relationships among genetic variation, predisposition for specific diseases, and specific drug responses to account for the genetic variability of individuals in the drug development process.

For personalized medicine to be a fully functioning reality at the clinical level, certain features are essential: an electronic medical record, personalized genomic data available for clinical use, physician access to electronic decision support tools, a personalized health plan, personalized treatments, and personal clinical information available for research use.

Specific advantages that personalized medicine may offer patients and clinicians include the following:

- Ability to make more informed medical decisions

- Higher probability of desired outcomes thanks to better-targeted therapies

- Reduced probability of negative side effects

- Focus on prevention and prediction of disease rather than reaction to it

- Earlier disease intervention than has been possible in the past

- Reduced healthcare costs

Manufacturing

A common misconception about big data is that it concerns only social media and consumer interaction. On the contrary, big data and analytical applications are delivering extensive value to manufacturing companies by enhancing and optimizing operational efficiencies and capabilities.

Quality and Warranty Management

Quality management for manufacturing companies is critical to success. The ability to determine the root cause of quality issues in a timely manner, especially under public pressure, has always been challenging. One large auto manufacturer experienced just that.

Known and cherished for quality standards, this auto manufacturer was under tremendous fire from media and government and faced litigation pressure for dismissing some claims regarding unexpected acceleration. For reliability engineers, it is always a challenge to sort through thousands of claims a day to prioritize the investigation to proactively discover engineering and design issues and filter out other causes such as driver error. Much of the information needed is unstructured data coming from customer complaints and technician resolution notes.

The auto manufacturer deployed a data discovery application that allows them to go beyond data classification and find hidden correlations through simple search interfaces. Traditionally, it is difficult to trace failures back to suppliers and find out what suppliers and parts contributed to the warranty problems. The reason is that all of the underlying data needed for the analysis is scattered across various systems, including enterprise resource planning (ERP) systems, product life-cycle management (PLM) applications, and quality and warranty management systems. The ability of the data discovery platform to join this variety of structured and unstructured data sources allows the reliability engineers to answer not only the “what” questions but also the “why” questions. In addition, the most vital questions are the questions that can’t be asked. These are the unscripted questions that surface only through exploring the data, testing hunches, and uncovering surprising trends.

For example, the auto manufacturer was investigating a rising trend in battery and smoke complaints. The data discovery system provided self-service data loading, iterative visualization, visual summary, automated data profiling and clustering, and an all-record search to cast a wide net across all of the data sources mentioned earlier. The application automatically configured data range filters based on the actual data values for ease of exploration. The search capability allowed the engineers to leverage their expertise, intuition, and tribal knowledge to ask relevant questions. Through iteratively applying filters, changing dimensions to geographic display, and using different visualization methods such as a heat map, the engineers were able to pinpoint the root cause: low temperatures in northern Midwest areas caused premature failure of the batteries. With iterative discovery and the process of exclusion, they were able to test various hypotheses, remove noise, and get to the answer in a few minutes. Other techniques they applied included using bubble charts to look at multiple variables at one time and using dimension cascade for automatic grouping and drilling. Ultimately, they were able not only to confirm the battery problems but also to determine where and when they were happening. They also found out which supplier and part were causing the defect. That enabled them to look into sourcing alternatives and take other actionable measures to correct the problem in a timely manner.

Production Equipment Monitoring

Another usage pattern for the manufacturing industry takes big data into real time. The data factory and data warehouses typically store historic information for equipment performance history and maintenance records. Advanced algorithms can be built to analyze correlations between certain events and characteristics, which can be used to predict future mechanical failures. As sensor data moves through the event-processing engine, each event is matched against the predictive model to determine whether an alert needs to be raised. Maintenance staff can then be deployed before a breakdown occurs. After the maintenance staff is deployed, they can report to the system whether the prediction was valid. That information feeds back into the predictive model and continues to improve the model and its capability.
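The loop described above (match each sensor event against a model, raise an alert, then feed the maintenance crew's verdict back into the model) can be sketched in a few lines. This is a toy illustration under stated assumptions: the `FailureModel` class, the single `vibration` feature, the threshold values, and the machine names are all hypothetical, and a fixed threshold stands in for a real predictive model.

```python
from dataclasses import dataclass, field


@dataclass
class FailureModel:
    """Toy stand-in for a predictive model: alert above a vibration threshold."""
    threshold: float = 5.0   # hypothetical starting threshold
    step: float = 0.5        # how far one false alarm shifts the threshold
    feedback_log: list = field(default_factory=list)

    def predicts_failure(self, event):
        return event["vibration"] > self.threshold

    def record_feedback(self, event, was_valid):
        """Store the field report; a false alarm makes the model less sensitive."""
        self.feedback_log.append((event["machine"], was_valid))
        if not was_valid:
            self.threshold += self.step


def process(events, model):
    """Return the machines for which the model raises an alert."""
    return [e["machine"] for e in events if model.predicts_failure(e)]


model = FailureModel()
stream = [
    {"machine": "press-1", "vibration": 3.2},
    {"machine": "press-2", "vibration": 5.4},
    {"machine": "press-3", "vibration": 7.9},
]

alerts = process(stream, model)
# Maintenance staff report that the press-2 alert was a false alarm.
model.record_feedback(stream[1], was_valid=False)
alerts_after = process(stream, model)
```

After the feedback, the same reading from `press-2` no longer triggers an alert, which is the self-correcting behavior the paragraph describes. A real deployment would retrain a statistical model on the accumulated feedback rather than nudge one threshold.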

Public Sector

With ever-shrinking budgets, government agencies in many parts of the country are under pressure to provide better services with less funding. Big data presents significant potential to improve productivity and to minimize waste, fraud, and abuse.

Law Enforcement and Public Safety

Leveraging technology to search and correlate crime data is an absolute necessity in the modern era of intelligence-led policing. Let’s take a moment to consider the challenges faced by policing organizations today. Data and information are always fragmented. Specific systems aligned to specific functions have grown organically over a number of years, and it is not uncommon for a policing organization to rely on hundreds of siloed applications to support their operations.

Police officers need to access multiple systems to find answers to commonly asked questions, such as these: Who is the registered owner of the vehicle caught on CCTV? Which suspects have physically identifying marks similar to those described by a witness? Who do these partial fingerprints retrieved from a crime scene belong to? Who else can I put in a photo identity line-up? The police officer in the field responding to incidents needs reliable answers to these questions in real time.

Large police departments are combining data from social media with their existing departmental data to identify hot spots, correlate deployment of thousands of police officers, initiate operational orders, and retrospectively identify potential witnesses. The Chicago Police Department leveraged information discovery capabilities during the May 2012 NATO Summit in that city. In addition to the 7,000 visiting dignitaries, the summit brought to the city tens of thousands of protestors. The application’s unstructured data analysis capabilities were crucial in integrating social media information with their departmental 911 and force deployment information. With this analysis, officers could be rapidly redeployed to preemptively address evolving threats.

Waste, Abuse, and Fraud Prevention

Revenue departments at various levels of government are leveraging big data technologies to optimize, deepen, and extend their revenue fraud prevention capabilities. Some of them are developing analytical applications, including classification, duplicate-filing detection, clustering, and anomaly detection, to prioritize audits and to respond more quickly to taxpayer inquiries, such as when a refund will be processed. Some of the effort has been focused on providing easy access to the data so that the auditor needs less frequent contact with a taxpayer undergoing an audit.
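Of the techniques listed above, duplicate-filing detection is the simplest to illustrate. The sketch below flags any taxpayer and tax year that appears more than once in a batch of filings. The record layout (`taxpayer_id`, `tax_year`, `refund`) and the sample values are hypothetical; real revenue systems would match on far messier identifiers.

```python
from collections import Counter


def find_duplicate_filings(filings):
    """Return (taxpayer_id, tax_year) pairs that appear more than once."""
    counts = Counter((f["taxpayer_id"], f["tax_year"]) for f in filings)
    return sorted(key for key, n in counts.items() if n > 1)


# Hypothetical sample records for illustration only.
filings = [
    {"taxpayer_id": "A100", "tax_year": 2012, "refund": 850},
    {"taxpayer_id": "B200", "tax_year": 2012, "refund": 400},
    {"taxpayer_id": "A100", "tax_year": 2012, "refund": 900},
    {"taxpayer_id": "A100", "tax_year": 2011, "refund": 300},
]

duplicates = find_duplicate_filings(filings)
print(duplicates)  # candidates to prioritize for audit
```

A flagged pair is only a candidate for review: an amended return, for instance, would also show up here, so the result feeds audit prioritization rather than deciding anything on its own.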

A number of innovative leaders in this space are also finding ways to deepen the analysis. Some are leveraging crowdsourcing to digitize paper filings. Text analytics are being used to integrate auditor notes and other relevant content repositories. Some are also using social media to correlate claim and filing accuracy. For example, “check-ins” on Facebook or Foursquare can reveal location information about the taxpayer. Year-over-year comparisons are also becoming commonplace as more historic filings become available.

Moreover, many state agencies are breaking down boundaries and including external data sources in their analysis. For example, the state of Georgia is using LexisNexis services to supply public records, including voting records, incarceration records, birth and mortality data, and house purchase and mortgage rate data for primary residences, as well as litigation data. Several states have formed the Multistate Clearinghouse to share data across states. In some cases, this organization is able to locate individuals who filed a nonresident return in one state but failed to file a resident return in their home state.
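The cross-state check in the last sentence amounts to a set difference between two shared data sets. The sketch below shows the idea only; it does not reflect how the Multistate Clearinghouse actually exchanges or matches data. The field names, taxpayer identifiers, and state pairings are hypothetical.

```python
def missing_resident_returns(nonresident_returns, resident_returns):
    """Find taxpayers who filed as nonresidents elsewhere but not in their home state.

    Returns sorted (taxpayer_id, home_state, tax_year) tuples.
    """
    filed_at_home = {
        (r["taxpayer_id"], r["state"], r["tax_year"]) for r in resident_returns
    }
    expected = {
        (r["taxpayer_id"], r["home_state"], r["tax_year"])
        for r in nonresident_returns
    }
    return sorted(expected - filed_at_home)


# Hypothetical records shared by participating states.
nonresident_returns = [
    {"taxpayer_id": "T1", "filed_in": "GA", "home_state": "SC", "tax_year": 2012},
    {"taxpayer_id": "T2", "filed_in": "GA", "home_state": "AL", "tax_year": 2012},
]
resident_returns = [
    {"taxpayer_id": "T2", "state": "AL", "tax_year": 2012},
]

missing = missing_resident_returns(nonresident_returns, resident_returns)
print(missing)  # taxpayers with no matching resident return
```

The match here relies on a shared taxpayer identifier; without one, the comparison would need fuzzy matching on names and addresses, which is where the external public-records data mentioned above becomes useful.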

Service Improvement

There are many examples of how government agencies are using big data technologies to improve services, including reducing infant mortality and child fatality, preventing pest infestations, and improving city planning.

Big data has great potential to improve city planning. One use case is to use mobile device location data to develop algorithms that look for patterns and help transportation planners with traffic reports. Here’s an example: A coastal city near another major business hub is trying to attract businesses, but it faces the stigma of a reputation for crime and poverty. The city leverages anonymized location data, combined with DMV records and U.S. census information, to show the patterns of wealth and vibrancy in the city, to convince national chains that don’t know the city well to open a store there, and then to help them choose ideal locations.