Availability is the time during which business services are operational. In other words, availability is a synonym for business continuity. For IT infrastructure, systems, sites, or data centers, availability reflects the span of time during which applications, servers, sites, or other infrastructure components are up and running and providing value to the business.
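As a simple illustration, availability over a measurement window can be expressed as the percentage of that window during which the service was up. This is a minimal sketch that assumes outages are logged as non-overlapping (start, end) pairs in hours; the function name and the sample outages are hypothetical.

```python
def availability_pct(window_hours: float, outages: list[tuple[float, float]]) -> float:
    """Return availability as a percentage of a measurement window.

    Assumes each outage is a (start, end) pair in hours, that outages do not
    overlap, and that they lie entirely inside the window.
    """
    downtime = sum(end - start for start, end in outages)
    uptime = window_hours - downtime
    return 100.0 * uptime / window_hours


# Hypothetical 30-day month (720 hours) with two 1.5-hour outages.
print(f"{availability_pct(720, [(100.0, 101.5), (400.0, 401.5)]):.3f}%")  # prints "99.583%"
```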

This article focuses on IT infrastructure and data center availability as the foundation of business uptime.

IT infrastructure availability ensures that infrastructure components are fault tolerant and resilient, and that they provide high availability and reliability both within a site and across disaster recovery sites or data centers, in order to minimize the impact of planned or unplanned outages. Reliability keeps systems up and running for longer periods, and redundancy helps ensure system reliability.
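One common way to connect reliability to availability (a standard formula, not one given in the original text) is steady-state availability, A = MTBF / (MTBF + MTTR), where MTBF is the mean time between failures and MTTR is the mean time to repair. More reliable components (a longer MTBF) and faster repair (a shorter MTTR) both raise availability. The sketch below assumes a simple repairable component; the figures are hypothetical.

```python
def steady_state_availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Fraction of time a repairable component is up: MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Hypothetical server: fails on average every 5,000 hours, takes 4 hours to repair.
a = steady_state_availability(5000, 4)
print(f"{a:.5%}")
```

Halving the repair time in this model roughly halves the unavailability, which is why fast failover matters as much as component quality.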

Expectations for high availability may vary depending on the service level adopted and agreed upon with business stakeholders. For disaster recovery, availability reflects the time it takes to return to normal business services, either by failing services over to the disaster recovery site or by restoring from backup, while keeping data loss to a minimum and reducing recovery time.
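The two disaster recovery measures mentioned here, how much data is lost and how long it takes to return to normal service, can be computed from incident timestamps. A minimal sketch, assuming the time of the last good backup or replica, the failure time, and the restoration time are known; the function name and timestamps are hypothetical. These values are often compared against targets commonly called the recovery point objective (RPO) and recovery time objective (RTO).

```python
from datetime import datetime, timedelta


def recovery_metrics(last_good_copy: datetime, failure: datetime,
                     restored: datetime) -> tuple[timedelta, timedelta]:
    """Return (data_loss_window, recovery_time) for one DR incident.

    data_loss_window: changes made after the last backup/replica are lost.
    recovery_time: how long business services were unavailable.
    """
    if not last_good_copy <= failure <= restored:
        raise ValueError("expected last_good_copy <= failure <= restored")
    return failure - last_good_copy, restored - failure


# Hypothetical incident: nightly backup at 01:00, failure at 09:30, service back at 13:00.
loss, downtime = recovery_metrics(datetime(2024, 1, 10, 1, 0),
                                  datetime(2024, 1, 10, 9, 30),
                                  datetime(2024, 1, 10, 13, 0))
print(loss, downtime)  # prints "8:30:00 3:30:00"
```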

Availability Challenges

Major disasters in the IT infrastructure and data center space have made availability a top priority for business continuity. We need to understand the challenges that availability poses for IT infrastructure and data centers.

Before examining the challenges of managing and supporting IT infrastructure, specifically server availability, let's first look at the backbone of IT infrastructure, the data center, and its primary purpose.

- A data center is a collection of servers connected through network and storage devices, controlled by software, with the aim of keeping the business running seamlessly. A data center is the brain of the business and one of the sources of energy that drives it faster with little or no interruption.

- In the early days of data center design, there were computer rooms: computers were housed in a room dedicated to IT. With the rapid growth of IT requirements, risks to data integrity and security, and challenges in modularity, availability, and scalability, data centers moved out of company premises to dedicated sites, with network, security, and storage kept as separate, dedicated components of data center management.

So what are the data center availability challenges? Here are some of the critical data center factors that lead to them.

To begin with, poor planning in selecting an IT or data center site can have a severe availability impact due to natural disasters such as floods, earthquakes, and tsunamis. Similarly, poorly planned floor design may lead to a disaster caused by flooding or other water accumulation.

Insufficient fire protection and fire safety policies are another big challenge to availability and may lead to data center disasters. The next challenge is insufficient physical security, such as not requiring photo identification, having no CCTV, and not maintaining visitor log registers.

Hardware devices such as servers, storage, and network equipment, along with their components, will have an immediate impact on availability if they are installed without redundancy. Most traditional data centers suffer service disruptions due to non-redundant devices.
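The benefit of redundancy can be quantified with standard reliability-block formulas: components in series must all be up, so their availabilities multiply, while n redundant components in parallel fail only if all of them fail, giving 1 - (1 - A)^n. This sketch assumes independent failures and instantaneous failover, which real systems only approximate; the 99% and 99.9% figures are hypothetical.

```python
from math import prod


def series(*avail: float) -> float:
    """Every component is required, so availabilities multiply."""
    return prod(avail)


def parallel(*avail: float) -> float:
    """Any one component is enough: the group is down only if all members are down."""
    return 1 - prod(1 - a for a in avail)


# Hypothetical power supply (PSU) that is available 99% of the time.
single_psu = 0.99
dual_psu = parallel(0.99, 0.99)      # two PSUs, either can carry the load
server = series(0.999, dual_psu)     # non-redundant motherboard + redundant PSU pair
print(f"{single_psu:.4%} {dual_psu:.4%} {server:.4%}")
```

The non-redundant component now dominates the server's unavailability, which is why single points of failure are the first thing to eliminate.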

Electrical power is one of the most critical factors in data center uptime. The absence of dual power feeds, backup power supplies, or green energy sources is a critical availability challenge. Similarly, the absence of temperature control in the data center will have a disastrous impact on high-performance, temperature-sensitive computing devices.

Unskilled or untrained technical staff, or staff without the right level of skill, are big contributors to risks to data center availability.

Not having monitoring at every level, from heating and cooling measurements in the data center to the monitoring of applications, servers, and network and storage devices, will directly affect host availability. Likewise, not having a defined and applied backup and restoration policy will affect host availability.
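A minimal sketch of the multi-level monitoring described above: readings from different layers (data center inlet temperature, disk usage, host reachability) are compared against thresholds, and every breach produces an alert. The metric names and limits are hypothetical placeholders rather than recommendations; a real deployment would rely on a dedicated monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    metric: str
    limit: float
    higher_is_bad: bool = True  # False for metrics where a low value is the problem


# Hypothetical thresholds spanning facility and host layers.
THRESHOLDS = [
    Threshold("dc_inlet_temp_c", 27.0),
    Threshold("disk_used_pct", 90.0),
    Threshold("ping_success_pct", 99.0, higher_is_bad=False),
]


def check(readings: dict[str, float]) -> list[str]:
    """Return an alert message for every reading that breaches its threshold.

    A metric with no reading is reported too, since silence from a monitored
    component is itself a warning sign.
    """
    alerts = []
    for t in THRESHOLDS:
        value = readings.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no data")
        elif (value > t.limit) if t.higher_is_bad else (value < t.limit):
            alerts.append(f"{t.metric}: {value} breaches limit {t.limit}")
    return alerts


print(check({"dc_inlet_temp_c": 29.5, "disk_used_pct": 42.0, "ping_success_pct": 100.0}))
```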

The absence of host security measures, in the form of hardware and software security policies, is another challenge to availability.

Addressing Availability Challenges

As discussed in the previous section, availability is a key factor in ensuring application and business uptime. To address the challenges to data center availability, each of the challenges above should be addressed and resolved: site selection, floor planning, fire safety, power supply redundancy, server, network, and storage-level redundancy, physical security, operating system-level security, network security, staff skills, and monitoring solutions.

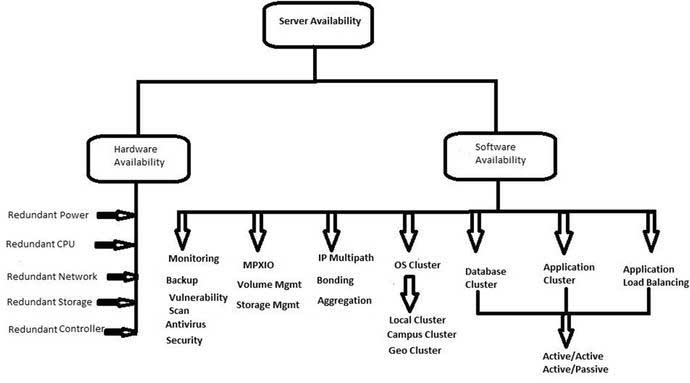

When it comes to server availability, it reflects hardware redundancy and software-based high availability. In other words, redundant hardware components along with software-driven fault tolerant solutions ensure server availability. Figure 1 further gives detailed components impacting and contributing to the server availability.

Figure 1. High Availability Components

As explained above, server availability is mainly a combination of hardware and software availability. On the hardware side, introducing redundancy for the server's critical components provides high resilience and increased availability.

The most critical part of addressing availability challenges is ensuring that the server has redundant power supplies. The power supplies should be fed from two different power sources, with minimal switchover time between them. Battery and generator backup power also help ensure minimal or no server downtime.

The next important component for host availability is the CPU. Having more than one CPU ensures that if one processor fails, the processes running on it can fail over to a working CPU.
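The idea of moving processes off a failed CPU can be illustrated with a toy scheduler model. This is only a hypothetical simulation of the concept: in practice the operating system (and, on some platforms, hardware support for taking faulty processors offline) handles this, and the CPU ids and process names below are made up.

```python
def fail_over_cpu(assignments: dict[int, list[str]], failed_cpu: int) -> dict[int, list[str]]:
    """Move every process from failed_cpu to the least-loaded remaining CPUs.

    assignments maps CPU id -> list of process names. Returns a new mapping
    without the failed CPU. Raises RuntimeError if no working CPU is left.
    """
    survivors = {cpu: list(procs) for cpu, procs in assignments.items() if cpu != failed_cpu}
    if not survivors:
        raise RuntimeError("no working CPU left: server is down")
    for proc in assignments.get(failed_cpu, []):
        # Pick the CPU currently running the fewest processes (lowest id on ties).
        target = min(survivors, key=lambda cpu: (len(survivors[cpu]), cpu))
        survivors[target].append(proc)
    return survivors


# Hypothetical 3-CPU server where CPU 0 fails.
print(fail_over_cpu({0: ["db", "web"], 1: ["cron"], 2: ["mail"]}, failed_cpu=0))
```

With a single CPU there is nowhere to fail over to, which is the point the paragraph above makes about needing more than one processor.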

Next in line for addressing server availability challenges is redundant network components, with redundancy at both the switch level and the server port level. This hardware redundancy is then combined with software-based network redundancy, such as port aggregation, IP Multipathing (IPMP), or interface bonding, so that network interface failures are handled with little or no impact on application availability.
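Interface bonding in an active-backup arrangement can be pictured as follows: one link carries traffic, and when link monitoring reports it down, traffic moves to the next healthy member. The sketch below is a hypothetical model of that selection logic only, not the Linux bonding driver or IPMP; the interface names eth0 and eth1 are placeholders.

```python
from typing import Optional


class ActiveBackupBond:
    """Toy model of an active-backup bond: one member link carries traffic at a time."""

    def __init__(self, members: list[str]):
        if not members:
            raise ValueError("a bond needs at least one member interface")
        self.members = members                       # in priority order
        self.link_up = {m: True for m in members}
        self.active: Optional[str] = members[0]

    def set_link(self, iface: str, up: bool) -> None:
        """Record a link state change (as a link monitor would) and re-elect if needed."""
        self.link_up[iface] = up
        if self.active is None or not self.link_up[self.active]:
            # Fail over to the first healthy member, or None if every link is down.
            self.active = next((m for m in self.members if self.link_up[m]), None)


bond = ActiveBackupBond(["eth0", "eth1"])
bond.set_link("eth0", up=False)   # primary link fails
print(bond.active)                # prints "eth1"
bond.set_link("eth0", up=True)    # primary recovers; traffic stays on eth1
print(bond.active)                # prints "eth1"
```

In this sketch, traffic does not switch back automatically when the primary recovers, which avoids a second interruption; that is a design choice of the model, not a statement about any particular product.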

Storage redundancy is achieved at two levels. The first is local storage, using either hardware- or software-based RAID configuration; the second is SAN- or NAS-based storage LUNs configured for high availability. OS-based multipathing software such as MPxIO provides multiple paths to the storage.
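To illustrate how a RAID configuration can survive a disk failure, here is the parity idea used by RAID 5: the parity block of a stripe is the XOR of its data blocks, so any single missing block can be rebuilt by XOR-ing the surviving blocks. This is a simplified single-stripe sketch with hypothetical block contents; real RAID 5 also rotates parity across disks and works on fixed-size blocks.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("all blocks in a stripe must have the same length")
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


# One stripe across three data disks plus a parity disk (hypothetical contents).
data = [b"ABCD", b"EFGH", b"IJKL"]
parity = xor_blocks(data)

# Disk 1 fails: rebuild its block from the remaining data blocks and the parity block.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt)  # prints b'EFGH'
```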

On the larger scale of data center (DC) availability, data centers are classified and certified into four tiers based on business availability requirements:

- Tier I – Single, non-redundant distribution path; 99.671% availability, allowing 28.82 hours of downtime per year.

- Tier II – Tier I capabilities plus redundant capacity components; 99.741% availability, allowing 22.688 hours of downtime per year.

- Tier III – Tier II capabilities plus multiple distribution paths (dual powered); 99.982% availability, allowing 1.5768 hours of downtime per year.

- Tier IV – Tier III capabilities plus multi-powered HVAC systems; 99.995% availability, allowing 26.28 minutes (0.438 hours) of downtime per year.
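The downtime allowances follow directly from the availability percentages: annual downtime = (1 - availability) x 8,760 hours, assuming a 365-day year. The sketch below derives them; for Tier I this works out to about 28.82 hours.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored

TIERS = {"Tier I": 99.671, "Tier II": 99.741, "Tier III": 99.982, "Tier IV": 99.995}


def annual_downtime_hours(availability_pct: float) -> float:
    """Hours per year a system may be down at the given availability percentage."""
    return (1 - availability_pct / 100) * HOURS_PER_YEAR


for tier, pct in TIERS.items():
    hours = annual_downtime_hours(pct)
    print(f"{tier}: {pct}% -> {hours:.3f} h ({hours * 60:.1f} min) per year")
```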

Finally, host monitoring (hardware, OS, and application) should be integrated with event generators, and the resulting events should be categorized into different service levels based on their criticality, to ensure minimum disruption to services.
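A hypothetical sketch of categorizing monitoring events into service levels by criticality: each severity maps to a priority and a target response time. The severity names, priorities, and response times are illustrative assumptions, not taken from any specific monitoring product.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping of severity -> (priority, target response time in minutes).
SERVICE_LEVELS = {
    "critical": ("P1", 15),
    "major": ("P2", 60),
    "minor": ("P3", 240),
    "info": ("P4", None),  # logged only, no response target
}


@dataclass
class Event:
    source: str     # e.g. "hardware", "os", "application"
    severity: str   # expected to be one of the SERVICE_LEVELS keys
    message: str


def categorize(event: Event) -> tuple[str, Optional[int]]:
    """Return (priority, response_minutes); unknown severities are escalated to P1."""
    return SERVICE_LEVELS.get(event.severity, SERVICE_LEVELS["critical"])


events = [
    Event("hardware", "critical", "power supply 1 failed"),
    Event("os", "minor", "filesystem /var at 85%"),
    Event("application", "unknown", "unrecognised alert"),
]
# Handle the most urgent events first.
for e in sorted(events, key=lambda ev: categorize(ev)[0]):
    print(categorize(e)[0], e.source, e.message)
```

Escalating unrecognised severities to the highest priority is a deliberately cautious choice in this sketch: a misclassified event then costs some attention rather than an unnoticed outage.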

OS Clustering Concepts

Operating system cluster architecture is a solution built on highly available, scalable systems that ensures application and business uptime, data integrity, and increased performance through concurrent processing.

Clustering improves the system's availability to end users and its overall tolerance to faults and component failures. When a server fails, the cluster automatically shuts down the applications on the failed server and switches them over to other servers. In general usage, a cluster is merely a collection or group of objects, but in server technology the concept is extended to cover server availability and fault tolerance.
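Automatic switchover of this kind is typically driven by heartbeats between cluster nodes: if a node stays silent for longer than a timeout, it is declared failed and its applications are moved to a surviving node. The following is a simplified, single-process model of that logic; the node names, service names, and 10-second timeout are hypothetical, and real cluster software must also handle split-brain situations (for example through quorum or fencing), which this sketch ignores.

```python
HEARTBEAT_TIMEOUT = 10.0  # seconds without a heartbeat before a node is declared failed


class Cluster:
    """Toy failover manager: services move off nodes whose heartbeats stop."""

    def __init__(self, nodes: list[str], placement: dict[str, str]):
        self.last_seen = {node: 0.0 for node in nodes}  # node -> last heartbeat time
        self.placement = dict(placement)                # service -> node running it

    def heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now

    def check(self, now: float) -> list[str]:
        """Declare silent nodes failed, switch their services over, and log each move."""
        alive = [n for n, t in self.last_seen.items() if now - t <= HEARTBEAT_TIMEOUT]
        log = []
        for service, node in list(self.placement.items()):
            if node in alive:
                continue
            if not alive:
                log.append(f"{service}: no surviving node, service down")
                continue
            target = alive[0]
            self.placement[service] = target
            log.append(f"{service}: {node} -> {target}")
        return log


cluster = Cluster(["node-a", "node-b"], {"db": "node-a", "web": "node-b"})
cluster.heartbeat("node-b", now=12.0)   # node-a has gone silent since t=0
print(cluster.check(now=15.0))          # prints ['db: node-a -> node-b']
```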

Clustering is defined as below:

“Cluster analysis or clustering is the task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some sense or another) to each other than to those in other groups (clusters).” – Wikipedia definition

“Group of independent servers (usually in close proximity to one another) interconnected through a dedicated network to work as one centralized data processing resource. Clusters are capable of performing multiple complex instructions by distributing workload across all connected servers.” – Business Dictionary

OS-based clusters are classified by where the cluster nodes are installed: local cluster, campus cluster, metropolitan cluster, and geographic cluster.

- A local cluster is built within a single data center site. In this configuration, disks are shared across all cluster nodes for failover.

- A campus cluster, also known as a stretched cluster, is stretched across two or more data centers within a limited distance, and mirrored storage is visible to all cluster nodes, as in a local cluster configuration.

- A metropolitan cluster is set up within a metropolitan area and is still limited by distance. Its setup is similar to that of a campus cluster, except that storage is replicated rather than mirrored, using storage remote replication technologies. A metropolitan cluster provides disaster recovery in addition to local high availability.

- A geographic cluster, ideally configured as a dedicated disaster recovery cluster, is set up across two or more geographically separated data centers.

Local and campus clusters are set up with similar cluster configurations using the same cluster software, whereas metropolitan and geographic clusters use different software, network, and storage configurations (for example, replication with TrueCopy or SRDF).

Business Value of Availability

Unavailability of IT services has a direct impact on business value. Disruption to business continuity affects end users, the client base, stakeholders, brand image, the company's share value, and revenue growth. A highly available IT infrastructure and data center backbone lets an enterprise meet the bulk of its customers' requirements, reduces the time needed to respond to customers' needs, and helps with product automation, ultimately contributing to revenue growth.

Below are some examples of data center disasters caused by fire, flood, or lack of security that made systems and services unavailable for hours, resulting in heavy revenue losses and severe damage to the companies' brand images.

On April 20, 2014, a fire broke out in the building housing the Samsung SDS data center. The fire caused users of Samsung devices, including smartphones, tablets, and smart TVs, to lose access to data they may have been trying to retrieve.

In 2010, heavy rain broke a ceiling panel in the colocation facility of Datacom (an Australian IT solutions company), destroying storage area networks, servers, and routers belonging to its customers. On September 9, 2009, an enormous flash flood hit Istanbul and destroyed Vodafone's data center.

In 2012, the addition of a leap second (a one-second adjustment applied to Coordinated Universal Time, UTC, to keep atomic clock time in step with Earth's rotation) caused problems for a number of IT systems, and several popular websites, including LinkedIn, Reddit, Mozilla, and The Pirate Bay, went down.

In 2007, NaviSite (now owned by Time Warner) was moving customer accounts from Alabanza's main data center in Baltimore to a facility in Andover, Massachusetts. They literally unplugged the servers, put them on a truck, and drove them more than 420 miles. Many websites hosted by Alabanza were reportedly offline for as long as the drive and reinstallation work took.

A few years ago there was a data center robbery in London. UK newspapers reported an “Ocean’s Eleven”-type heist with the thieves taking more than $4 million of equipment.

Target Corporation, December 2013: a data breach exposed the credit and debit card information of about 40 million customers and the personal information of up to 70 million, at a cost of about $162 million.

Clearly, any impact on the availability of data center components such as servers, network, or storage will have a direct impact on the customer's business.