All of the current practices I’m aware of that are typically used for performance tuning can be grouped into a category that I call a small model approach. The small model approach includes a variety of methods that I also refer to as traditional methods because they represent the way tuning has been accomplished since Oracle began instrumenting and exposing performance metrics. The amount of data available to tuners has increased greatly, but the methods for examining the metrics remain essentially the same.

All of the current practices I’m aware of that are typically used for performance tuning can be grouped into a category that I call a small model approach. The small model approach includes a variety of methods that I also refer to as traditional methods because they represent the way tuning has been accomplished since Oracle began instrumenting and exposing performance metrics. The amount of data available to tuners has increased greatly, but the methods for examining the metrics remain essentially the same.

All of the traditional methods rely on a subset of metrics chosen from the overwhelming number of metrics persisted by Oracle and stored in the AWR (Automatic Workload Repository) or other repositories in the case of v$ views, StatsPack, custom tools, and other third-party tools. Oracle gathers these metrics automatically in the background (while applications are running) and persists them into a repository that is known as AWR. In its raw form, AWR is a massive repository of historic performance metrics and statistics. In an effort to make that data sensible to the user, Oracle exposes many of these metrics, though not all, in various reports (e.g., AWR report, active session history (ASH) report, AWR compare report, etc.). They have also developed tools that use the collected metrics and advanced algorithms to help direct tuning experts in their efforts to address performance issues (e.g., the various advisors available with the Tuning Pack license).

The purpose of all traditional approaches is to pinpoint areas of low performance which can then be targeted for tuning. The metrics chosen for analysis are selected based on their usefulness for solving performance problems, but for any given incident, the performance problem may or may not be manifested in the particular set of predefined metrics exposed by the tool. In my experience, performance issues are different every time (despite patterns of issues), and each class of problem tends to manifest in a different set of metrics; so if the tool misses the metric, the tuner is left to navigate her own path through a tsunami of metrics to find the problem. Expertise can be gained in knowing which metric to choose for examination, but it is often a hunt-and-seek process that can be particularly discouraging to someone new to the field.

Late I will discuss briefly a few of the most common traditional methods/tools used by performance tuners and how they can be useful. I’ll mention their limitations as well in order to set the stage for the introduction of the DOPA process. This dynamic process is able to provide a more robust predictive analysis based on a much greater data source and to do so quickly and with proven impact. I’ve chosen the following four traditional approaches to discuss because they are the most frequently used by tuning experts.

OEM

OEM is shorthand for Oracle Enterprise Manager Cloud Control 13c. When I refer to OEM, I usually mean the performance pages of OEM. OEM includes metrics contained in active session history (ASH) and other DBA_HIST views related to ASH (including DBA_HIST_SQLSTAT, DBA_HIST_SQL_PLAN) as well as metrics from a variety of other sources. I have found OEM most helpful for detecting problems in the top resource-consuming SQL statements that may require tuning. For example, the OEM ASH Analytics page will enable you very quickly to identify the SQL statements that are consuming the most resources. Generally, if you can tune this SQL, you can get better performance.

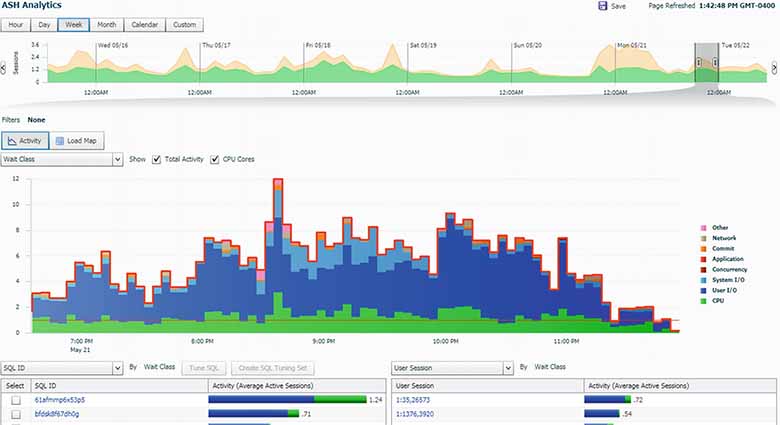

Figure 1 is an example of an ASH Analytics page from within OEM. It shows the top running SQL statement for the database in question to be sql_id 61afmmp6x53p5 [a sql_id is the primary identifier that Oracle uses to identify a SQL statement; refer to the Oracle documentation for more details]. This top running SQL statement is taking significantly more time than the next longest running SQL statement. Navigating around the ASH Analytics page in OEM, you can easily see the top SQL statements and find out what it/they are waiting on; in this case sql_id 61afmmp6x53p5, I was waiting on event, “db file sequential read,” which is essentially indexed I/O.

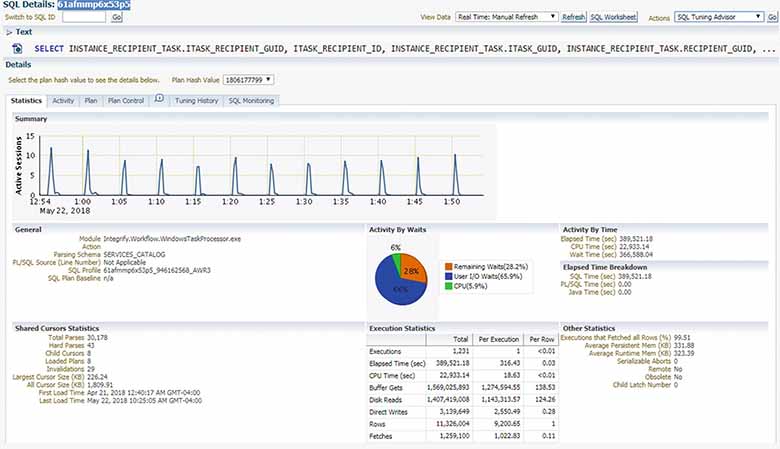

By clicking on the hyperlink for the SQL ID in the ASH Analytics page (Figure 1), I can drill even deeper to the SQL Details page, and this SQL runs every five minutes and already has a custom SQL Profile on it. An example of the SQL Details page is in Figure 2. You can see in the figure that the statement being examined runs every five minutes and that there is a SQL Profile defined for it [in the section labeled “General,” field labeled “SQL Profile”]. Note: SQL Profiles are sometimes provided by SQL Tuning Advisor as a solution to a SQL performance problem; it essentially fixes the execution plan through hints persisted outside the SQL statement. I refer the reader to the Oracle documentation on SQL Profiles for more details.

Figure 1 Example ASH Analytics page from within OEM

Figure 2 Example SQL Details page

OEM is very easy to use for top SQL analysis. Because OEM is easy to run and because SQL is fairly easy to tune (when the SQL Tuning Advisor provides actionable advice), many tuners jump right in and automatically tune the top SQL statement thinking that if a database is running a particular SQL statement a lot, then it must be at least a part of the performance problem. However, this is just not true in most of the performance cases I’ve worked on.

First of all, in my experience, application sessions running in the background (rather than sessions running application front-end SQL statements) are usually the source of top SQL, and this background work is almost never the source of the users’ complaint. Their complaint is usually related to some business function in the online portion of the application that is not meeting the performance requirements. To the extent that tuning the top SQL statements will improve performance of these background processes and therefore reduce load on the machine, tuning top SQL can be helpful, but tuning top SQL when the chief problem lies elsewhere just won’t fix the problem that the users are experiencing.

For example, in the preceding problem interval, users were complaining about a bit of SQL running from their reporting application that was taking approximately a minute and a half to execute, but the performance requirements were on the order of a few seconds. The users knew the time frame of the poor performance, the connect account name, and the module/machine name running the code. In this case, the SQL was not easy to identify using OEM because it wasn’t a top resource-consuming SQL statement. When I used a custom query against DBA_HIST_ACTIVE_SESS_HISTORY, I was able to identify the sql_id. I ran that SQL statement through the SQL Tuning Advisor which recommended two new indexes [refer to the Oracle documentation for details on how to run the SQL Tuning Advisor]. Once these indexes were implemented, users saw a 90% performance improvement.

Another performance issue I encountered where the OEM/ASH Analytics use case did not identify the problem was when a user’s session was stuck on an idle wait event (SQL*Net message from client). Again, using a custom SQL statement against the session history, I was able to identify the issue. As it turns out, this was a query across a database link and something happened to the remote session. For this problem, the solution was simple — kill the session and restart the query.

ASH Reports

The ASH report embodies a problem-solving approach similar to OEM ASH Analytics page in that it targets SQL tuning by focusing on the top SQL statements for a session. Both of these tools can be useful when the source of the problem is a SQL statement. Both tools target SQL tuning by identifying the top resource-consuming SQL statements running on a database. The shortfall of ASH reports is similar to those already discussed for OEM. In both cases, if the problem is other than the top SQL, the problem will be missed using these tools.

I have observed that less battle-hardened/seasoned tuners often grab the top SQL and declare it/them to be the problem and then go about trying to fix it/them—but, as I discussed in the preceding section on OEM, just because a SQL statement ran often or long doesn’t mean it was a cause of the poor performance being reported by the application users. When SQL tuning is the cause of poor performance, we want to identify not the longest running SQL but the SQL that is actually causing the performance problem. Spending time tuning a top SQL statement when that is not the real problem is a time waster; several fix/test cycles may pass before you are able to hone in on the real problem (if you ever get there).

While I was still using traditional approaches, I found both tools, OEM and ASH reports, cumbersome to use because, while they are good for the use cases for which they are designed (identifying top SQL), they were not helpful for most of the performance problems I encountered on a daily basis. Only a small percentage of the problems I see are caused by the top SQL. I improved upon these tools by developing custom code to directly query the AWR data for many of the same metrics contained in OEM and ASH reports with the slightly different purpose of finding the slowest SQL involved in a reported slow process, instead of the top SQL overall for the instance. My code allowed me to subset at a more granular level giving me greater flexibility, and this enabled me to focus in on the problem quickly. I have done several professional presentations on the method I used for tuning with this and other tools that I developed. I won’t digress to discuss them in detail here since evaluation of SQL performance is incorporated into the more comprehensive DOPA process presented in my blog and much more effectively accomplished by it.

OEM/ASH Analytics and the ASH report were designed to identify top SQL. When used for this purpose, they are effective tools. They are not, however, an effective or efficient starting point for tuning in general. This is because they only identify top SQL. There is a high potential for lack of alignment with the reported performance problem as the preceding examples demonstrate. An even greater concern is that many causes of performance degradation have nothing at all to do with SQL, and these will be missed entirely using these tools.

AWR

The AWR is the Cadillac of data sets. It is also the tsunami of data sets. The AWR report exposes roughly 50 AWR data views containing tens of thousands of metrics, but to a great extent, the report lacks an interpretive component. Whereas the problem with OEM and ASH reports was that they were too narrowly focused and therefore useful for only a particular type of problem-solving use case, the data collected in the AWR is comprehensive and can tell you about every conceivable problem on the database, but the sheer volume of data is incredibly overwhelming. Consequently, you have to choose what metrics to look at. You also have to know what each metric measures and what kind of value it is indicating (time, percent usage, cumulative, etc.), and you have to know where to find it.

I have talked to many database analysts about AWR, and the most common remark I hear is that it is so overwhelming they don’t know how to use it or where to start. I’ve met very few people who are proficient at using it. There are a few easily identifiable sections that most tuners adopt as their go-to sections, but most of the AWR is ignored.

If a tuner wanted to attempt to use AWR, he/she might start by trying to understand a particular metric. Some documentation exists, but much of AWR is not well documented. Even where documentation does exist, you might need to piece information together.

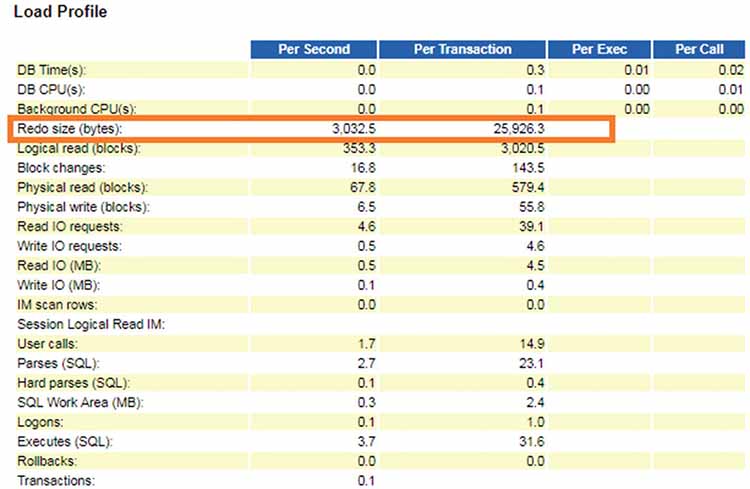

For example, the load profile section of AWR shows a line item “Redo size (bytes)” as pictured in Figure 3. There is a column for this variable in the AWR report showing Per Second and Per Transaction values. I discovered thru my own investigation that this is really a compilation of two metrics:

- Redo size/sec which is collected from Sys Metric Summary where its metric name is “Redo Generated Per Sec”

- Redo size/transaction which is collected from Sys Metric Summary where its metric name is “Redo Generated Per Txn”

Figure 3. An example load profile section from an AWR report showing a line item “Redo size (bytes)”

Knowing the variable name and where it comes from is a first step, but you also have to know what is being measured and how that information can be useful. The analyst must bring a knowledge of computer science and Oracle architecture in order to make sense of the metrics.

Another level of difficulty lies in the fact that raw numbers must be interpreted. Even if the analyst has a good knowledge of computer science and understands the metric, he has to determine if the value reported is indicative of a problem. Returning to Figure 3, an analyst would probably be able to guess that the Redo size metric is some measure of update activity against the database in bytes, but I don’t think most would be able to tell you if this is a normal number or if it is indicative of a problem.

Most people who use the AWR data choose to learn about a few metrics and use them regularly. Perhaps they heard a speaker say how useful it was. They get comfortable with their chosen metrics and rely on them whenever analyzing a performance problem. This is what I did when I used a traditional approach for tuning; I had my go-to metrics for solving problems.

Using the metrics in AWR may be the best way to find any performance problem on any database, but using all of the metrics is humanly impossible. Which metrics to choose for solving a problem is the challenge. A select few favorites may enable you to get it right the first time, every time, but more often it is a process that requires very skillful navigation and multiple levels of investigation to be able to hone in on a problem. Even with an educated selection of metrics to begin with, there is usually some trial and error, narrowing down of the problem, eliminating some areas, and focusing on others. It’s a process that requires many important decisions along the way. A skillful tuner can navigate quickly using the parts of AWR that are most useful for a given problem, but the novice will probably be quickly overwhelmed.

ADDM

Unlike the AWR report, which has no interpretive component, the Automatic Database Diagnostic Monitor (ADDM) is designed to be an analytic tool. Oracle Corporation describes ADDM as “a revolutionary, first-of-its-kind performance self-diagnostic solution.” ADDM interprets stats collected/reported by the AWR framework and analyzes them in regard to the top SQL statements and wait events [beginning in version 12c, Oracle includes the high-level findings from the ADDM report in the AWR report]. The goal of the ADDM analysis is to optimize database time; thus, long-running/high resource–consuming processes are identified as “problems.” ADDM will often direct you to run further investigations on these “problems” (usually SQL Tuning Advisor or Segment Tuning Advisor). It will also frequently generate “solutions.” The ADDM solutions most frequently involve parameter changes, specifically increasing SGA or PGA or log buffer.

When I was tuning using a traditional approach, I found the ADDM to be a valuable tool as have many other tuners. The metrics contained in it are extremely useful for identifying many problems that can affect performance, and the actionable advice is often profitable. However, once again, this tool has its limitations.

One problem with the ADDM that I frequently encounter is a scenario in which the problem SQL is not identified as one of the top SQL statements in the ADDM analysis. In these cases, the ADDM totally misses the problem SQL and will therefore not address the SQL statements that are key to solving the specific performance problem that has been reported. In this situation, an inexperienced tuner might take the SQL statements highlighted by the ADDM and spend time tuning SQL that have nothing to do with the real issue. The reason for this “deficiency” in the ADDM tool is its inability to evaluate a specific problem at a level detailed enough to give really useful information for that problem. That is, ADDM looks at the entire database, not a specific issue, and quite frequently, application performance issues are with SQL far below the top 5. I learned to view the ADDM as most useful for identifying “low-hanging fruit” because it is generally the case that if you tune SQL identified by the ADDM, you will get some performance improvement, but this improvement may or may not be significant. More importantly, as I’ve already mentioned, it may not even address the real problem, even if the real problem is SQL related.

Another shortcoming of the ADDM is that not all ADDM tuning advice will yield meaningful performance impact. If actioned unquestioningly, performing all the tuning recommended by ADDM would supply lots of busy work for the tuning professional and add cost unnecessarily. For example, ADDM might recommend increasing memory. Much effort could be spent upgrading memory with negligible impact to the overall performance. This would be a waste of effort as well as a monetary loss since adding memory has a significant cost.

Summary

Oracle Corporation designed AWR and the suite of tools that accompany it as part of their effort to provide a manageability framework to undergird a self-managing database. Their goal was/is to make out-of-the-box database tuning as effortless as possible, but the tools they’ve created do not replace the performance tuner. Just as medical technology has produced extremely advanced tools for surgical procedures, the metrics stored in the AWR must still be used by a skilled operator to achieve the best outcome.

The traditional approaches discussed represent some of the most commonly used tools for performance tuning. Each of them relies on a subset of the data available in the AWR to help the tuner resolve performance problems, and therefore I consider them small model approaches. The limitation with all of these traditional, small model approaches is that although they can be useful for some instances, the tuner has to know which tool to use for each different type of problem. It is just not possible for a single individual to look at all of the available data collected by Oracle, so he/she must choose. The effectiveness of his analysis will depend on making the right choice. A tuner with lots of experience may be able to “intuitively” know which tool to go to in response to a particular complaint and may be able to diagnose problems with some consistency. But for the novice, making the wrong choice may cause him to come up empty-handed, or it may lead him into tuning efforts that are nonproductive. For the novice using the traditional approach, learning almost always involves lots of failures and frustrations.

The difficulty of acquiring skill in performance tuning using the traditional approaches is made significantly greater by the fact that tuning must be learned on a “broken” system. When databases are “broken,” tensions can be high and the stress real. That is not an optimum time to experiment with tools that may or may not produce results. Many newbies give up and choose a new career trajectory.

With each new version of Oracle, more and more metrics have been added. These metrics may be very helpful for diagnosing problems, but they are additions to what I have previously referred to as the AWR tsunami. If a tuner were to try to take in all the metrics in the AWR at once, it would be like trying to drink from a fire hose or worse. The additional metrics complicate even further the question, “Which metric do I consider and in what order?” It is just not humanly possible to consider the thousands of metrics available and so people choose their favorite. If human effort were the only alternative, the suggestion of considering all/most of the metrics instead of just a few hand-picked ones would be outlandish, but with the use of big data techniques, this is exactly what the dynamic process enables tuners to do and with very little effort. The dynamic process ushers in a technique that, unlike the small model approaches currently used, employs vast amounts of the available data. It is for this reason that I call the dynamic approach a complete model approach.