When I began tuning Oracle databases and applications back in the mid-1980s, I was using the methods that were state of the practice at that time and then moved to the traditional methods and tools mentioned in this article as they became available. For metrics-based analysis, I looked at data in individual tables/views—first in the v$ views, later the StatsPack views when they were introduced, and finally, beginning with version 10g, I considered metrics persisted in the AWR views. I researched and worked hard to understand the content and meaning of the various metrics. I relied heavily on input from users about when a problem occurred and what the symptoms were to make choices about where to look. Over time and with practice, I was able to improve my ability to quickly hone in on a problem by choosing the right metrics to consider. I was always concerned, however, that there were so many metrics being ignored/left out of the analysis. Although I felt confident in my ability to perform a root-cause analysis for the problems I addressed, I was certain that a more complete analysis would yield a better, more holistic picture of the database and what was going on “under the covers.”

When I began tuning Oracle databases and applications back in the mid-1980s, I was using the methods that were state of the practice at that time and then moved to the traditional methods and tools mentioned in this article as they became available. For metrics-based analysis, I looked at data in individual tables/views—first in the v$ views, later the StatsPack views when they were introduced, and finally, beginning with version 10g, I considered metrics persisted in the AWR views. I researched and worked hard to understand the content and meaning of the various metrics. I relied heavily on input from users about when a problem occurred and what the symptoms were to make choices about where to look. Over time and with practice, I was able to improve my ability to quickly hone in on a problem by choosing the right metrics to consider. I was always concerned, however, that there were so many metrics being ignored/left out of the analysis. Although I felt confident in my ability to perform a root-cause analysis for the problems I addressed, I was certain that a more complete analysis would yield a better, more holistic picture of the database and what was going on “under the covers.”

I knew what I really wanted/needed was a more scientific method of tuning that would use all the data/metrics and that would point me to the problem instead of having to predetermine where to look, but it took me time to discover a better method. Following is a short recollection of the epiphanies that led me to the development of the DOPA process as it is today. This approach accomplishes much of what I hoped to accomplish, although I am working continually to improve the underlying code.

Shortly after beginning to use AWR data for Oracle Performance Tuning, I discovered the DBA_HIST versions of the v$Sysmetric_Summary, v$SysStat, and v$Sys_Time_Model views [the Oracle documentation provides a good starting point for the reader who wants more information as to the content of these views and the meaning of the metrics they contain]. These views have hundreds of normalized metrics in them. I hand-picked several metrics (about two dozen) from these views since there were way too many to use all of them given my process at the time. The metrics I chose were those that aligned to things like CPU, IO, memory, network, workload, and REDO — classes of metrics I knew could be helpful for analyzing performance problems. I coded a program that captured the metrics I wanted to look at and pivoted them out into a multicolumn tabular format for each time interval in the analysis period under consideration. This enabled me to view only “relevant” metrics in such a way that abnormalities could be more easily identified.

I used this collection and presentation of metrics for diagnosing performance problems for many years. It provided results most of the time. Because my work involves support of over 300 production Oracle instances with more than 2500 applications, I had thousands of tuning opportunities and gained a tremendous amount of experience. I discovered that my ability to provide metrics-based root-cause analysis using these hand-picked metrics became quite good over time, but still, I knew that in overlooking so many other metrics, I might be missing essential observations. I knew my analysis was never as fully informed as it could be and that this lack of information might result in a poor problem hypothesis and/or a lower quality solution.

About two years ago, my son was completing his PhD work in Operations Research. I was privileged to be able to converse with him and his cohorts as they discussed the work they were doing in big data analytics. I began to wonder how I might be able to incorporate some of the techniques they used on big data to take advantage of all the data stored in the AWR for my analysis instead of only using the subset of data that my finite mind was able to analyze/comprehend. It is my experience that one can use big data analytical techniques such as embodied in the DOPA process against Oracle performance metrics, whether they be from a data warehouse application or an online transaction processing application that is running on the database.

One basic problem faced by performance tuners is a lack of knowledge about what is “normal” for a given metric; it’s hard to know when something is “out of whack” if you don’t know what values you should expect for the metric. So, I began my experimentation by calculating the normal range (using the rule of thumb: +/–2 standard deviations from the mean) of a given metric and “flagging” any metric value that was outside that normal range. I did this first for the hand-picked metrics I had been using, and I also included a few metrics I knew had absolute values I could easily identify. Examples of these known metric values included items such as the following:

- CPU utilization: For optimal response time, you want it to be below 65%.

- Single-block read latency: Above 20 milliseconds is high.

This experiment was successful; it demonstrated to me that establishing a normal range and then identifying metrics whose values fell outside the norm was an effective strategy for predicting where performance problems could be found. However, because the calculation had to be coded separately for every metric, scalability presented a major obstacle to its implementation. I could evaluate more metrics than previously, but individually coding the calculation for all the available metrics was not feasible given the many thousands of AWR metrics available.

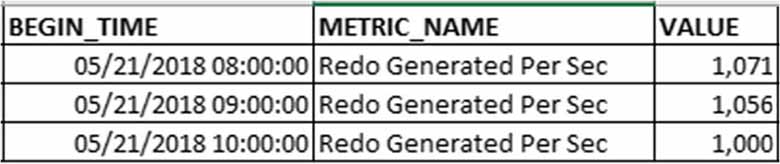

The next step in my experimentation was to organize the data into a normalized data structure prior to performing calculations instead of pivoting it out into a traditional short-wide structure with multiple columns per time interval as I had been doing. The normalized data type is a data structure referred to as key-value pair (KVP). In this format, each row of data represents the metric and its value for a given time instance. Figure 1 shows an example of what this looks like.

Figure 1 This is an example of the normalized key-value pair (KVP) data structure. Note that each row in the KVP structure represents the metric and its value for a given time instance.

I applied the statistical calculation for normal range to the KVP format and flagged anything outside of normal range. Eureka!

It was a simple progression to union in other metrics that already existed in a normalized format, such as sys time model. Then, it occurred to me that any metric could be normalized by simply unpivoting the multicolumn tabular (MCT) structure out into a KVP (tall, skinny format). This was the beginning of the DOPA process.

I began using my new toy right away to solve performance problems. As time permitted, I implemented additional metrics.

The entire process I’ve outlined thus far is accomplished using SQL. There is no fancy coding or special language required. Everyone who touches a database knows how to “talk SQL” to his database, and therefore this method is accessible to anyone who desires to learn it.

What I’ve relayed is the thought process and experimentation that led to the development of the DOPA process. At my son’s suggestion, I explored the area of taxonomies. Application of taxonomies to the DOPA process increased the usefulness of this process even further, so I’ve added this component to the process.

In its current form, I find the Dynamic Oracle Performance Analytics (DOPA) method to be extremely effective and powerful, but I see this process and its implementation as still in its nascent form. The DOPA process as currently implemented is powerful, but I think there is much more potential that can be achieved. The process I have outlined provides a useful tool as is and a framework on which further work can continue. I am persuaded that further efforts will not only enhance its usefulness for tuning but may lead to use cases far beyond tuning; that is to say, in a generic sense, the DOPA process is for anomaly detection, and this approach would work against any instrumented metrics to which you can talk SQL. For this reason, I consider the dynamic process a work in progress.

The DOPA process overcomes the major limitations of the small model approach which has been the sole tool of tuners to date.

- It uses a vast number of the available metrics, instead of a subset of metrics.

- It avoids user “bias” in the selection of metrics.

- Because of the large number of metrics, tuners can be more confident that all potentially problematic areas have a representation in the analysis.

- This larger set of evaluation/metrics enables a greater predictive accuracy.

Having discussed how the DOPA process evolved and why it is superior to the traditional/small model approach, I want to proceed to explain the actual process. The DOPA process described in my blog vastly expands the metrics that can be considered in the tuning analysis without overwhelming the performance tuner with irrelevant data. It does this by consistently applying the following steps, each of which will be examined and explained in further detail in my late blogs:

- Step 1: Gathering Problem Information

- Step 2: Data Preparation

- Step 3: Statistical Analysis

- Step 4: Feature Selection

- Step 5: Taxonomy

In short, the Dynamic Oracle Performance Analytics (DOPA) process computes statistical normal ranges for the instrumented metrics (11,000 metrics instrumented from AWR thus far) and uses those normal ranges to flag the unusual metric values from a problem interval (i.e., feature selection). When evaluating a problem interval, the DOPA process is used to report the flagged out of normal metrics, sorting those metrics with the most unusual values to the top. Essentially, the DOPA process I’ve developed uses a single SQL statement to refine the set of all metrics within the retention period down to the metrics which have unusual values (mostly high values) for a given problem interval. Thus, rather than an analyst pre-selecting the metrics they want to look at, the DOPA process dynamically determines which metrics are likely to be key influencers on the performance issue.

The metrics are instrumented/made available to the DOPA process by first normalizing them (i.e., unpivoting from a multicolumn table structure to a key-value pair structure and computing delta values for metric sources when the source values are persisted as cumulative) and then unioning all the normalized metrics into a single “view.” This “view” on instrumented metrics and their values is fed into the portions of the code that remove outliers (if present), calculates the normal ranges for each metric, and then does the flagging as described in the preceding text. The DOPA process also factors in the concept of a taxonomy (I built two taxonomies, an infrastructure taxonomy and an Oracle taxonomy) where metrics are assigned to higher level categories and subcategories within the taxonomy [the taxonomies help as they bundle the metrics into higher level categories for easier interpretation]. The flagged metrics can be reported in a variety of ways, but I use three views:

- Category Count View that counts flagged metrics by taxonomical category (this is helpful to provide a high-level view of the performance issue)

- Metrics Aggregate View which shows each flagged metric and their average values along with the normal ranges and other information that can be useful

- Metrics Time-Series View which I usually use to provide the time-series trend for a single metric

Since the DOPA process is looking at a very large number of dynamically flagged metrics, this can prevent the analyst from missing essential observations that would not be shown if metrics were pre-selected. Using the DOPA process, the analyst can develop a better hypothesis of the problem. Further, in many cases the set of expected values for a metric is not known by the DBA; the DOPA process provides metric value assessment in the process of computing the normal ranges.

Additionally, I have personally benefitted from using the DOPA process expanding my knowledge of tuning by doing additional research on a flagged metric that I have not yet seen. It is the task of the DOPA process user to exercise good judgment and decide what metrics or problem areas to focus on for further analysis.

To the database tuner, gathering information is a necessary first step in the process of identifying the root cause of a performance problem; actually, you can think of information gathering as a precondition. Database application users or support staff would like to simply tell us that “your” database isn’t working and expect that we will know how to find and fix the problem. Trying to address a performance incident in an information vacuum is just not reasonable; obtaining some basic information about the performance degradation users are experiencing is essential to discovering the root cause and devising a tuning plan. An accurate diagnosis depends to a large degree on an accurate description of the problem. At the very least, accurate information will speed the tuning process; user description of the problem is a piece of the puzzle, and without it, the tuner is greatly handicapped. Accurate information is also vital because you want to make certain that you are addressing the performance degradation the user is experiencing and not another problem that might present itself, but for which no degradation has been reported. For example, tuning the top SQL [as previously described] can easily miss the problem being experienced by the users; this issue might be worthy of your attention, but the user complaint should always be the priority.

Because users are not familiar with the workings of the database but interact with it through applications that run on the database, the information they provide when reporting a performance incident can be quite faulty. Often users will express the nature of the problem they are experiencing in the language of the tool they are using to access the database. For example, they might give an Informatics job name or an application log with no interpretation. As database analysts, we often don’t have experience interpreting application-specific messages/logs. We usually don’t have a mapping from Informatics job name to something that occurs on the database. To overcome this difficulty, we need to ask users to translate the job name to the SQL that’s running or at least provide a start/end time and an expectation of how long they think it should take because then we can look to see what was running during that time interval.

Sometimes users will have a performance problem but delay requesting help. If the delay is long, the problem interval may no longer be stored in the history data. In this case, it is necessary to wait until the problem reoccurs. In this situation, it’s important to educate the user about the importance of a timely request so that future problems can be addressed quickly.

Another problem I have encountered is that users will try to “self-diagnose” a problem and/or use incorrect terms. For example, I had a user report that the database was “deadlocked.” This term has a very specific meaning to a database professional, but when I questioned the user, it was clear that he was using the term to mean something very different (e.g., in this case, what he meant was “slow-running SQL”). This serves to show that you can’t always take the user’s description at face value or you may be running down rabbit holes.

These are just a few examples of the kinds of obstacles I have encountered in attempting to gain information from users about a particular problem. In order to overcome them and to standardize the information with which I begin my analysis, I have come up with a few basic questions for which I ask users to supply an answer if possible. I’ve listed those questions in the following text with a brief explanation about how this information may be relevant for the tuning process.

Has This Run Before?

It’s good to know if the poor-performing process is new. I see many cases where scalability testing was not done prior to production, and then when the application hits production scale for the first time, it falls over.

When Did the Problem Start?

This will tell me the time interval that I need to look at for my tuning analysis. Since I work on databases that run applications for users across the globe, I always ask for the database timestamp which includes the time zone.

What Was the Start and End Time of the Application Run?

When the time interval can be clearly identified (e.g., the problem occurred between 2p.m. and 3p.m. EST), it will necessitate a smaller analysis interval and hence a smaller data set than if a less-well-defined period is reported (e.g., the problem occurred yesterday). A more narrow interval will make it quicker and easier to find the problem.

What Database Were You Connected To?

It’s important to know the database name because in organizations that have many Oracle instances running, applications can be run on any of a number of different databases, and I need to be sure I am looking at the correct one.

What User Accounts Are They Using to Connect to the Database?

There can be many users accessing the database for different purposes, and not all will necessarily be experiencing problems. On a large database, there could be hundreds of connect accounts. If I target just those metrics related to the connect account for the user reporting a problem, it will greatly reduce the data set for analysis.

What SQL Do You Believe You Were Running?

Most of the time, users don’t know the answer to this question. But they might be able to provide some hints that are useful. For example, they might say something like, “We were updating the Study table.” That statement gives me two pieces of information that I can use. It tells me that the problem probably involves an update statement and that I’ll want to be looking for a SQL statement that touched the Study table.

What Is the Expected Duration?

I use the user’s report of expected duration as the performance requirement. This will be my target for the tuning efforts. Performance requirement is sometimes, but not usually, included in the documentation of an application. To overcome this oft-missing information, I ask the user for the expected duration based on his/her previous experience with the system, as a proxy. This is information they are always able to provide.

Did You Receive Any Oracle Error Messages?

Knowing if there is an Oracle error message and what those messages were is very important. An Oracle message can sometimes indicate that the problem is clearly not a performance issue. For example, a “duplicate value in index” message is not a performance problem; it indicates that the problem is with the application. In these problem types, the program abnormally ends with Oracle error message. Some Oracle error messages, however, do fall into the category of performance problems. For many error messages, ascertaining whether the error code is a result of performance issues will require further discovery. An example of this would be “TEMP tablespace” errors. A “TEMP tablespace error” could indicate a deficient amount of tablespace for the application which would be a configuration problem, but it might also indicate a problem with the execution plan which is causing too much tablespace to be used.

The questions I’ve noted thus far are part of a basic intake form that I ask clients to complete. In addition to this basic information, the following information may also be helpful if it can be obtained:

- sql_id / top level sql_id

- SQL text or part of the SQL text

- Machine/module/program/action

- Session_id, session_serial#,

What made you conclude that it is a database problem?

For some situations, it’s helpful to have run details for when the application was behaving normally.

Summary

To summarize, the purpose of the information gathering process is threefold:

- We want to be able to focus in on the performance degradation being experienced by the users.

- We want to confirm that we are looking for the problem in the right database.

- We want to be able to drive down to the session level as quickly as possible—this allows for the most efficient analysis because unless the analysis indicates there is a systemic problem, most of the tuning will be done at the SQL tuning level.

Before I began using the DOPA process, I would not even start to diagnose a problem until the users provided the information requested, or at least as much of it as they could. Since I have been using this tool, however, I have discovered that I can run the code and gain a very good idea of what the problem is with only the most basic information (database name and the approximate time interval). The DOPA process is so powerful and so capable that it can evaluate the available metrics and provide an operational profile of what is going on in the database, revealing what is unusual. The DOPA process puts so many of the pieces of the puzzle together that I am able to obtain a good notion of what the whole picture looks like even with only a scant problem description. Once the users provide the requested information, it’s just a matter of filling in the gaps and completing the analysis.

The ability of the DOPA process to work even when given a poor description of the problem does not make the data collection process obsolete. Rather, it allows me to take a first analysis step. Additional information from the client will help me focus in on the problem area.

I want to illustrate this with an example:

I had a user complain of a hung process. While I was waiting for them to provide the requested information, I ran my DOPA process using an approximated interval. I could see from the results that there was a high level of idle waits on the network, so I had a good notion of where the problem was occurring. When I received the information from the user and a more detailed description, I quickly discovered they had a SQL statement that was sitting there doing nothing, just waiting for the network [a large-scale network outage impacted many SQL statements that were using database links (database links can be used in a SQL statement to query data from a remote database, or several remote databases, in a single query)]. We killed the process and restarted it and performance returned to normal.

Let us now move on to data preparation.