It’s all too easy to dive straight into the nitty-gritty technical sides of monolithic decomposition - and this will be the focus of the rest of the book! But first we really need to explore several less technical issues. Where should you start the migration? How should you manage the change? How do you bring others along on the journey? And the important question to be asked early on - should you even use microservices in the first place?

Understanding the Goal

Microservices are not the goal. You don’t “win” by having microservices. Adopting a microservice architecture should be a conscious decision, one based on rational decision-making. You should be thinking of migrating to a microservice architecture in order to achieve something that you can’t currently achieve with your existing system architecture.

Without having a handle on what you are trying to achieve, how are you going to inform your decision-making process about what options you should take? What you are trying to achieve by adopting microservices will greatly change where you focus your time, and how you prioritize your efforts.

Having a clear goal will also help you avoid becoming a victim of analysis paralysis - being overburdened by choices. Without one, you also risk falling into a cargo cult mentality, just assuming that “If microservices are good for Netflix, they’re good for us!”

A Common Failing

Several years ago, I was running a microservices workshop at a conference. As I do with all my classes, I like to get a sense of why people are there and what they’re hoping to get out of the workshop. In this particular class several people had come from the same company, and I was curious to find out why their company had sent them. “Why are you at the workshop? Why are you interested in using microservices?” I asked one of them. Their answer? “We don’t know; our boss told us to come!” Intrigued, I probed further. “So, any idea why your boss wanted you here?” “Well, you could ask him - he’s sitting behind us,” the attendee responded. I switched my line of questioning to their boss, asking the same question, “So, why are you looking to use microservices?” The boss’s response? “Our CTO said we’re doing microservices, so I thought we should find out what they are!”

This true story, while at one level funny, is unfortunately all too common. I encounter many teams who have made the decision to adopt a microservice architecture without really understanding why, or what they are hoping to achieve.

The problems with not having a clear view as to why you are using microservices are varied. A migration can require significant investment, either directly, in terms of adding more people or money, or indirectly, in terms of prioritizing the transition work over and above adding features. This is further complicated by the fact that it can take a while to see the benefits of a transition. Occasionally this leads to the situation where people are a year or more into a transition but can’t remember why they started it in the first place. It’s not simply a matter of the sunk cost fallacy; they literally don’t know why they’re doing the work.

At the same time, I get asked by people to share the return on investment (ROI) of moving to a microservice architecture. Some people want hard facts and figures to back up why they should even consider this approach. The reality is that quite aside from the fact that detailed studies on these sorts of things are few and far between, even when they do exist, the observations from such studies are rarely transferable because of the different contexts people might find themselves in.

So where does that leave us? Guesswork? Well, no. I am convinced that we can and should have better studies into the efficacy of our development, technology, and architecture choices. Some work is already being done to this end in the form of things like “The State of DevOps Report”, but that looks at architecture only in passing. In lieu of these rigorous studies, we should at least strive to apply more critical thinking to our decision-making, and at the same time embrace more of an experimental frame of mind.

You need a clear understanding as to what you are hoping to achieve. No ROI calculation can be done without properly assessing what the return is that you are looking for. We need to focus on the outcomes we hope to achieve, and not slavishly, dogmatically stick to a single approach. We need to think clearly and sensibly about the best way to get what we need, even if it means ditching a lot of work, or going back to the good old-fashioned boring approach.

Three Key Questions

When working with an organization to help them understand if they should consider adopting a microservice architecture, I tend to ask the same set of questions:

What are you hoping to achieve?

This should be a set of outcomes that are aligned to what the business is trying to achieve, and can be articulated in a way that describes the benefit to the end users of the system.

Have you considered alternatives to using microservices?

As we’ll explore later, there are often many other ways to achieve some of the same benefits that microservices bring. Have you looked at these things? If not, why not? Quite often you can get what you need by using a much easier, more boring technique.

How will you know if the transition is working?

If you decide to embark on this transition, how will you know if you’re going in the right direction? We’ll come back to this topic at the end of the article.

More than once I’ve found that asking these questions is enough for companies to think again regarding whether to go any further with a microservice architecture.

Why Might You Choose Microservices?

I can’t define the goals you may have for your company. You know better the aspirations of your company and the challenges you are facing. What I can outline are the reasons that often get stated as to why microservices are being adopted by companies all over the world. In the spirit of honesty, I’ll also outline other ways you could potentially achieve these same outcomes using different approaches.

Improve Team Autonomy

Whatever industry you operate in, it is all about your people, and catching them doing things right, and providing them with the confidence, the motivation, the freedom and desire to achieve their true potential.

John Timpson

Many organizations have shown the benefits of creating autonomous teams. Keeping organizational groups small, allowing them to build close bonds and work effectively together without bringing in too much bureaucracy, has helped many organizations grow and scale more effectively than some of their peers. Gore has found great success by making sure none of its business units ever grows beyond 150 people, so that everyone knows each other. For these smaller business units to work, they have to be given power and responsibility to work as autonomous units.

Timpsons, a highly successful UK retailer, has achieved massive scale by empowering its workforce, reducing the need for central functions and allowing the local stores to make decisions for themselves, things like giving them power over how much to refund unhappy customers. Now chairman of the company, John Timpson was famous for scrapping internal rules and replacing them with just two:

- Look the part and put the money in the till.

- You can do anything else to best serve customers.

Autonomy works at the smaller scale too, and most modern organizations I work with are looking to create more autonomous teams within their organizations, often trying to copy models from other organizations like Amazon’s two-pizza team model, or the Spotify model.1

If done right, team autonomy can empower people, help them step up and grow, and get the job done faster. When teams own microservices, and have full control over those services, they increase the amount of autonomy they can have within a larger organization.

How else could you do this?

Autonomy - distribution of responsibility - can play out in many ways, and working out how you can push more responsibility into the teams doesn’t require a shift in architecture. Essentially, it’s a process of identifying which responsibilities can be pushed into the teams. Giving ownership of parts of the codebase to different teams could be one answer (a modular monolith could still benefit you here). This could also be done by identifying people empowered to make decisions for parts of the codebase on functional grounds (e.g., Ryan knows display ads best, so he’s responsible for that; Jane knows the most about tuning our query performance, so run anything in that area past her first).

Improving autonomy can also play out in simply not having to wait for other people to do things for you, so adopting self-service approaches to provisioning machines or environments can be a huge enabler, avoiding the need for central operations teams to have to field tickets for day-to-day activities.

Reduce Time to Market

By being able to make and deploy changes to individual microservices, and deploy these changes without having to wait for coordinated releases, we have the potential to release functionality to our customers more quickly. Being able to bring more people to bear on a problem is also a factor - we’ll cover that shortly.

How else could you do this?

Well, where do we start? There are so many variables that go into play when considering how to ship software more quickly. I always suggest you carry out some sort of path-to-production modeling exercise, as it may help show that the biggest blocker isn’t what you think.

I remember on one project many years ago at a large investment bank, we had been brought in to help speed up delivery of software. “The developers take too long to get things into production!” we were told. One of my colleagues, Kief Morris, took the time to map out all the stages involved in delivering software, looking at the process from the moment a product owner comes up with an idea to the point where that idea actually got into production.

He quickly identified that it took around six weeks on average from when a developer started on a piece of work to it being deployed into a production environment. We felt that we could shave a couple of weeks off of this process through the use of suitable automation, as manual processes were involved. But Kief found a much bigger problem in the path to production - often it took over 40 weeks for the ideas to get from the product owner to the point where developers could even start on the work. By focusing our efforts on improving that part of the process, we’d help the client much more in improving the time to market for new functionality.

So think of all the steps involved with shipping software. Look at how long each step takes (both elapsed time and busy time), and highlight the pain points along the way. After all of that, you may well find that microservices could be part of the solution, but you’ll probably find many other things you could try in parallel.
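A path-to-production model can start as a very small piece of arithmetic. The sketch below is only an illustration: the elapsed durations are rounded from the bank example above, while the busy-time figures, the `Step` type, and the helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    elapsed_weeks: float  # calendar time between entering and leaving the step
    busy_weeks: float     # time during which someone was actively working


def slowest_step(steps):
    """Return the step with the largest elapsed time."""
    return max(steps, key=lambda s: s.elapsed_weeks)


def waiting_weeks(step):
    """Elapsed time during which nobody was working on the item."""
    return step.elapsed_weeks - step.busy_weeks


# Elapsed figures are rounded from the example above; busy figures are made up.
steps = [
    Step("idea to development start", elapsed_weeks=40, busy_weeks=4),
    Step("development start to production", elapsed_weeks=6, busy_weeks=4),
]

total = sum(s.elapsed_weeks for s in steps)
worst = slowest_step(steps)
print(f"total lead time: {total} weeks")
print(f"biggest blocker: {worst.name} ({worst.elapsed_weeks / total:.0%} of lead time)")
print(f"of which waiting: {waiting_weeks(worst)} weeks")
```

Even with invented busy-time numbers, laying the steps out like this makes it obvious where the bulk of the lead time sits, and whether that time is work or waiting.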

Scale Cost-Effectively for Load

Breaking our processing into individual microservices allows these processes to be scaled independently. This means we can hopefully also scale cost-effectively - we need to scale up only those parts of our processing that are currently constraining our ability to handle load. It also follows that we can then scale down those microservices that are under less load, perhaps even turning them off when not required. This is in part why so many companies that build SaaS products adopt microservice architecture - it gives them more control over operational costs.
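To make the cost argument concrete, here is a deliberately simplified sketch. Every number, component name, and function in it is hypothetical, and it assumes that each copy of a monolith carries the resource footprint of all of its components.

```python
import math

# Hypothetical figures for illustration only: resource units per instance,
# requests per second one instance can serve, and current load per component.
COMPONENTS = {
    "search":   {"units": 4, "capacity_rps": 100, "load_rps": 800},
    "checkout": {"units": 2, "capacity_rps": 100, "load_rps": 100},
    "reports":  {"units": 6, "capacity_rps": 100, "load_rps": 50},
}


def copies_needed(component):
    """Instances required for one component to serve its current load."""
    return math.ceil(component["load_rps"] / component["capacity_rps"])


def monolith_units(components):
    """Every copy of the monolith carries all components, so the busiest
    component dictates how many full copies must run."""
    copies = max(copies_needed(c) for c in components.values())
    units_per_copy = sum(c["units"] for c in components.values())
    return copies * units_per_copy


def independent_units(components):
    """Each component is scaled only as far as its own load requires."""
    return sum(copies_needed(c) * c["units"] for c in components.values())


print("monolith:", monolith_units(COMPONENTS), "units")
print("independent:", independent_units(COMPONENTS), "units")
```

The model ignores the extra overhead a distributed system brings (network calls, per-service baseline infrastructure), so treat the gap it shows as an upper bound on the saving rather than a prediction.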

How else could you do this?

Here we have a huge number of alternatives to consider, most of which are easier to experiment with before committing to a microservices-oriented approach. We could just get a bigger box for a start - if you’re on a public cloud or other type of virtualized platform, you could simply provision bigger machines to run your process on. This “vertical” scaling obviously has its limitations, but for a quick short-term improvement, it shouldn’t be dismissed outright.

Traditional horizontal scaling of the existing monolith - basically running multiple copies - could prove to be highly effective. Running multiple copies of your monolith behind a load-distribution mechanism like a load balancer or a queue could allow for simple handling of more load, although this may not help if the bottleneck is in the database, which, depending on the technology, may not support such a scaling mechanism. Horizontal scaling is an easy thing to try, and you really should give it a go before considering microservices, as it will likely be quick to assess its suitability, and has far fewer downsides than a full-blown microservice architecture.

You could also replace technology being used with alternatives that can handle load better. This is typically not a trivial undertaking, however - consider the work to port an existing program over to a new type of database or programming language. A shift to microservices may actually make changing technology easier, as you can change the technology being used only inside the microservices that require it, leaving the rest untouched and thereby reducing the impact of the change.

Improve Robustness

The move from single-tenant software to multitenant SaaS applications means the impact of system outages can be significantly more widespread. The expectations of our customers for their software to be available, as well as the importance of software in their lives, have increased. By breaking our application into individual, independently deployable processes, we open up a host of mechanisms to improve the robustness of our applications.

By using microservices, we are able to implement a more robust architecture because functionality is decomposed - that is, an impact on one area of functionality need not bring down the whole system. We also get to focus our time and energy on those parts of the application that most require robustness - ensuring critical parts of our system remain operational.

Resilience Versus Robustness

When we want to improve a system’s ability to avoid outages, handle failures gracefully when they occur, and recover quickly when problems happen, we often talk about resilience. Much work has been done in the field of what is now known as resilience engineering, looking at the topic as a whole as it applies to all fields, not just computing. The model for resilience pioneered by David Woods looks more broadly at the concept of resilience, and points to the fact that being resilient isn’t as simple as we might first think, by separating out our ability to deal with known and unknown sources of failure, among other things.2

John Allspaw, a colleague of David Woods, helps distinguish between the concepts of robustness and resilience. Robustness is the ability to have a system that is able to react to expected variations. Resilience is having an organization capable of adapting to things that haven’t been thought of, which could well include creating a culture of experimentation through things like chaos engineering. For example, we are aware that a specific machine could die, so we might bring redundancy into our system by load balancing an instance. That is an example of addressing robustness. Resiliency is the process of an organization preparing itself for the fact that it cannot anticipate all potential problems.

An important consideration here is that microservices do not necessarily give you robustness for free. Rather, they open up opportunities to design a system in such a way that it can better tolerate network partitions, service outages, and the like. Just spreading your functionality across multiple separate processes and separate machines does not guarantee improved robustness; quite the contrary - it may just increase your surface area of failure.
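The "surface area of failure" point can be illustrated with a little probability. The sketch below assumes, purely for illustration, that a request must pass through every service in a chain, that the services fail independently, and that there are no retries or fallbacks; the function name and the figures are hypothetical.

```python
import math


def chain_availability(availabilities):
    """Availability of a request path that needs every service to be up,
    assuming the services fail independently of one another."""
    return math.prod(availabilities)


single_process = chain_availability([0.999])
five_services = chain_availability([0.999] * 5)

print(f"one process:   {single_process:.4f}")
print(f"five services: {five_services:.4f}")
```

Under these assumptions the chain is less available than any one of its parts, which is why the robustness gains have to come from deliberate design (isolating failures, degrading gracefully) rather than from the act of splitting alone.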

How else could you do this?

By running multiple copies of your monolith, perhaps behind a load balancer or another load distribution mechanism like a queue, we add redundancy to our system. We can further improve robustness of our applications by distributing instances of our monolith across multiple failure planes (e.g., don’t have all machines in the same rack or same data center).
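The effect of redundancy can be estimated in the same back-of-the-envelope way. This sketch assumes each copy of the monolith fails independently, which is exactly the property that spreading copies across racks or data centers is trying to approximate; the function name and numbers are hypothetical.

```python
def redundant_availability(copy_availability, copies):
    """Probability that at least one of several identical copies is up,
    assuming the copies fail independently."""
    all_down = (1 - copy_availability) ** copies
    return 1 - all_down


for n in (1, 2, 3):
    print(n, "copies:", redundant_availability(0.99, n))
```

If the copies share a rack, a power supply, or a database, their failures are correlated and the real figure will be lower than this model suggests.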

Investment in more reliable hardware and software could likewise yield benefits, as could a thorough investigation of existing causes of system outages. I’ve seen more than a few production issues caused by an overreliance on manual processes, for example, or people “not following protocol,” which often means an innocent mistake by an individual can have significant impacts. British Airways suffered a massive outage in 2017, causing all of its flights into and out of London Heathrow and Gatwick to be canceled. This problem was apparently inadvertently triggered by a power surge resulting from the actions of one individual. If the robustness of your application relies on human beings never making a mistake, you’re in for a rocky ride.

Scale the Number of Developers

We’ve probably all seen the problem of throwing developers at a project to try to make it go faster - it so often backfires. But some problems do need more people to get them done. As Frederick Brooks outlines in his now seminal book, The Mythical Man-Month, adding more people will continue to improve how quickly you can deliver only if the work itself can be partitioned into separate pieces with limited interactions between them. He gives the example of harvesting crops in a field - it’s a simple task to have multiple people working in parallel, as the work being done by each harvester doesn’t require interactions with other people. Software rarely works like this, as the work being done isn’t all the same, and often the output from one piece of work is needed as the input for another.

With clearly identified boundaries, and an architecture that has focused around ensuring our microservices limit their coupling with each other, we come up with pieces of code that can be worked on independently. Therefore, we hope we can scale the number of developers by reducing the delivery contention.

To successfully scale the number of developers you bring to bear on the problem requires a good degree of autonomy between the teams themselves. Just having microservices isn’t going to be good enough. You’ll have to think about how the teams align to the service ownership, and what coordination between teams is required. You’ll also need to break up work in such a way that changes don’t need to be coordinated across too many services.

How else could you do this?

Microservices work well for larger teams as the microservices themselves become decoupled pieces of functionality that can be worked on independently. An alternative approach could be to consider implementing a modular monolith: different teams own each module, and as long as the interface with other modules remains stable, they could continue to make changes in isolation.

This approach is somewhat limited, though. We still have some form of contention between the different teams, as the software is still all packaged together, so the act of deployment still requires coordination between the appropriate parties.

Embrace New Technology

Monoliths typically limit our technology choices. We normally have one programming language on the backend, making use of one programming idiom. We’re fixed to one deployment platform, one operating system, one type of database. With a microservice architecture, we get the option to vary these choices for each service.

By isolating the technology change in one service boundary, we can understand the benefits of the new technology in isolation, and limit the impact if the technology turns out to have issues.

In my experience, while mature microservice organizations often limit how many technology stacks they support, they are rarely homogeneous in the technologies in use. The flexibility in being able to try new technology in a safe way can give them competitive advantage, both in terms of delivering better results for customers and in helping keep their developers happy as they get to master new skills.

How else could you do this?

If we still continue to ship our software as a single process, we do have limits on which technologies we can bring in. We could safely adopt new languages on the same runtime, of course - the JVM as one example can happily host code written in multiple languages within the same running process. New types of databases become more problematic, though, as this implies some sort of decomposition of a previously monolithic data model to allow for an incremental migration, unless you’re going for a complete, immediate switchover to a new database technology, which is complicated and risky.

If the current technology stack is considered a “burning platform,” you may have no choice other than to replace it with a newer, better-supported technology stack.5 Of course, there is nothing to stop you from incrementally replacing your existing monolith with the new monolith - patterns can work well for that.

Reuse?

Reuse is one of the most oft-stated goals for microservice migration, and in my opinion is a poor goal in the first place. Fundamentally, reuse is not a direct outcome people want. Reuse is something people hope will lead to other benefits. We hope that through reuse, we may be able to ship features more quickly, or perhaps reduce costs, but if those things are your goals, track those things instead, or you may end up optimizing the wrong thing.

To explain what I mean, let’s take a deeper look into one of the usual reasons reuse is chosen as an objective. We want to ship features more quickly. We think that by optimizing our development process around reusing existing code, we won’t have to write as much code - and with less work to do, we can get our software out the door more quickly, right? But let’s take a simple example. The Customer Services team in Music Corp needs to format a PDF in order to provide customer invoices. Another part of the system already handles PDF generation: we produce PDFs for printing purposes in the warehouse, to produce packing slips for orders shipped to customers and to send order requests to suppliers.

Following the goal of reuse, our team may be directed to use the existing PDF generation capability. But that functionality is currently managed by a different team, in a different part of the organization. So now we have to coordinate with them to make the required changes to support our features. This may mean we have to ask them to do the work for us, or perhaps we have to make the changes ourselves and submit a pull request (assuming our company works like that). Either way, we have to coordinate with another part of the organization to make the change.

We could spend the time to coordinate with other people and get the changes made, all so we could roll out our change. But we work out that we could actually just write our own implementation much faster and ship the feature to the customer more quickly than if we spend the time to adapt the existing code. If your actual goal is faster time to market, this may be the right choice. But if you optimize for reuse hoping you get faster time to market, you may end up doing things that slow you down.

Measuring reuse in complex systems is difficult, and as I’ve outlined, it is typically something we’re doing to achieve something else. Spend your time focusing on the actual objective instead, and recognize that reuse may not always be the right answer.

When Might Microservices Be a Bad Idea?

We’ve spent ages exploring the potential benefits of microservice architectures. But in a few situations I recommend that you not use microservices at all. Let’s look at some of those situations now.

Unclear Domain

Getting service boundaries wrong can be expensive. It can lead to a larger number of cross-service changes, overly coupled components, and in general could be worse than just having a single monolithic system. In Building Microservices, I shared the experiences of the SnapCI product team at ThoughtWorks. Despite knowing the domain of continuous integration really well, their initial stab at coming up with service boundaries for their hosted-CI solution wasn’t quite right. This led to a high cost of change and high cost of ownership. After several months fighting this problem, the team decided to merge the services back into one big application. Later, when the feature-set of the application had stabilized somewhat and the team had a firmer understanding of the domain, it was easier to find those stable boundaries.

SnapCI was a hosted continuous integration and continuous delivery tool. The team had previously worked on another similar tool, Go-CD, a now open source continuous delivery tool that can be deployed locally rather than being hosted in the cloud. Although there was some code reuse early on between the SnapCI and Go-CD projects, in the end SnapCI turned out to be a completely new codebase. Nonetheless, the previous experience of the team in the domain of CD tooling emboldened them to move more quickly in identifying boundaries, and building their system as a set of microservices.

After a few months, though, it became clear that the use cases of SnapCI were subtly different enough that the initial take on the service boundaries wasn’t quite right. This led to lots of changes being made across services, and an associated high cost of change. Eventually, the team merged the services back into one monolithic system, giving them time to better understand where the boundaries should exist. A year later, the team was then able to split the monolithic system into microservices, whose boundaries proved to be much more stable. This is far from the only example of this situation I have seen. Prematurely decomposing a system into microservices can be costly, especially if you are new to the domain. In many ways, having an existing codebase you want to decompose into microservices is much easier than trying to go to microservices from the beginning.

If you feel that you don’t yet have a full grasp of your domain, resolving that before committing to a system decomposition may be a good idea. (Yet another reason to do some domain modeling! We’ll discuss that more shortly.)

Startups

This might seem a bit controversial, as so many of the organizations famous for their use of microservices are considered startups, but in reality many of these companies, including Netflix, Airbnb, and the like, moved toward microservice architecture only later in their evolution. Microservices can be a great option for “scale-ups” - startup companies that have established at least the fundamentals of their product/market fit, and are now scaling to increase (or likely simply achieve) profitability.

Startups, as distinct from scale-ups, are often experimenting with various ideas in an attempt to find a fit with customers. This can lead to huge shifts in the original vision for the product as the space is explored, resulting in huge shifts in the product domain.

A real startup is likely a small organization with limited funding, which needs to focus all its attention on finding the right fit for its product. Microservices primarily solve the sorts of problems startups have once they’ve found that fit with their customer base. Put a different way, microservices are a great way of solving the sorts of problems you’ll get once you have initial success as a startup. So focus initially on being a success! If your initial idea is bad, it doesn’t matter whether you built it with microservices or not.

It is much easier to partition an existing, “brownfield” system than to do so up front with a new, greenfield system that a startup would create. You have more to work with. You have code you can examine, and you can speak to people who use and maintain the system. You also know what good looks like - you have a working system to change, making it easier for you to know when you may have made a mistake or been too aggressive in your decision-making process.

You also have a system that is actually running. You understand how it operates and how it behaves in production. Decomposition into microservices can cause some nasty performance issues, for example, but with a brownfield system you have a chance to establish a healthy baseline before making potentially performance-impacting changes.

I’m certainly not saying never do microservices for startups, but I am saying that these factors mean you should be cautious. Only split around those boundaries that are clear at the beginning, and keep the rest on the more monolithic side. This will also give you time to assess how mature you are from an operational point of view - if you struggle to manage two services, managing ten is going to be difficult.

Customer-Installed and Managed Software

If you create software that is packaged and shipped to customers who then operate it themselves, microservices may well be a bad choice. When you migrate to a microservice architecture, you push a lot of complexity into the operational domain. Previous techniques you used to monitor and troubleshoot your monolithic deployment may well not work with your new distributed system. Teams that undertake a migration to microservices can offset these challenges by adopting new skills, or perhaps adopting new technology - but these aren’t things you can typically expect of your end customers.

Typically, with customer-installed software, you target a specific platform. For example, you might say “requires Windows Server 2016” or “needs macOS 10.12 or above.” These are well-defined target deployment environments, and you are quite possibly packaging your monolithic software using mechanisms that are well understood by people who manage these systems (e.g., shipping Windows services, bundled up in a Windows Installer package). Your customers are likely familiar with purchasing and running software in this way.

Imagine the trouble you’d have if you went from giving them one process to run and manage to giving them 10 or 20 or, even more aggressively, expecting them to run your software on a Kubernetes cluster or similar.

The reality is that you cannot expect your customers to have the skills or platforms available to manage microservice architectures. Even if they do, they may not have the same skills or platform that you require. There is a large variation between Kubernetes installs, for example.

Not Having a Good Reason!

And finally, we have the biggest reason not to adopt microservices, and that is if you don’t have a clear idea of what exactly it is that you’re trying to achieve. As we’ll explore, the outcome you are looking for from your adoption of microservices will define where you start that migration and how you decompose the system. Without a clear vision of your goals, you are fumbling around in the dark. Doing microservices just because everyone else is doing it is a terrible idea.

Trade-Offs

So far, I’ve outlined the reasons people may want to adopt microservices in isolation, and laid out (however briefly) the case for also considering other options. However, in the real world, it’s common for people to be trying to change not one thing, but many things, all at once. This can lead to confusing priorities that can quickly increase the amount of change needed and delay seeing any benefits.

It all starts innocently enough. We need to rearchitect our application to handle a significant increase in traffic, and decide microservices are the way forward. Someone else comes up and says, “Well, if we’re doing microservices, we can make our teams more autonomous at the same time!” Another person chimes in, “And this gives us a great chance to try out Kotlin as a programming language!” Before you know it, you have a massive change initiative that is attempting to roll out team autonomy, scale the application, and bring in new technology all at once, along with other things people have tacked on to the program of work for good measure.

Moreover, in this situation, microservices become locked in as the approach. If you focus on just the scaling aspect, during your migration you may come to realize that you’d be better off just horizontally scaling out your existing monolithic application. But doing that won’t help the new secondary goals of improving team autonomy or bringing in Kotlin as a programming language.

It is important, therefore, to separate the core driver behind the shift from any secondary benefits you might also like to achieve. In this case, handling improved scale of the application is the most important thing - work done to make progress on the other secondary goals (like improving team autonomy) may be useful, but if they get in the way or detract from the key objective, they should take a back seat.

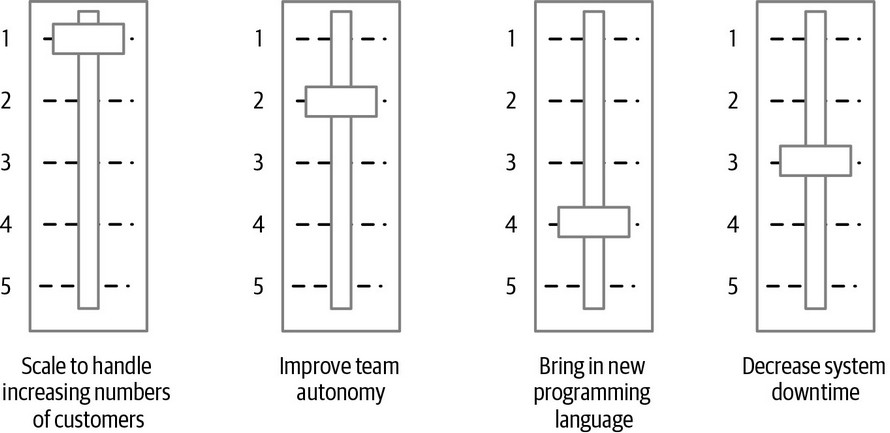

The important thing here is to recognize that some things are more important than others. Otherwise, you can’t properly prioritize. One exercise I like here is to think of each of your desired outcomes as a slider. Each slider starts in the middle. As you make one thing more important, you have to drop the priority of another - you can see an example of this in Figure 1. This clearly articulates, for example, that while you’d like to make polyglot programming easier, it’s not as important as ensuring the application has improved resiliency. When it comes to working out how you’re going to move forward, having these outcomes clearly articulated and ranked can make decision-making much easier.

Figure 1. Using sliders to balance the competing priorities you may have

These relative priorities can change (and should change as you learn more). But they can help guide decision-making. If you want to distribute responsibility, pushing more power into newly autonomous teams, simple models like this can help inform their local decision-making and help them make better choices that line up with what you’re trying to achieve across the company.

Taking People on the Journey

I am frequently asked, “How can I sell microservices to my boss?” This question typically comes from a developer - someone who has seen the potential of microservice architecture and is convinced it’s the way forward.

Often, when people disagree about an approach, it’s because they may have different views of what you are trying to achieve. It’s important that you and the other people you need to bring on the journey with you have a shared understanding about what you’re trying to achieve. If you’re on the same page about that, then at least you know you are disagreeing only about how to get there. So it comes back to the goal again - if the other folks in the organization share the goal, they are much more likely to be onboard for making a change.

In fact, it’s worth exploring in more detail how you can both help sell the idea and make it happen, by looking for inspiration at one of the better-known models for helping make organizational change. Let’s take a look at that next.

Changing Organizations

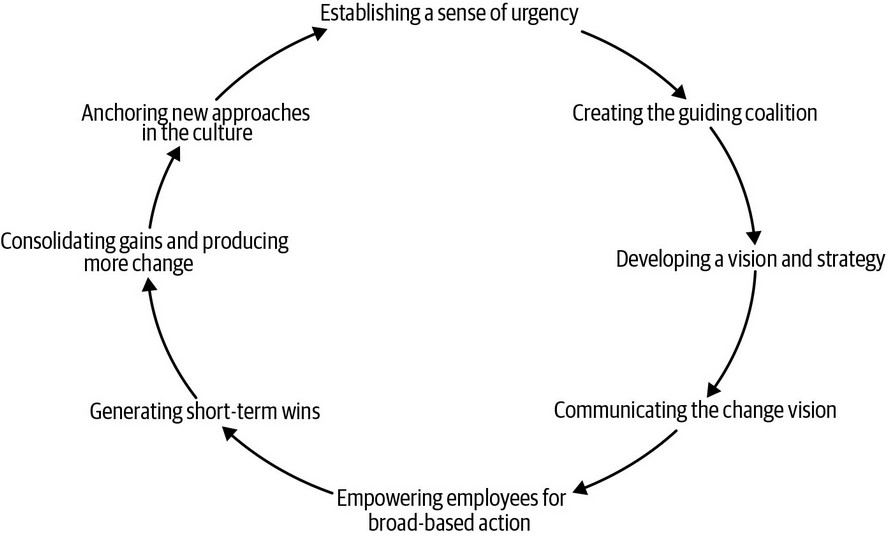

Dr. John Kotter’s eight-step process for implementing organizational change is a staple of change managers the world over, partly as it does a good job of distilling the required activities into discrete, comprehensible steps. It’s far from the only such model out there, but it’s the one I find the most helpful.

A wealth of content out there describes the process, outlined in Figure 2, so I won’t dwell on it too much here. However, it is worth briefly outlining the steps and thinking about how they may help us if we’re considering adopting a microservice architecture.

Before I outline the process here, I should note that this model for change is typically used to institute large-scale organizational shifts in behavior. As such, it may well be huge overkill if all you’re trying to do is bring microservices to a team of 10 people. Even in these smaller-scoped settings, though, I’ve found this model to be useful, especially the earlier steps.

Figure 2. Kotter’s eight-step process for making organizational change

Establishing a Sense of Urgency

People may think your idea of moving to microservices is a good one. The problem is that your idea is just one of many good ideas that are likely floating around the organization. The trick is to help people understand that now is the time to make this particular change.

Looking for “teachable” moments here can help. Sometimes the right time to bolt the stable door is after the horse has bolted, because people suddenly realize that horses running off is a thing they need to think about, and now they realize they even have a door, and “Oh look, it can be closed and everything!” In the moments after a crisis has been dealt with, you have a brief moment in people’s consciousness where pushing for change can work. Wait too long, and the pain - and causes of that pain - will diminish.

Remember, what you’re trying to do is not say, “We should do microservices now!” You’re trying to share a sense of urgency about what you want to achieve - and as I’ve stated, microservices are not the goal!

Creating the Guiding Coalition

You don’t need everyone on board, but you need enough to make it happen. You need to identify the people inside your organization who can help you drive this change forward. If you’re a developer, this probably starts with your immediate colleagues in your team, and perhaps someone more senior - it might be a tech lead, an architect, or a delivery manager. Depending on the scope of the change, you may not need loads of people involved. If you’re just changing how your team does something, then ensuring you have enough air cover may be enough. If you’re trying to transform the way software is developed in your company, you may need someone at the exec level to be championing this (perhaps a CIO or CTO).

Getting people on board to help make this change may not be easy. No matter how good you think the idea is, if the person has never heard of you, or never worked with you, why should they back your idea? Trust is earned. Someone is much more likely to back your big idea if they’ve already worked with you on smaller, quick wins.

It’s important here that you have involvement of people outside software delivery. If you are already working in an organization where the barriers between “IT” and “The Business” have been broken down, this is probably OK. On the other hand, if these silos still exist, you may need to reach across the aisle to find a supporter elsewhere. Of course, if your adoption of microservice architecture is focused on solving problems the business is facing, this will be a much easier sell.

The reason you need involvement from people outside the IT silo is that many of the changes you make can potentially have significant impacts on how the software works and behaves. You’ll need to make different trade-offs around how your system behaves during failure modes, for example, or how you tackle latency. For example, caching data to avoid making a service call is a good way to ensure you reduce the latency of key operations in a distributed system, but the trade-off is that this can result in users of your system seeing stale data. Is that the right thing to do? You’ll probably have to discuss that with your users - and that will be a tough discussion to have if the people who champion the cause of your users inside your organization don’t understand the rationale behind the change.
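To make the caching trade-off concrete, here is a minimal sketch of a time-based cache in front of a service call. Everything in it is an assumption for illustration: the `TtlCache` class, the `fetch_stock` stand-in for a remote stock service, and the 30-second lifetime are hypothetical, not part of any real system described here. It shows the latency win (the second lookup makes no service call) alongside the cost (that same lookup returns stale data).

```python
import time
from typing import Callable, Dict, List, Tuple


class TtlCache:
    """Caches results of a (hypothetical) remote call for a fixed time."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the example can control time
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> str:
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]  # fast path: no service call, possibly stale
        value = self._fetch(key)  # slow path: call the service
        self._entries[key] = (now, value)
        return value


# Stand-in for a remote stock service; the data is made up.
stock_levels = {"album-42": "5 in stock"}
calls: List[str] = []


def fetch_stock(sku: str) -> str:
    calls.append(sku)
    return stock_levels[sku]


fake_now = [0.0]
cache = TtlCache(fetch_stock, ttl_seconds=30, clock=lambda: fake_now[0])

first = cache.get("album-42")         # service call
stock_levels["album-42"] = "sold out"
fake_now[0] = 10.0
second = cache.get("album-42")        # served from cache: stale answer
fake_now[0] = 45.0
third = cache.get("album-42")         # entry expired: service call again
```

Whether ten seconds of "5 in stock" for a sold-out album is acceptable is exactly the kind of question that needs an answer from the people who represent your users, not just from the delivery team.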

Developing a Vision and Strategy

This is where you get your folks together and agree on what change you’re hoping to bring (the vision) and how you’re going to get there (the strategy). Visions are tricky things. They need to be realistic yet aspirational, and finding the balance between the two is key. The more widely shared the vision, the more work will need to go into packaging it up to get people onboard. But a vision can be a vague thing and still work with smaller teams (“We’ve got to reduce our bug count!”).

The vision is mostly about the goal - what it is you’re aiming for. The strategy is about the how. Microservices are going to achieve that goal (you hope; they’ll be part of your strategy). Remember that your strategy may change. Being committed to a vision is important, but being overly committed to a specific strategy in the face of contrary evidence is dangerous, and can lead you into the sunk cost fallacy.

Communicating the Change Vision

Having a big vision can be great, but don’t make it so big that people won’t believe it’s possible. I saw a statement put out by the CEO of a large organization recently that said (paraphrasing somewhat):

In the next 12 months, we will reduce costs and deliver faster by moving to microservices and embracing cloud-native technologies.

Unnamed CEO

None of the staff in the company I spoke to believed anything of the sort was possible. Part of the problem in the preceding statement is the potentially contradictory goals that are outlined - a wholesale change of how software is delivered may help you deliver faster, but doing that in 12 months isn’t going to reduce costs, as you’ll likely have to bring in new skills, and you’ll probably suffer a negative impact in productivity until the new skills are bedded in. The other issue is the time frame outlined here. In this particular organization, the speed of change was slow enough that a 12-month goal was considered laughable. So whatever vision you share has to be somewhat believable.

You can start small when it comes to sharing a vision. I was part of a program called the “Test Mercenaries” to help roll out test automation practices at Google many years ago. The reason that program even got started was because of previous endeavors by what we would now call a community of practice (a “Grouplet” in Google nomenclature) to help share the importance of automated testing. One of the program’s early efforts in sharing information about testing was an initiative called “Testing on the Toilet.” It consisted of brief, one-page articles pinned to the back of the toilet doors so folks could read them “at their leisure”! I’m not suggesting this technique would work everywhere, but it worked well at Google - and it was a really effective way of sharing small, actionable advice.

One last note here. There is an increasing trend away from face-to-face communication in favor of systems like Slack. When it comes to sharing important messages of this sort, face-to-face communication (ideally in person, but perhaps by video) will be significantly more effective. It makes it easier to understand people’s reaction to hearing these ideas, and helps to calibrate your message and avoid misunderstandings. Even if you may need other forms of communication to broadcast your vision across a larger organization, do as much face to face as possible first. This will help you refine your message much more efficiently.

Empowering Employees for Broad-Based Action

“Empowering employees” is management consultancy speak for helping them do their job. Most often this means something pretty straightforward - removing roadblocks.

You’ve shared your vision and built up excitement - and then what happens? Things get in the way. One of the most common problems is that people are too busy doing what they do now to have the bandwidth to change - this is often why companies bring new people into an organization (perhaps through hires or as consultants) to give teams extra bandwidth and expertise to make a change.

As a concrete example, when it comes to microservice adoption, the existing processes around provisioning of infrastructure can be a real problem. If the way your organization handles the deployment of a new production service involves placing an order for hardware six months in advance, then embracing technology that allows for the on-demand provisioning of virtualized execution environments (like virtual machines or containers) could be a huge boon, as could the shift to a public cloud vendor.

Don’t throw new technology into the mix for the sake of it. Bring it in to solve concrete problems you see. As you identify obstacles, bring in new technology to fix those problems. Don’t fall into the trap of spending a year defining The Perfect Microservice Platform only to find that it doesn’t actually solve the problems you have.

As part of the Test Mercenaries program at Google, we ended up creating frameworks to make test suite creation and management easier, promoted visibility of tests as part of the code review system, and even ended up driving the creation of a new company-wide CI tool to make test execution easier. We didn’t do this all at once, though. We worked with a few teams, saw the pain points, learned from that, and then invested time in bringing in new tools. We also started small - making test suite creation easier was a pretty simple process, but changing the company-wide code review system was a much bigger ask. We didn’t try that until we’d had some successes elsewhere.

Generating Short-Term Wins

If it takes too long for people to see progress being made, they’ll lose faith in the vision. So go for some quick wins. Focusing initially on small, easy, low-hanging fruit will help build momentum. When it comes to microservice decomposition, functionality that can easily be extracted from our monolith should be high on your list. But as we’ve already established, microservices themselves aren’t the goal - so you’ll need to balance the ease of extraction of some piece of functionality versus the benefit that will bring. We’ll come back to that idea later in this blog.

Of course, if you choose something you think is easy and end up having huge problems with making it happen, that could be valuable insight into your strategy, and may make you reconsider what you’re doing. This is totally OK! The key thing is that if you focus on something easy first, you’re likely to gain this insight early. Making mistakes is natural - all we can do is structure things to make sure we learn from those mistakes as quickly as possible.

Consolidating Gains and Producing More Change

Once you’ve got some success, it becomes important not to rest on your laurels. Quick wins might be the only wins if you don’t continue to push on. It’s important you pause and reflect after successes (and failures) so you can think about how to keep driving the change. You may need to change your approach as you reach different parts of the organization.

With a microservice transition, as you cut deeper, you may find it harder going. Handling decomposition of a database may be something you put off initially, but it can’t be delayed forever. You have many techniques at your disposal, but working out which is the right approach will take careful consideration. Just remember that a decomposition technique that worked for you in one area of your monolith may not work somewhere else - you’ll need to be constantly trying new ways of making forward progress.

Anchoring New Approaches in the Culture

By continuing to iterate, roll out changes, and share the stories of successes (and failures), the new way of working will start to become business as usual. A large part of this is about sharing stories with your colleagues, with other teams and other folks in the organization. It’s all too often that once we’ve solved a hard problem, we just move on to the next. For change to scale - and stick - continually finding ways to share information inside your organization is essential.

Over time, the new way of doing something becomes the way that things are done. If you look at companies that are a long way down the road of adopting microservice architectures, whether or not it’s the right approach has ceased to be a question. This is the way things are now done, and the organization understands how to do them well.

This, in turn, can create a new problem. Once the Big New Idea becomes the Established Way of Working, how can you make sure that future, better approaches have space to emerge and perhaps displace how things are done?

Importance of Incremental Migration

If you do a big-bang rewrite, the only thing you’re guaranteed of is a big bang.

Martin Fowler

If you get to the point of deciding that breaking apart your existing monolithic system is the right thing to do, I strongly advise you to chip away at these monoliths, extracting a bit at a time. An incremental approach will help you learn about microservices as you go, and will also limit the impact of getting something wrong (and you will get things wrong!). Think of our monolith as a block of marble. We could blow the whole thing up, but that rarely ends well. It makes much more sense to just chip away at it incrementally.

The issue is that the cost of an experiment to move a nontrivial monolithic system over to a microservice architecture can be large, and if you’re doing everything at once, it can be difficult to get good feedback about what is (or isn’t) working well. It’s much easier to break such a journey into smaller stages; each one can be analyzed and learned from. It’s for this reason that I have been a huge fan of iterative software delivery since even before the advent of agile - accepting that I will make mistakes, and therefore need a way to reduce the size of those mistakes.

Any transition to a microservice architecture should bear these principles in mind. Break the big journey into lots of little steps. Each step can be carried out and learned from. If it turns out to be a retrograde step, it was only a small one. Either way, you learn from it, and the next step you take will be informed by those steps that came before.

As we discussed earlier, breaking things into smaller pieces also allows you to identify quick wins and learn from them. This can help make the next step easier and can help build momentum. By splitting out microservices one at a time, you also get to unlock the value they bring incrementally, rather than having to wait for some big bang deployment.

All of this leads to what has become almost stock advice for people looking at microservices. If you think it’s a good idea, start somewhere small. Choose one or two areas of functionality, implement them as microservices, get them deployed into production, and reflect on whether it worked. I’ll take you through a model for identifying which microservices you should start with later in the article.

It’s Production That Counts

It is really important to note that the extraction of a microservice can’t be considered complete until it is in production and being actively used. Part of the goal of incremental extraction is to give us chances to learn from and understand the impact of the decomposition itself. The vast majority of the important lessons will not be learned until your service hits production.

Microservice decomposition can cause issues with troubleshooting, tracing flows, latency, referential integrity, cascading failures, and a host of other things. Most of those problems are things you’ll notice only after you hit production. In the next couple of my articles, we will look at techniques that allow you to deploy into a production environment but limit the impact of issues as they occur. If you make a small change, it’s much easier to spot (and fix) a problem you create.

Cost of Change

There are many reasons why, throughout my blog, I promote the need to make small, incremental changes, but one of the key drivers is to understand the impact of each alteration we make and change course if required. This allows us to better mitigate the cost of mistakes, but doesn’t remove the chance of mistakes entirely. We can - and will - make mistakes, and we should embrace that. What we should also do, though, is understand how best to mitigate the costs of those mistakes.

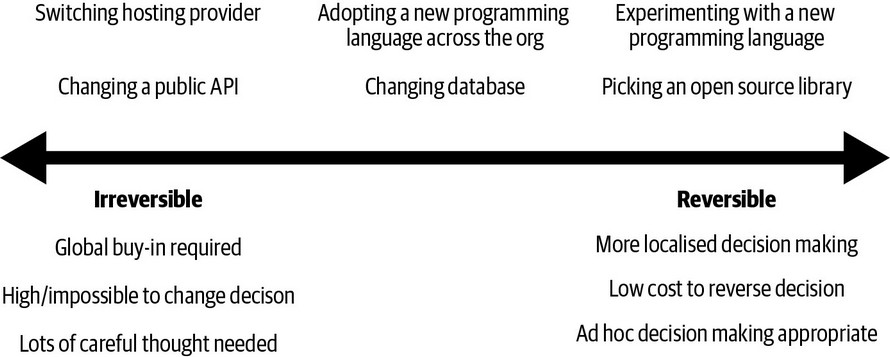

Reversible and Irreversible Decisions

Jeff Bezos, Amazon CEO, provides interesting insights into how Amazon works in his yearly shareholder letters. The 2015 letter held this gem:

Some decisions are consequential and irreversible or nearly irreversible - one-way doors - and these decisions must be made methodically, carefully, slowly, with great deliberation and consultation. If you walk through and don’t like what you see on the other side, you can’t get back to where you were before. We can call these Type 1 decisions. But most decisions aren’t like that - they are changeable, reversible - they’re two-way doors. If you’ve made a suboptimal Type 2 decision, you don’t have to live with the consequences for that long. You can reopen the door and go back through. Type 2 decisions can and should be made quickly by high judgment individuals or small groups.

Jeff Bezos, Letter to Amazon Shareholders (2015)

Bezos goes on to say that people who don’t make decisions often may fall into the trap of treating Type 2 decisions like Type 1 decisions. Everything becomes life or death; everything becomes a major undertaking. The problem is that adopting a microservice architecture brings with it loads of options regarding how you do things - which means you may need to make many more decisions than before. And if you - or your organization - aren’t used to that, you may find yourself falling into this trap, and progress will grind to a halt.

The terms aren’t terribly descriptive, and it can be hard to remember what Type 1 or Type 2 actually means, so I prefer the names Irreversible (for Type 1) or Reversible (for Type 2).

While I like this concept, I don’t think decisions always fall neatly into either of these buckets; it feels slightly more nuanced than that. I’d rather think in terms of Irreversible and Reversible as being on two ends of a spectrum, as shown in Figure 3.

Figure 3. The differences between Irreversible and Reversible decisions, with examples along the spectrum

Assessing where you are on that spectrum can be challenging initially, but fundamentally it all comes back to understanding the impact if you decide to change your mind later. The bigger the impact a later course correction will cause, the more it starts to look like an Irreversible decision.

The reality is, the vast majority of decisions you will make as part of a microservice transition will be toward the Reversible end of the spectrum. Software has a property where rollbacks or undos are often possible; you can roll back a software change or a software deployment. What you do need to take into account is the cost of changing your mind later.

The Irreversible decisions will need more involvement, careful thought, and consideration, and you should (rightly) take more time over them. The further we get to the right on this spectrum, toward our Reversible decisions, the more we can just rely on our colleagues who are close to the problem at hand to make the right call, knowing that if they make the wrong decision, it’s an easy thing to fix later.

Easier Places to Experiment

The cost involved in moving code around within a codebase is pretty small. We have lots of tools that support us, and if we cause a problem, the fix is generally quick. Splitting apart a database, however, is much more work, and rolling back a database change is just as complex. Likewise, untangling an overly coupled integration between services, or having to completely rewrite an API that is used by multiple consumers can be a sizeable undertaking. The large cost of change means that these operations are increasingly risky. How can we manage this risk? My approach is to try to make mistakes where the impact will be lowest.

I tend to do much of my thinking in the place where the cost of change and the cost of mistakes is as low as it can be: the whiteboard. Sketch out your proposed design. See what happens when you run use cases across what you think your service boundaries will be. For our music shop, for example, imagine what happens when a customer searches for a record, registers with the website, or purchases an album. What calls get made? Do you start seeing odd circular references? Do you see two services that are overly chatty, which might indicate they should be one thing?
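The same whiteboard exercise can be roughed out in a few lines of code if you want to keep the results around. The sketch below is only an illustration: the service names and the calls in each use case are invented for the music shop and don't come from any real design. It walks the use cases across the proposed boundaries, counts how often each pair of services talks to each other (chattiness), and looks for a circular reference in the resulting call graph.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

Call = Tuple[str, str]  # (caller, callee)

# Hypothetical use cases and service boundaries for the music shop.
use_cases: Dict[str, List[Call]] = {
    "search for a record": [("Web", "Catalog")],
    "register with the website": [
        ("Web", "Customer"),
        ("Customer", "Notification"),
    ],
    "purchase an album": [
        ("Web", "Order"),
        ("Order", "Catalog"),
        ("Order", "Payment"),
        ("Payment", "Order"),
        ("Order", "Payment"),
        ("Order", "Notification"),
    ],
}


def chattiness(cases: Dict[str, List[Call]]) -> Counter:
    """Count calls between each pair of services, ignoring direction."""
    counts: Counter = Counter()
    for calls in cases.values():
        for caller, callee in calls:
            counts[tuple(sorted((caller, callee)))] += 1
    return counts


def build_graph(cases: Dict[str, List[Call]]) -> Dict[str, Set[str]]:
    """Collapse all use cases into a caller -> callees graph."""
    graph: Dict[str, Set[str]] = {}
    for calls in cases.values():
        for caller, callee in calls:
            graph.setdefault(caller, set()).add(callee)
    return graph


def find_cycle(graph: Dict[str, Set[str]]) -> List[str]:
    """Return one circular reference as a list of services, or []."""
    visiting: List[str] = []
    done: Set[str] = set()

    def visit(node: str) -> List[str]:
        if node in done:
            return []
        if node in visiting:
            return visiting[visiting.index(node):] + [node]
        visiting.append(node)
        for nxt in sorted(graph.get(node, ())):
            cycle = visit(nxt)
            if cycle:
                return cycle
        visiting.pop()
        done.add(node)
        return []

    for start in sorted(graph):
        cycle = visit(start)
        if cycle:
            return cycle
    return []


chattiest_pair, call_count = chattiness(use_cases).most_common(1)[0]
cycle = find_cycle(build_graph(use_cases))
```

With this made-up data, Order and Payment call each other in both directions and more often than any other pair, which is the sort of signal that might prompt you to ask whether they should be one thing. The whiteboard remains the cheaper place to start; a sketch like this only helps once the number of use cases outgrows what you can hold in your head.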

So Where Do We Start?

OK, so we’ve spoken about the importance of clearly articulating our goals and understanding the potential trade-offs that might exist. What next? Well, we need a view of what pieces of functionality we may want to extract into services, so that we can start thinking rationally about what microservices we might create next. When it comes to decomposing an existing monolithic system, we need to have some form of logical decomposition to work with, and this is where domain-driven design can come in handy.

Domain-Driven Design

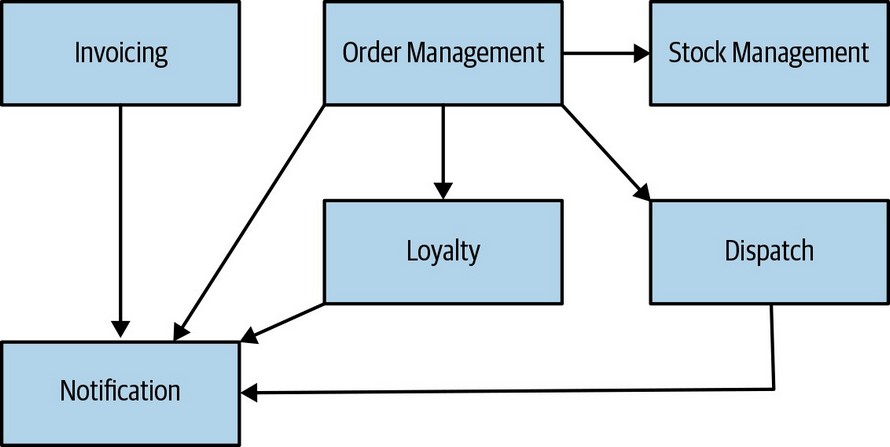

Domain-driven design is an important concept in helping define boundaries for our services. Developing a domain model also helps us when it comes to working out how to prioritize our decomposition too. In Figure 4, we have an example high-level domain model for Music Corp. What you’re seeing is a collection of bounded contexts identified as a result of a domain modeling exercise. We can clearly see relationships between these bounded contexts, which we’d imagine would represent interactions within the organization itself.

Figure 4. The bounded contexts and the relationships between them for Music Corp

Each of these bounded contexts represents a potential unit of decomposition. As we discussed previously, bounded contexts make great starting points for defining microservice boundaries. So already we have our list of things to prioritize. But we have useful information in the form of the relationships between these bounded contexts too - which can help us assess the relative difficulty in extracting the different pieces of functionality. We’ll come back to this idea shortly.

I consider coming up with a domain model as a near-essential step that’s part of structuring a microservice transition. What can often be daunting is that many people have no direct experience of creating such a view. They also worry greatly about how much work is involved. The reality is that while having experience can greatly help in coming up with a logical model like this, even a small amount of effort exerted can yield some really useful benefits.

How Far Do You Have to Go?

Approaching the decomposition of an existing system is a daunting prospect. Many people probably built and continue to build the system, and in all likelihood, a much larger group of people actually use it in a day-to-day fashion. Given the scope, trying to come up with a detailed domain model of the entire system may feel overwhelming.

It’s important to understand that what we need from a domain model is just enough information to make a reasonable decision about where to start our decomposition. You probably already have some ideas of the parts of your system that are most in need of attention, and therefore it may be enough to come up with a generalized model for the monolith in terms of high-level groupings of functionality, and pick the parts that you want to dive deeper into. There is always a danger that if you look only at part of the system you may miss larger systemic issues that require addressing. But I wouldn’t obsess about it - you don’t have to get it right first time; you just need enough information to make some informed next steps. You can - and should - continuously refine your domain model as you learn more, and keep it fresh to reflect new functionality as it’s rolled out.

Event Storming

Event Storming, created by Alberto Brandolini, is a collaborative exercise involving technical and nontechnical stakeholders who together define a shared domain model. Event Storming works from the bottom up. Participants start by defining the “Domain Events” - things that happen in the system. Then these events are grouped into aggregates, and the aggregates are then grouped into bounded contexts.

It’s important to note that Event Storming doesn’t mean you have to then build an event-driven system. Instead, it focuses on understanding what (logical) events occur in the system - identifying the facts that you care about as a stakeholder of the system. These domain events can map to events fired as part of an event-driven system, but they could be represented in different ways.
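If it helps to picture the output of such a session, the bottom-up grouping can be sketched as a small data structure. This is purely illustrative: the events, aggregates, and bounded contexts below are hypothetical examples, and in a real session the groupings come out of discussion between stakeholders, not out of a lookup table.

```python
from typing import Dict, List

# Step 1: the domain events participants wrote down (hypothetical).
events: List[str] = [
    "Order Placed",
    "Order Cancelled",
    "Payment Received",
    "Invoice Sent",
    "Stock Reserved",
]

# Step 2: the aggregate each event was grouped under (hypothetical).
event_to_aggregate: Dict[str, str] = {
    "Order Placed": "Order",
    "Order Cancelled": "Order",
    "Payment Received": "Payment",
    "Invoice Sent": "Invoice",
    "Stock Reserved": "Stock Item",
}

# Step 3: the bounded context each aggregate was grouped under.
aggregate_to_context: Dict[str, str] = {
    "Order": "Order Processing",
    "Payment": "Order Processing",
    "Invoice": "Invoicing",
    "Stock Item": "Warehouse",
}


def build_model(
    events: List[str],
    event_to_aggregate: Dict[str, str],
    aggregate_to_context: Dict[str, str],
) -> Dict[str, Dict[str, List[str]]]:
    """Assemble context -> aggregate -> events from the bottom up."""
    model: Dict[str, Dict[str, List[str]]] = {}
    for event in events:
        aggregate = event_to_aggregate[event]
        context = aggregate_to_context[aggregate]
        model.setdefault(context, {}).setdefault(aggregate, []).append(event)
    return model


model = build_model(events, event_to_aggregate, aggregate_to_context)
```

Note that nothing here implies an event-driven implementation; the events are simply the facts the stakeholders said they care about.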

One of the things Alberto is really focusing on with this technique is the idea of the collective defining the model. The output of this exercise isn’t just the model itself; it is the shared understanding of the model. For this process to work, you need to get the right stakeholders in the room - and often that is the biggest challenge.

Exploring Event Storming in more detail is outside the scope of this blog, but it’s a technique I’ve used and like very much. If you want to explore more, you could read Alberto’s Introducing EventStorming (currently in progress).

Using a Domain Model for Prioritization

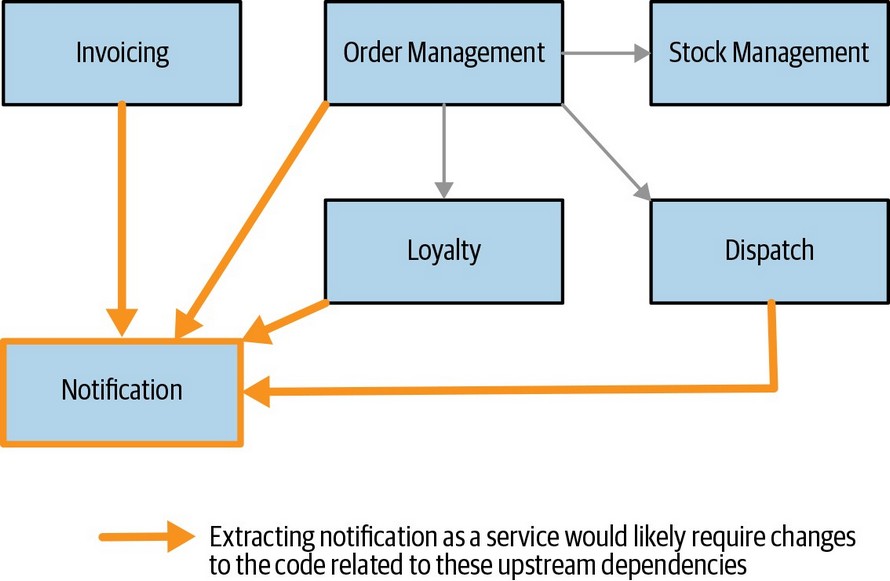

We can gain some useful insights from diagrams like Figure 4. Based on the number of upstream or downstream dependencies, we can extrapolate a view regarding which functionality is likely to be easier - or harder - to extract. For example, if we consider extracting Notification functionality, then we can clearly see a number of inbound dependencies, as indicated in Figure 5 - lots of parts of our system require the use of this behavior. If we want to extract out our new Notification service, we’d therefore have a lot of work to do with the existing code, changing calls from being local calls to the existing notification functionality and making them service calls instead.

Figure 5. Notification functionality seems logically coupled from our domain model point of view, so may be harder to extract

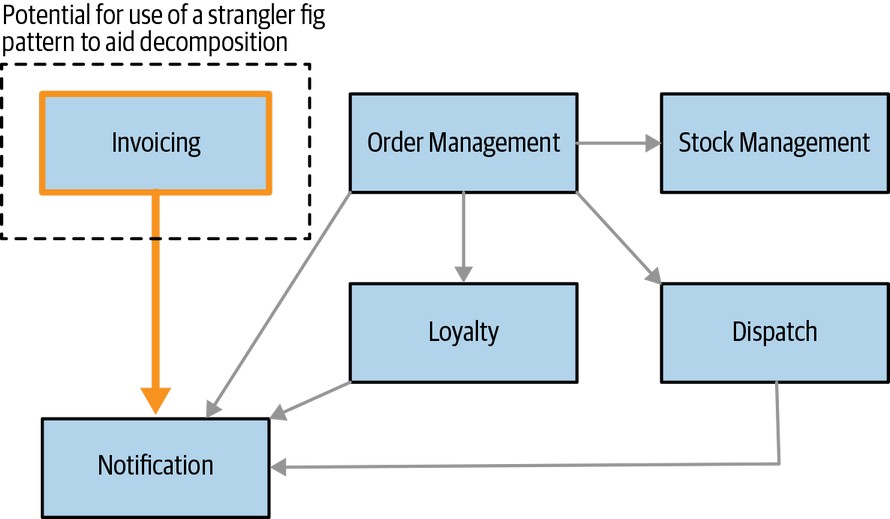

So Notification may not be a good place to start. On the other hand, as highlighted in Figure 6, Invoicing may well represent a much easier piece of system behavior to extract; it has no inbound dependencies, which would reduce the required changes we’d need to make to the existing monolith. A pattern like the strangler fig could be effective in these cases, as we can easily proxy these inbound calls before they hit the monolith.

Figure 6. Invoicing appears to be easier to extract
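The dependency counting described above is simple enough to sketch in code. The edges below are assumptions made up for illustration; they are not read off Figure 4. Only the broad shape matches the text: lots of contexts depend on Notification, and nothing depends on Invoicing.

```python
from typing import Dict, List

# "X": ["Y", ...] means context X depends on (calls) context Y.
# Hypothetical relationships, not the actual Music Corp model.
depends_on: Dict[str, List[str]] = {
    "Order Processing": ["Notification", "Warehouse", "Customer Management"],
    "Invoicing": ["Order Processing", "Notification"],
    "Customer Management": ["Notification"],
    "Warehouse": ["Notification"],
    "Notification": [],
}


def inbound_counts(depends_on: Dict[str, List[str]]) -> Dict[str, int]:
    """Count how many other contexts depend on each context."""
    counts = {context: 0 for context in depends_on}
    for targets in depends_on.values():
        for target in targets:
            counts[target] = counts.get(target, 0) + 1
    return counts


def easiest_first(depends_on: Dict[str, List[str]]) -> List[str]:
    """Order contexts by inbound dependencies, fewest first."""
    counts = inbound_counts(depends_on)
    return sorted(counts, key=lambda context: (counts[context], context))


counts = inbound_counts(depends_on)
ranking = easiest_first(depends_on)
```

With these invented edges, Invoicing comes first in the ranking and Notification last. Treat a ranking like this as a first guess only: it reflects the logical model, and says nothing about how tangled the underlying code or data actually is.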

When assessing likely difficulty of extraction, these relationships are a good way to start, but we have to understand that this domain model represents a logical view of an existing system. There is no guarantee that the underlying code structure of our monolith is structured in this way. This means that our logical model can help guide us in terms of pieces of functionality that are likely to be more (or less) coupled, but we may still need to look at the code itself to get a better assessment of the degree of entanglement of the current functionality. A domain model like this won’t show us which bounded contexts store data in a database. We might find that Invoicing manages lots of data, meaning we’d need to consider the impact of database decomposition work. We can and should look to break apart monolithic datastores, but this may not be something we want to start off with for our first couple of microservices.

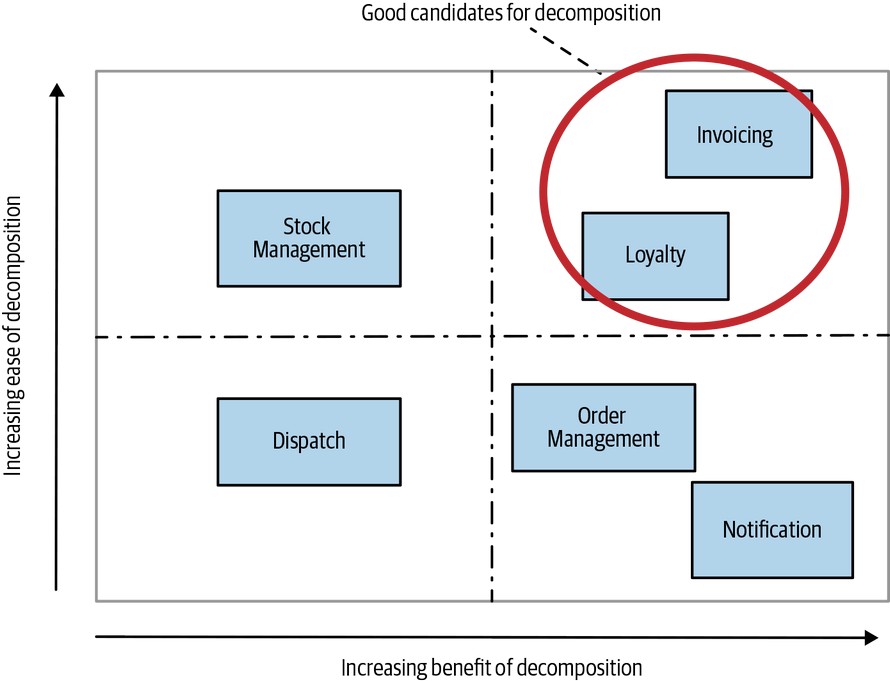

So we can look at things through a lens of what looks easy and what looks hard, and this is a worthwhile activity - we want to find some quick wins after all! However, we have to remember that we’re looking at microservices as a way of achieving something specific. We may identify that Invoicing does, in fact, represent an easy first step, but if our goal is to help improve time to market, and the Invoicing functionality is hardly ever changed, then this may not be a good use of our time.

We need, therefore, to combine our view of what is easy and what is hard, together with our view of what benefits microservice decomposition will bring.

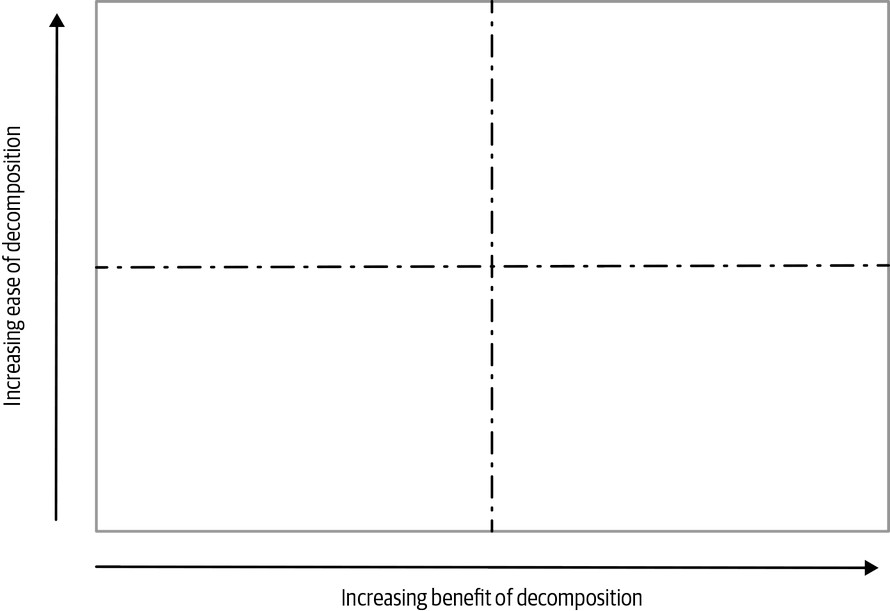

A Combined Model

We want some quick wins to make early progress, to build a sense of momentum, and to get early feedback on the efficacy of our approach. This will push us toward wanting to choose easier things to extract. But we also need to gain some benefits from the decomposition - so how do we factor that into our thinking?

Fundamentally, both forms of prioritization make sense, but we need a mechanism for visualizing both together and making appropriate trade-offs. I like to use a simple structure for this, as shown in Figure 7. For each candidate service to be extracted, you place it along the two axes displayed. The x-axis represents the value that you think the decomposition will bring, increasing to the right. Along the y-axis, you order things based on their difficulty, with the easiest extractions at the top.

Figure 7. A simple two-axis model for prioritizing service decomposition

By working through this process as a team, you can come up with a view of what could be good candidates for extraction - and like every good quadrant model, it’s the stuff in the top right we like, as Figure 8 shows. Functionality there, including Invoicing, represents functionality we think should be easy to extract, and will also deliver some benefit. So, choose one (or maybe two) services from this group as your first services to extract.

As you start to make changes, you’ll learn more. Some of the things you thought were easy will turn out to be hard. Some of the things you thought would be hard will turn out to be easy. This is natural! But it does mean that it’s important to revisit this prioritization exercise and replan as you learn more. Perhaps as you chip away, you realize that Notifications might be easier to extract than you thought.

Figure 8. An example of the prioritization quadrant in use
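If it helps to make the exercise concrete, the quadrant can be sketched in a few lines of Python. This is only an illustration of the idea, not a tool I'm prescribing: the 1 to 5 scales, the `threshold` cutoff, the scores, and the "Order Management" and "Customer Loyalty" candidates are all assumptions made up for the example. Only Invoicing and Notifications come from the discussion above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Candidate:
    name: str
    value: int  # expected benefit of extraction, 1 (low) to 5 (high); a team estimate
    ease: int   # ease of extraction, 1 (hard) to 5 (easy); a team estimate


def quick_wins(candidates, threshold=3):
    """Return the 'top right' candidates (valuable and easy), best first."""
    picked = [c for c in candidates if c.value >= threshold and c.ease >= threshold]
    return sorted(picked, key=lambda c: (c.value + c.ease, c.value), reverse=True)


# Illustrative scores only; real numbers come out of the team exercise.
candidates = [
    Candidate("Invoicing", value=4, ease=5),
    Candidate("Notifications", value=4, ease=2),
    Candidate("Order Management", value=5, ease=1),   # hypothetical candidate
    Candidate("Customer Loyalty", value=2, ease=4),   # hypothetical candidate
]

first_picks = [c.name for c in quick_wins(candidates)]
print(first_picks)

# Replanning: suppose we learn that Notifications is easier than we thought.
revised = [replace(c, ease=4) if c.name == "Notifications" else c for c in candidates]
revised_picks = [c.name for c in quick_wins(revised)]
print(revised_picks)
```

The numbers matter far less than the conversation that produces them. Rerunning the ranking with revised estimates is a cheap way to keep the plan honest as you learn more.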

Reorganizing Teams

After this blog, we’ll mostly be focusing on the changes that you’ll need to make to your architecture and code to create a successful transition to a microservice architecture. But as we’ve already explored, aligning architecture and organization can be key to getting the most out of a microservice architecture.

However, you might be in a situation where your organization needs to change to take advantage of these new ideas. While an in-depth study of organizational change is outside the scope of my blog, I want to leave you with a few ideas before we dive into the deeper technical side of things.

Shifting Structures

Historically, IT organizations were structured around core competency. Java developers were in a team with other Java developers. Testers were in a team with other testers. The DBAs were all in a team by themselves. When creating software, people from these teams would be assigned to work on often short-lived initiatives.

The act of creating software therefore required multiple hand-offs between teams. The business analyst would speak to a customer and find out what they wanted. The analyst would then write up a requirement and hand it to the development team to work on. A developer would finish some work and hand that to the test team. If the test team found an issue, it would be sent back. If it was OK, it might proceed to the operations team to be deployed.

This siloing seems quite familiar. Layered architectures could require changes to multiple services when rolling out even simple changes. The same applies with organizational silos: the more teams that need to be involved in creating or changing a piece of software, the longer it can take.

These silos have been breaking down. Dedicated test teams are now a thing of the past for many organizations. Instead, test specialists are becoming an integrated part of delivery teams, enabling developers and testers to work more closely together. The DevOps movement has also led in part to many organizations shifting away from centralized operations teams, instead pushing more responsibility for operational considerations onto the delivery teams.

In situations where the roles of these dedicated teams have been pushed into the delivery teams, the roles of these centralized teams have shifted. They’ve gone from doing the work themselves to helping the delivery teams do the work instead. This can involve embedding specialists with the teams, creating self-service tooling, providing training, or a whole host of other activities. Their responsibility has shifted from doing to enabling.

Increasingly, therefore, we’re seeing more independent, autonomous teams, able to be responsible for more of the end-to-end delivery cycle than ever before. Their focus is on different areas of the product, rather than a specific technology or activity - just in the same way that we’re switching from technical-oriented services toward services modeled around vertical slices of business functionality. Now, the important thing to understand is that while this shift is a definite trend that has been evident for many years, it isn’t universal, nor is such a shift a quick transformation.

It’s Not One Size Fits All

We started this article by discussing how your decision about whether to use microservices should be rooted in the challenges you are facing, and the changes you want to bring about. Making changes in your organizational structure is just as important. Whether and how your organization needs to change should be based on your context, your working culture, and your people. This is why just copying other people’s organizational design can be especially dangerous.

Earlier, we touched very briefly on the Spotify model. Interest in how Spotify organized itself grew after the well-known 2012 paper “Scaling Agile @ Spotify” by Henrik Kniberg and Anders Ivarsson. This is the paper that popularized the notion of Squads, Chapters, and Guilds, terms that are now commonplace (albeit misunderstood) in our industry. Ultimately, this led to people christening this “the Spotify model,” even though this was never a term used by Spotify.

Subsequently, there was a rush of companies adopting this structure. But as with microservices, many organizations gravitated to the Spotify model without sufficient thought as to the context in which Spotify operates, their business model, the challenges they are facing, or the culture of the company. It turns out that an organizational structure that worked well for a Swedish music streaming company may not work for an investment bank. In addition, the original paper showed a snapshot of how Spotify worked in 2012 and things have changed since. It turns out not even Spotify uses the Spotify model.

The same needs to apply to you. Take inspiration from what other organizations have done, absolutely, but don’t assume that what worked for someone else will work in your context. As Jessica Kerr once put it, in relation to the Spotify model, “Copy the questions, not the answers.” The snapshot of Spotify’s organizational structure reflected changes it had carried out to solve its problems. Copy that flexible, questioning attitude in how you do things, and try new things, but make sure the changes you apply are rooted in an understanding of your company, its needs, its people, and its culture.

To give a specific example, I see a lot of companies saying to their delivery teams, “Right, now you all need to deploy your software and run 24/7 support.” This can be incredibly disruptive and unhelpful. Sometimes, making big, bold statements can be a great way to get things moving, but be prepared for the chaos it can bring. If you’re working in an environment where the developers are used to working 9–5, not being on call, have never worked in a support or operations environment, and wouldn’t know their SSH from their elbow, then this is a great way to alienate your staff and lose a lot of people. If you think that this is the right move for your organization, then great! But talk about it as an aspiration, a goal you want to achieve, and explain why. Then work with your people to craft a journey toward that goal.

If you really want to make a shift toward teams more fully owning the whole life cycle of their software, understand that the skills of those teams need to change. You can provide help and training, or add new people to the team (perhaps by embedding people from the current operations team in delivery teams). No matter what change you want to bring about, just as with our software, you can make this happen in an incremental fashion.

DevOps Doesn’t Mean NoOps!

There is widespread confusion around DevOps, with some people assuming that it means that developers do all the operations, and that operations people are not needed. This is far from the case. Fundamentally, DevOps is a cultural movement based on the concept of breaking down barriers between development and operations. You may still want specialists in these roles, or you might not, but whatever you want to do, you want to promote common alignment and understanding across the people involved in delivering your software, no matter what their specific responsibilities are.

For more on this, I recommend Team Topologies, which explores DevOps organizational structures. Another excellent resource on this topic, albeit broader in scope, is The DevOps Handbook.

Making a Change

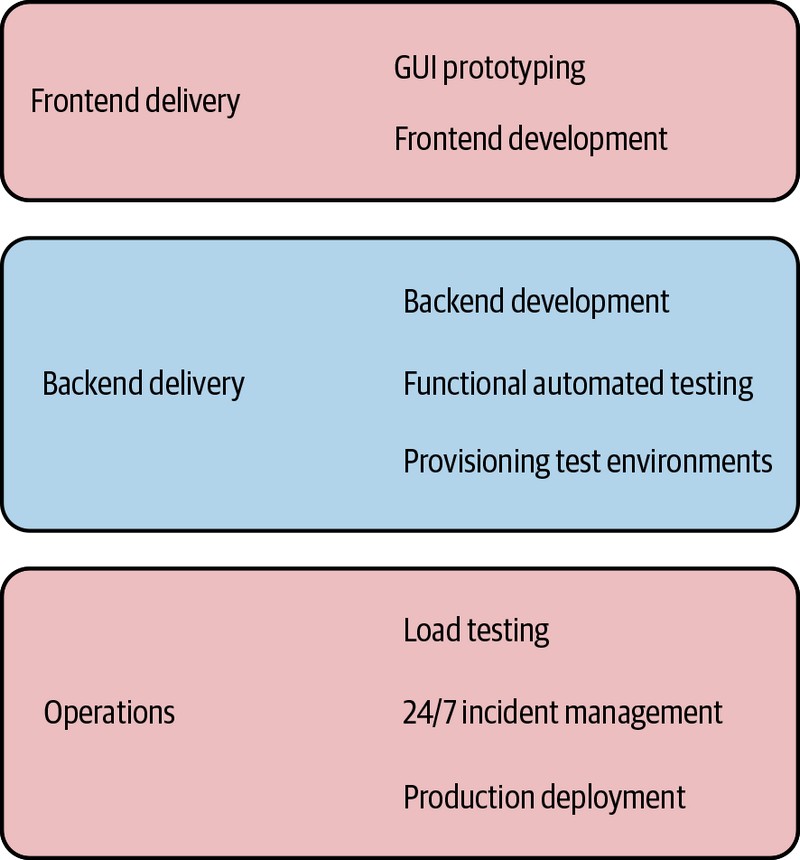

So if you shouldn’t just copy someone else’s structure, where should you start? When working with organizations that are changing the role of delivery teams, I like to begin with explicitly listing all the activities and responsibilities that are involved in delivering software within that company. Next, map these activities to your existing organizational structure.

If you’ve already modeled your path to production (something I am a big fan of), you could overlay those ownership boundaries on an existing view. Alternatively, something simple like Figure 9 could work well. Just get stakeholders from all the roles involved, and brainstorm as a group all the activities that go into shipping software in your company.

Figure 9. Showing a subset of the delivery-related responsibilities, and how they map to existing teams

Having this understanding of the current “as-is” state is very important, as it can help give everyone a shared understanding of all the work involved. The nature of a siloed organization is that you can struggle to understand what one silo does when you’re in a different silo. I find that this really helps organizations be honest with themselves as to how quickly things can change. You’ll likely find that not all teams are equal too - some may already do a lot for themselves, and others may be entirely dependent on other teams for everything from testing to deployment.

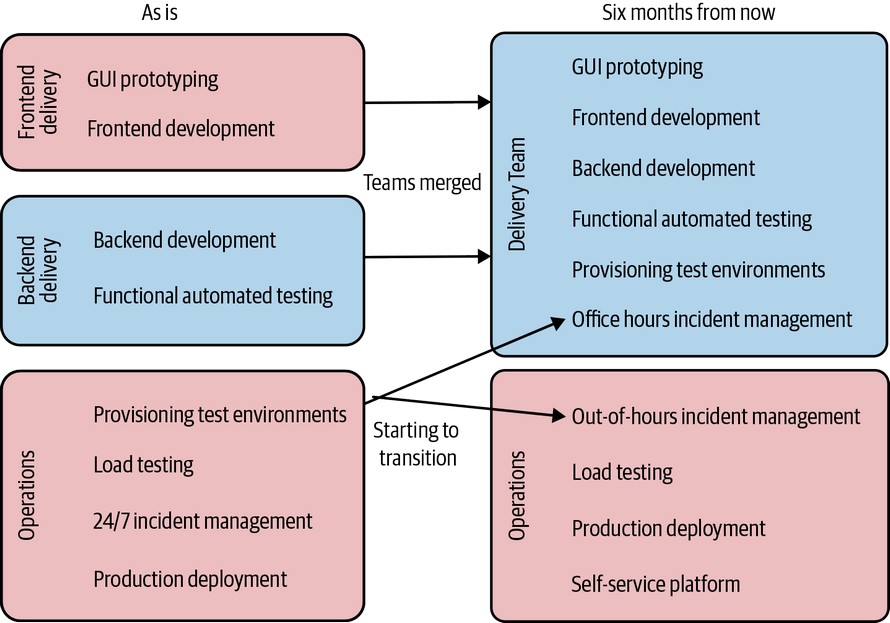

If you find your delivery teams are already deploying software themselves for test and user testing purposes, then the step to production deployments may not be that large. On the other hand, you still have to consider the impact of taking on tier 1 support (carrying a pager), diagnosing production issues, and so on. These skills are built up by people over years of work, and expecting developers to get up to speed with this overnight is totally unrealistic.

Once you have your as-is picture, redraw things with your vision for how things should be in the future, within some sensible timescale. I find that six months to a year is probably as far forward as you’ll want to explore in detail. What responsibilities are changing hands? How will you make that transition happen? What is needed to make that shift? What new skills will the teams need? What are the priorities for the various changes you want to make?

Taking our earlier example, in Figure 10 we see that we’ve decided to merge frontend and backend team responsibilities. We also want teams to be able to provision their own test environments. But to do that, the operations team will need to provide a self-service platform for the delivery team to use. We want the delivery team to ultimately handle all support for their software, and so we want to start getting the teams comfortable with the work involved. Having them own their test deployments is a good first step. We’ve also decided they’ll handle all incidents during the working day, giving them a chance to come up to speed with that process in a safe environment, where the existing operations team is on hand to coach them.

Figure 10. One example of how we might want to reassign responsibilities within our organization
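One lightweight way to keep track of an exercise like this is to record the as-is and to-be ownership side by side and list what changes hands. The sketch below is purely illustrative. The activity names, the team names, and the choice to leave production deployment and out-of-hours support with operations are my assumptions for the example, loosely based on the scenario described above; your own list will look different.

```python
# Who owns each delivery activity today ("as-is"). Names are illustrative.
as_is = {
    "UI development": "Frontend team",
    "Backend development": "Backend team",
    "Provision test environments": "Operations team",
    "Deploy to test": "Operations team",
    "Deploy to production": "Operations team",
    "In-hours incident handling": "Operations team",
    "Out-of-hours support": "Operations team",
}

# The target state ("to-be"): start from today and override what we want to move.
to_be = {
    **as_is,
    "UI development": "Delivery team",
    "Backend development": "Delivery team",
    "Provision test environments": "Delivery team",  # needs a self-service platform
    "Deploy to test": "Delivery team",
    "In-hours incident handling": "Delivery team",   # operations on hand to coach
}


def handovers(as_is, to_be):
    """List (activity, current_owner, future_owner) for each activity that changes hands."""
    changes = []
    for activity, current_owner in as_is.items():
        future_owner = to_be.get(activity, current_owner)
        if future_owner != current_owner:
            changes.append((activity, current_owner, future_owner))
    return changes


changes = handovers(as_is, to_be)
for activity, current_owner, future_owner in changes:
    print(f"{activity}: {current_owner} -> {future_owner}")
```

Each line of output is a transition that needs its own plan: what has to be in place first, who needs which skills, and in what order to tackle things.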

These big-picture views can really help when starting out with the changes you want to bring, but you’ll also need to spend time with the people on the ground to work out whether these changes are feasible, and if so, how to bring them about. By dividing things among specific responsibilities, you can also take an incremental approach to this shift. In our example, focusing first on eliminating the need for the operations team to provision test environments might be the right first step.

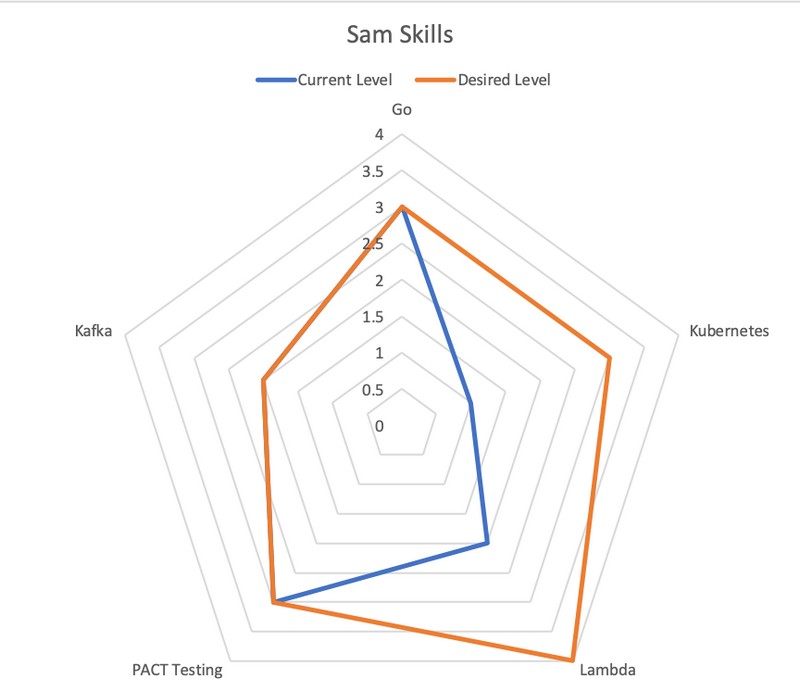

Changing Skills

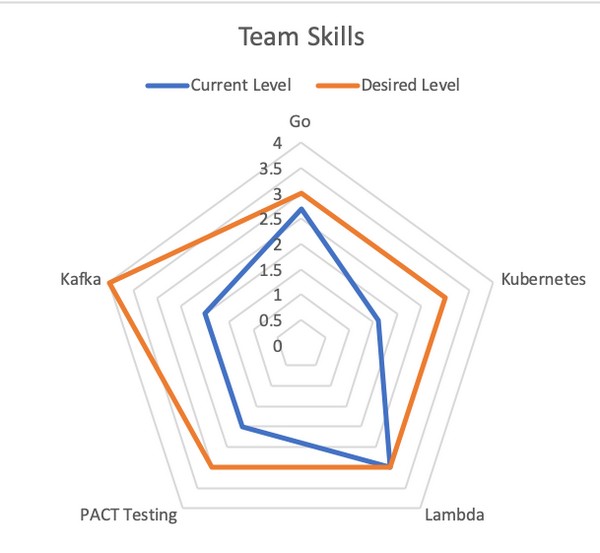

When it comes to assessing the skills that people need, and helping them bridge the gap, I’m a big fan of having people self-assess and use that to build a wider understanding of what support the team may need to carry out that change.

A concrete example of this in action is a project I was involved with during my time at ThoughtWorks. We were hired to help The Guardian newspaper rebuild its online presence. As part of this, they needed to get up to speed with a new programming language and associated technologies.

At the start of the project, our combined teams came up with a list of core skills that were important for The Guardian developers to work on. Each developer then assessed themselves against these criteria, ranking themselves from 1 (“This means nothing to me!”) to 5 (“I could write a book about this”). Each developer’s score was private; this was shared only with the person who was mentoring them. The goal was not that each developer should get each skill to 5; it was more that they themselves should set targets to reach.

As a coach, it was my job to ensure that if one of the developers I was coaching wanted to improve their Oracle skills, they had the chance to do that. This could involve making sure they worked on stories that made use of that technology, recommending videos for them to watch, suggesting a training course or conference to attend, and so on.
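The self-assessment described above boils down to comparing where someone rates themselves today with the targets they have set. Here is a minimal sketch of that comparison, assuming the same 1 to 5 scale. Apart from Oracle, the skill names and all of the scores are hypothetical, and this is not a record of how the Guardian teams actually tracked things.

```python
def skill_gaps(current, targets):
    """Return {skill: gap} for skills where the target is above the current score.

    Both arguments map a skill name to a self-assessed score from 1 to 5.
    Every skill in `targets` must also have a score in `current`.
    """
    for scores in (current, targets):
        for skill, score in scores.items():
            if not 1 <= score <= 5:
                raise ValueError(f"{skill}: score {score} is outside the 1-5 scale")

    gaps = {}
    for skill, target in targets.items():
        gap = target - current[skill]
        if gap > 0:
            gaps[skill] = gap
    # Largest gap first, so coaching effort can go where it is most needed.
    return dict(sorted(gaps.items(), key=lambda item: item[1], reverse=True))


# Hypothetical scores for one developer; shared only with their mentor.
current = {"Oracle": 2, "Automated testing": 4, "Build tooling": 1}
targets = {"Oracle": 4, "Automated testing": 4, "Build tooling": 2}

gaps = skill_gaps(current, targets)
print(gaps)
```

Note that the targets are the developer's own. Nothing here pushes every skill toward 5.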